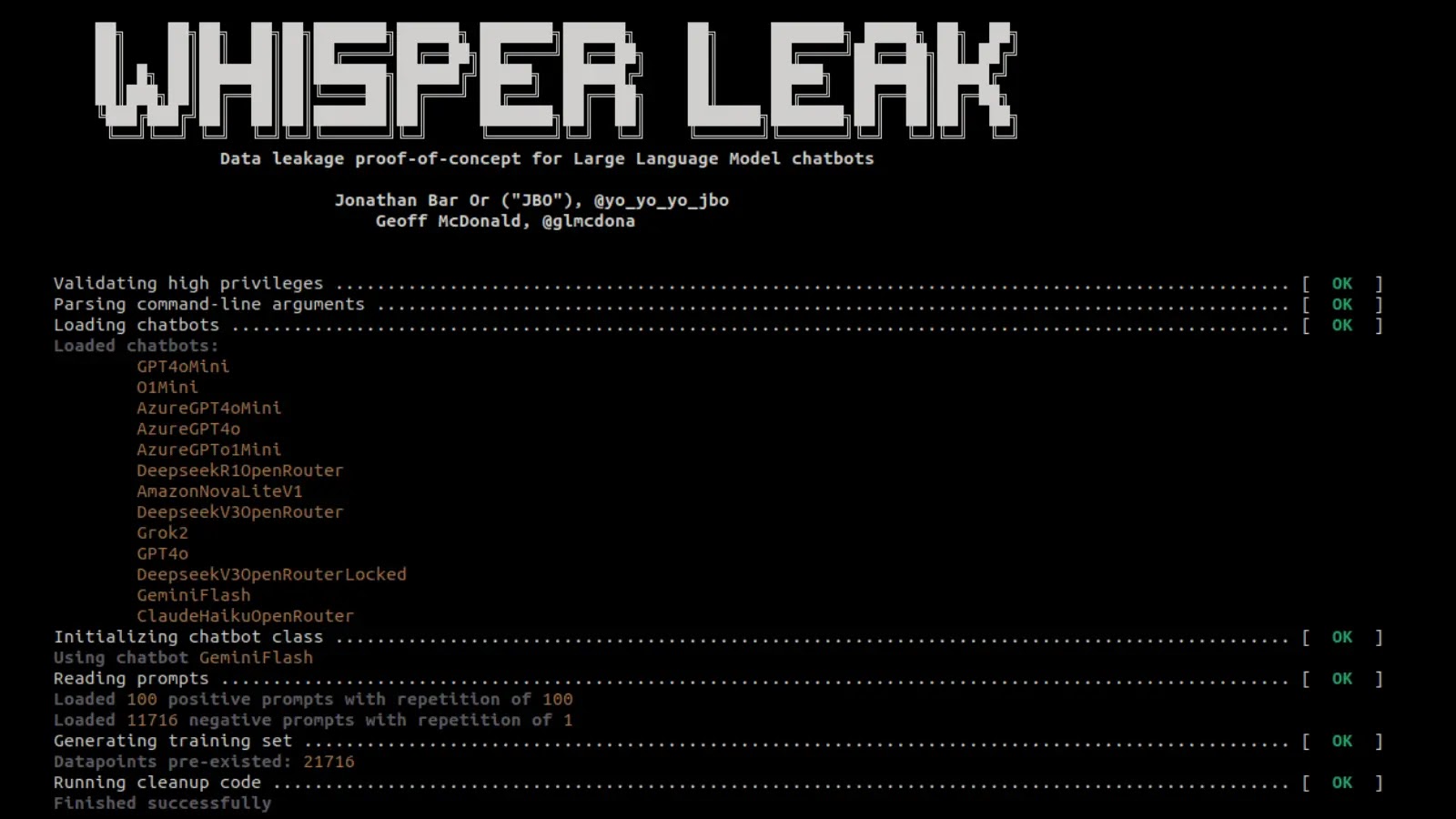

Whisper Leak: Unveiling the Privacy Risks in AI Chatbot Conversations

In an era where artificial intelligence (AI) chatbots are becoming integral to daily interactions, a newly identified vulnerability, termed Whisper Leak, has raised significant privacy concerns. This side-channel attack enables eavesdroppers to infer the topics of conversations with AI agents, even when the traffic between user and service is encrypted.

Understanding the Whisper Leak Vulnerability

Whisper Leak exploits the metadata associated with encrypted communications between users and AI chatbots. By analyzing patterns in network packet sizes and their timings, attackers can deduce the subject matter of user prompts without decrypting the actual content. This method poses a substantial threat to user privacy, especially in environments where sensitive topics are discussed.

Mechanics of the Attack

AI chatbots, such as those developed by OpenAI and Microsoft, generate responses in a token-by-token manner, streaming output to provide immediate feedback. While this process is typically protected by Transport Layer Security (TLS) encryption, Whisper Leak targets the observable characteristics of the encrypted data. Variations in packet sizes, which correspond to token lengths, and the intervals between packet transmissions reveal distinctive patterns associated with specific topics.
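To make the observable signal concrete, here is a minimal sketch of what a passive observer could compute from an encrypted stream without decrypting anything. The `Packet` type, the `trace_features` helper, and the sample values are hypothetical and chosen for illustration; they are not taken from the research described here.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass(frozen=True)
class Packet:
    timestamp: float  # seconds since the capture started
    size: int         # encrypted payload size in bytes


def trace_features(packets):
    """Summarize a stream using only packet sizes and inter-arrival gaps."""
    if not packets:
        raise ValueError("empty trace")
    sizes = [p.size for p in packets]
    # Gap between each packet and the one that follows it.
    gaps = [b.timestamp - a.timestamp for a, b in zip(packets, packets[1:])]
    return {
        "packet_count": len(packets),
        "total_bytes": sum(sizes),
        "mean_size": mean(sizes),
        "size_stdev": pstdev(sizes),
        "mean_gap": mean(gaps) if gaps else 0.0,
        "max_gap": max(gaps) if gaps else 0.0,
    }


# Invented capture, for illustration only.
trace = [Packet(0.00, 120), Packet(0.05, 131), Packet(0.12, 118), Packet(0.40, 142)]
features = trace_features(trace)
```

None of these values depend on the plaintext, which is why encryption alone does not hide them.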

Research Findings

Microsoft’s research team conducted a comprehensive study to demonstrate the feasibility of this attack. They trained classifiers on encrypted traffic data, focusing on prompts related to a sensitive topic, such as the legality of money laundering. They generated numerous variations of the target prompt and mixed them with a large dataset of unrelated questions, which served as noise. For many of the tested chatbot models, the classifiers scored above 98% (reported as area under the precision-recall curve) in distinguishing the target topic from noise, highlighting the effectiveness of this side-channel analysis.
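The idea of a topic classifier over traffic features can be sketched in a few lines. The article does not say which model families the researchers used, so the nearest-centroid classifier below is a deliberately simple stand-in, and every feature vector is invented rather than measured.

```python
from math import dist
from statistics import mean


def centroid(vectors):
    """Component-wise mean of equal-length feature vectors."""
    return tuple(mean(column) for column in zip(*vectors))


def train(labeled):
    """Map each label to the centroid of its training vectors."""
    by_label = {}
    for vector, label in labeled:
        by_label.setdefault(label, []).append(vector)
    return {label: centroid(vectors) for label, vectors in by_label.items()}


def predict(model, vector):
    """Return the label whose centroid is nearest in Euclidean distance."""
    return min(model, key=lambda label: dist(model[label], vector))


# Invented (mean packet size in bytes, mean gap in ms) pairs; not real data.
training = [
    ((140.0, 60.0), "target"),
    ((150.0, 55.0), "target"),
    ((100.0, 90.0), "noise"),
    ((95.0, 100.0), "noise"),
]
model = train(training)
label = predict(model, (138.0, 62.0))  # falls nearest the "target" centroid
```

A real attack would need far richer features and far more training traces, but the structure is the same: label traffic by topic, fit a model, then score unseen traffic.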

Real-World Implications

In practical scenarios, an attacker monitoring a vast number of conversations could identify sensitive discussions with high precision. For instance, even when only about one in 10,000 monitored conversations concerns the target topic, the attacker could flag those conversations with 100% precision and a recall rate between 5% and 50%. In other words, the attacker would miss many sensitive conversations, but every conversation that was flagged would be correctly identified, which poses a significant privacy risk.
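The difference between precision and recall can be checked with a short calculation. The counts below are hypothetical, chosen only so that recall falls inside the 5% to 50% range quoted above.

```python
def precision(true_positives, false_positives):
    """Share of flagged conversations that really were on the target topic."""
    flagged = true_positives + false_positives
    return true_positives / flagged if flagged else 0.0


def recall(true_positives, false_negatives):
    """Share of target-topic conversations that the attacker flagged."""
    actual = true_positives + false_negatives
    return true_positives / actual if actual else 0.0


# Hypothetical counts: 100 target conversations hidden in a much larger stream.
target_conversations = 100
flagged_correctly = 20   # true positives
flagged_wrongly = 0      # false positives
missed = target_conversations - flagged_correctly  # false negatives

p = precision(flagged_correctly, flagged_wrongly)
r = recall(flagged_correctly, missed)
print(f"precision={p:.0%} recall={r:.0%}")  # prints "precision=100% recall=20%"
```

With no false positives, precision stays at 100% no matter how many target conversations are missed, so an attacker can act on every flag with confidence.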

Mitigation Efforts

In response to the Whisper Leak vulnerability, Microsoft worked with AI service providers, including OpenAI, Mistral, and xAI, as well as its own Azure platform, to implement countermeasures. OpenAI introduced an obfuscation field that adds random text to responses, masking token lengths and disrupting the patterns exploited by the attack. Similarly, Mistral added a parameter named p for randomization, and Azure adopted comparable strategies. These mitigations have significantly reduced the risk associated with Whisper Leak, rendering the attack largely ineffective.
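The padding idea can be illustrated with a minimal sketch. This is not any provider's actual implementation: the event layout, the `delta` key, the `max_pad` limit, and both helper functions are assumptions, and only the `obfuscation` field name comes from the description above.

```python
import json
import secrets
import string


def obfuscate_chunk(token_text, max_pad=32):
    """Wrap a streamed token in a JSON event with random-length filler."""
    pad_len = secrets.randbelow(max_pad + 1)  # 0..max_pad inclusive
    filler = "".join(secrets.choice(string.ascii_letters) for _ in range(pad_len))
    return json.dumps({"delta": token_text, "obfuscation": filler})


def strip_obfuscation(event):
    """Client side: keep the token text and discard the filler."""
    return json.loads(event)["delta"]


# The same token now produces events of varying size on the wire.
sizes = {len(obfuscate_chunk("hello")) for _ in range(200)}
text = strip_obfuscation(obfuscate_chunk("hello"))
```

Because the filler length is drawn independently for each chunk, the observed size no longer tracks the token length, while the client still recovers the original text.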

Recommendations for Users

To further protect against potential privacy breaches, users are advised to:

– Avoid discussing sensitive topics over public networks: Public Wi-Fi and other unsecured networks are more susceptible to monitoring.

– Utilize Virtual Private Networks (VPNs): VPNs encrypt internet traffic, adding a further layer of security.

– Opt for non-streaming communication modes: Non-streaming modes may reduce the metadata patterns exploited by side-channel attacks.

– Choose AI service providers that have implemented mitigations: Ensure that the platforms you use have addressed the Whisper Leak vulnerability.

Conclusion

The discovery of Whisper Leak underscores the evolving challenges in maintaining privacy within AI-driven communications. While current mitigations have addressed the immediate threat, the incident highlights the need for continuous vigilance and proactive measures to safeguard user privacy as AI technologies become increasingly embedded in our daily lives.