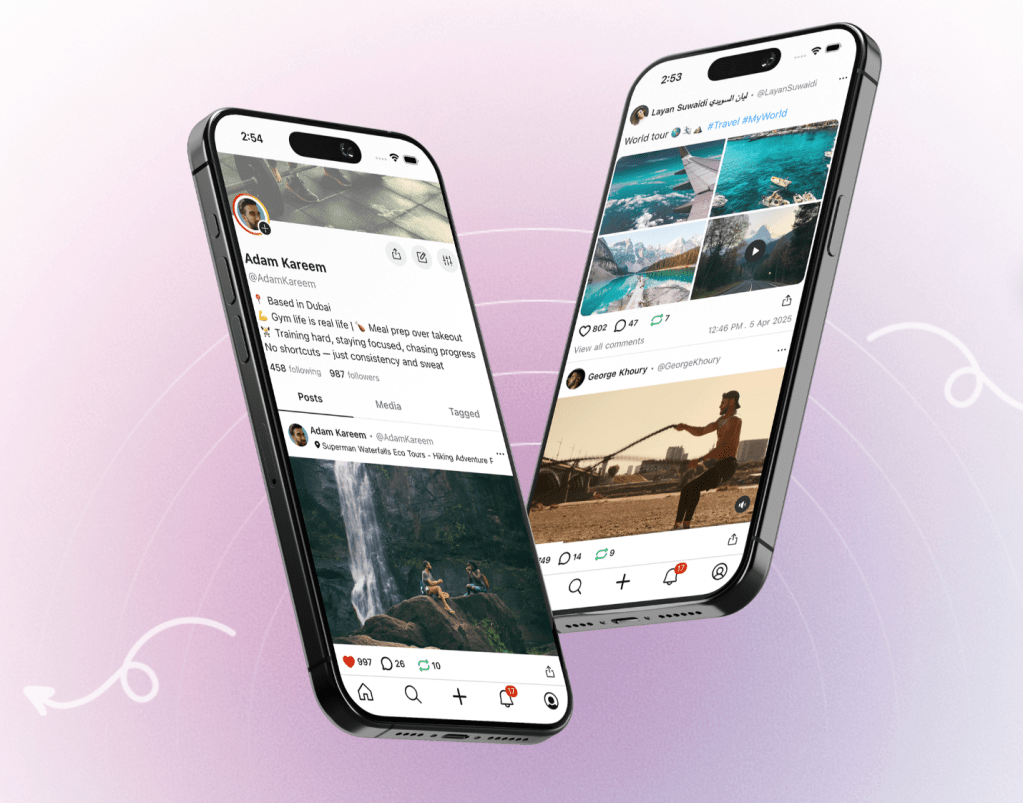

UpScrolled’s Rapid Growth Exposes Challenges in Moderating Hate Speech

UpScrolled, a fast-growing social media platform, is struggling to moderate hate speech after a rapid surge in user numbers. Its swift expansion shows how difficult content moderation becomes when a platform scales quickly.

The Rise of UpScrolled

Founded in 2025, UpScrolled positioned itself as a platform where every voice holds equal power. That pitch resonated with users, especially after TikTok’s ownership changes in the U.S., and UpScrolled’s user base grew substantially. By January 2026, the platform had more than 2.5 million users and had logged more than 4 million downloads on iOS and Android since June 2025.

Emerging Moderation Challenges

Amid its rapid growth, UpScrolled has faced criticism for its handling of hate speech and inappropriate content. Users have reported usernames and hashtags containing racial slurs and other offensive material. Investigations confirmed instances where usernames incorporated explicit slurs or phrases like “Glory to Hitler.” Harmful content, including text posts and multimedia glorifying extremist ideologies, was also found on the platform.

The Anti-Defamation League (ADL) highlighted these issues, noting that UpScrolled was becoming a hub for antisemitic and extremist content, including material from designated foreign terrorist organizations.

UpScrolled’s Response

In response to these concerns, UpScrolled acknowledged the presence of harmful content and emphasized its commitment to creating a respectful digital environment. The company announced plans to expand its content moderation team and upgrade its technological infrastructure to more effectively identify and remove inappropriate content.

Broader Implications for Social Media Platforms

UpScrolled’s challenges are not unique. Other platforms have faced similar issues:

– Meta’s Policy Changes: In January 2025, Meta announced an overhaul of its content moderation policies, including ending its third-party fact-checking program and lifting certain content restrictions. These changes sparked debates about the balance between free expression and the need to curb harmful content.

– X’s Legal Challenges: Formerly known as Twitter, X faced legal scrutiny in Germany for failing to remove illegal hate speech, highlighting the global challenges of content moderation.

– Gab’s Removal from App Stores: The social network Gab was removed from the Google Play Store in 2017 for violating hate speech policies, underscoring the consequences platforms face when they fail to moderate content effectively.

The Path Forward

The rapid growth of platforms like UpScrolled underscores the importance of robust content moderation strategies. Balancing the principles of free expression with the need to prevent the spread of hate speech and harmful content remains a complex challenge for social media companies. As UpScrolled continues to evolve, its approach to these issues will be closely watched by users and industry observers alike.