Artificial intelligence (AI) agents are becoming integral to business operations across many industries. They are deployed to improve efficiency and speed up innovation, but they sometimes run without proper oversight, giving rise to shadow AI agents: AI systems put into operation without formal approval or adequate monitoring. These agents pose significant security risks to the organizations that host them.

Understanding Shadow AI Agents

Shadow AI agents are autonomous software entities that run inside an organization’s infrastructure without explicit authorization or comprehensive management. They often appear when business units, eager for quick results, deploy AI solutions independently of the IT department. Because of this lack of coordination, the agents operate without a registered identity, a clear owner, or activity logs, which makes them effectively invisible to standard security controls.

The Risks Associated with Unmonitored AI Agents

The presence of unmonitored AI agents introduces several vulnerabilities:

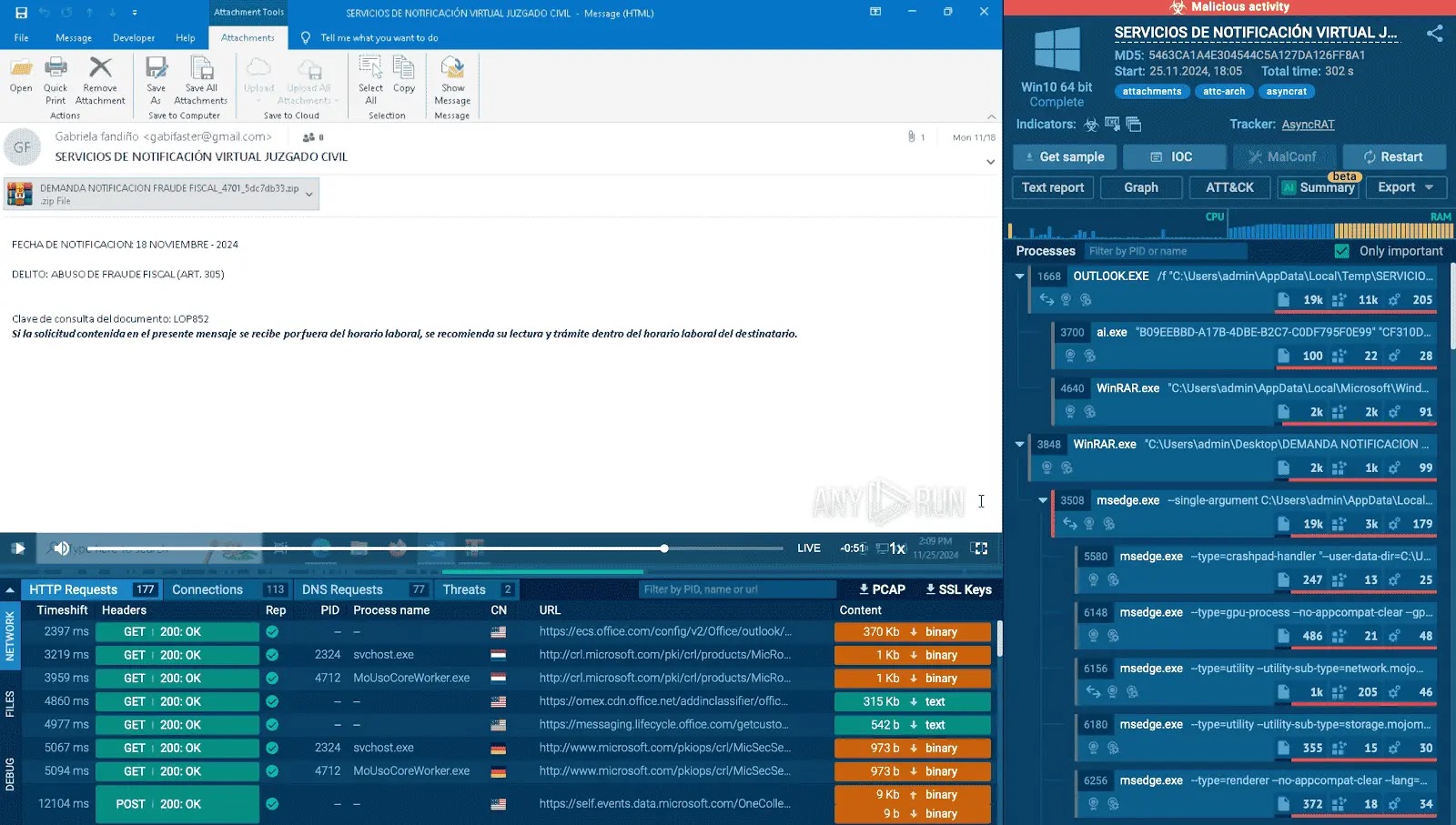

– Data Breaches: A compromised AI agent can read and exfiltrate sensitive information far faster than a human working manually, turning a single compromise into a large-scale breach.

– Privilege Escalation: Because no one reviews their permissions, these agents can acquire or escalate privileges autonomously, gaining unauthorized access to critical systems and data.

– Operational Disruption: Unregulated AI agents can interfere with standard business processes, causing disruptions and inefficiencies.

Traditional security frameworks are designed primarily to manage human users and may not address the distinct challenges posed by autonomous AI agents. As AI adoption grows, the proliferation of shadow AI agents amplifies these risks, forcing organizations to reevaluate their existing security strategies.

Real-World Implications and Case Studies

These risks are already materializing in practice. For instance, employees at a multinational electronics manufacturer pasted confidential data, including product source code, into public AI platforms such as ChatGPT without realizing the consequences. Depending on the provider’s data-retention and training policies, such inputs can become part of a model’s training data, potentially exposing proprietary information to unauthorized users.

More broadly, unsanctioned use of AI tools within organizations has led to data privacy issues and compliance violations. Employees who use AI applications without oversight can share sensitive information with third parties without realizing it, exposing the organization to serious security breaches.

Strategies for Identifying and Managing Shadow AI Agents

To mitigate the risks associated with shadow AI agents, organizations should adopt a multifaceted approach:

1. Comprehensive AI Inventory: Use discovery tools to identify every AI agent operating within the organization, including those deployed without formal approval, and record who owns each agent and what it can access.

2. Data Classification and Protection: Classify data by sensitivity, identify the critical data sets, and enforce strict access controls that keep unauthorized AI agents away from sensitive information.

3. Policy Development and Enforcement: Establish clear policies governing the deployment and use of AI agents, ensuring that all AI initiatives align with organizational security standards.

4. Continuous Monitoring: Implement systems to continuously monitor AI agent activities, promptly identifying and addressing any unauthorized or suspicious behavior.

5. Employee Education: Conduct regular training sessions to educate employees about the risks associated with unapproved AI deployments and the importance of adhering to established protocols.
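Steps 1, 2, and 4 can be combined into a simple reconciliation check: compare the agents that a discovery process actually observes against a registry of approved agents, and flag any approved agent that touches data above its clearance. The Python sketch below is illustrative only. The record types, the four-level sensitivity scale, the sample agent and data-set names, and the `review_inventory` function are all hypothetical and not drawn from any particular product; in practice the observed records might come from sources such as identity-provider logs or network telemetry.

```python
from dataclasses import dataclass

# Hypothetical sensitivity scale, lowest to highest.
SENSITIVITY = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}


@dataclass(frozen=True)
class ObservedAgent:
    """An AI agent seen by a (hypothetical) discovery process."""
    agent_id: str
    data_accessed: tuple[str, ...]  # data sets the agent was seen touching


@dataclass(frozen=True)
class ApprovedAgent:
    """An entry in a (hypothetical) registry of approved agents."""
    agent_id: str
    owner: str            # accountable team or person
    max_sensitivity: str  # highest classification the agent may read


def review_inventory(observed, registry, data_classes):
    """Return (agent_id, issue) findings for shadow or over-reaching agents."""
    approved = {a.agent_id: a for a in registry}
    findings = []
    for agent in observed:
        entry = approved.get(agent.agent_id)
        if entry is None:
            findings.append((agent.agent_id, "shadow agent: not in registry"))
            continue
        limit = SENSITIVITY[entry.max_sensitivity]
        for dataset in agent.data_accessed:
            # Unclassified data is treated as restricted (fail closed).
            level = SENSITIVITY[data_classes.get(dataset, "restricted")]
            if level > limit:
                findings.append((agent.agent_id, f"accessed {dataset} above clearance"))
    return findings


# Example inputs (all names are made up for illustration).
data_classes = {"crm_contacts": "confidential", "press_kit": "public"}
registry = [ApprovedAgent("support-bot", "customer-success", "internal")]
observed = [
    ObservedAgent("support-bot", ("press_kit", "crm_contacts")),
    ObservedAgent("sales-summarizer", ("crm_contacts",)),
]

for agent_id, issue in review_inventory(observed, registry, data_classes):
    print(agent_id, "->", issue)
# support-bot -> accessed crm_contacts above clearance
# sales-summarizer -> shadow agent: not in registry
```

Treating unclassified data as restricted is a deliberate design choice in this sketch: an agent reaching into data nobody has classified is itself worth a review.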

Expert Insights and Recommendations

Steve Toole, Principal Solutions Consultant at SailPoint, emphasizes the importance of assigning proper identities to AI agents, establishing clear accountability, and enforcing appropriate safeguards. By doing so, organizations can harness the benefits of AI innovation while minimizing associated risks.
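One way to act on this advice is to give every agent an identity record that names an accountable human owner and an explicit list of permitted actions, then deny anything not on that list and log every decision. The Python sketch below is a hypothetical illustration of that idea, not SailPoint’s method or any vendor’s API; `AgentIdentity`, `AgentAuthorizer`, the example owner address, and scope strings such as `read:invoices` are invented for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AgentIdentity:
    """A hypothetical identity record for one AI agent."""
    agent_id: str
    owner: str              # the human accountable for the agent
    scopes: frozenset[str]  # actions the agent is explicitly allowed to take


@dataclass
class AgentAuthorizer:
    """Deny-by-default authorization with an append-only audit trail."""
    identities: dict[str, AgentIdentity] = field(default_factory=dict)
    audit_log: list[dict] = field(default_factory=list)

    def register(self, identity: AgentIdentity) -> None:
        # Accountability: refuse to register an agent with no owner.
        if not identity.owner:
            raise ValueError("every agent needs an accountable owner")
        self.identities[identity.agent_id] = identity

    def authorize(self, agent_id: str, action: str) -> bool:
        identity = self.identities.get(agent_id)
        # Unknown agents and unlisted actions are both denied.
        allowed = identity is not None and action in identity.scopes
        # Every decision is logged, whether allowed or denied.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent_id,
            "owner": identity.owner if identity else None,
            "action": action,
            "allowed": allowed,
        })
        return allowed


authz = AgentAuthorizer()
authz.register(AgentIdentity("invoice-agent", "finance-ops@example.com",
                             frozenset({"read:invoices"})))
print(authz.authorize("invoice-agent", "read:invoices"))       # True
print(authz.authorize("invoice-agent", "write:payroll"))       # False
print(authz.authorize("unregistered-agent", "read:invoices"))  # False
```

Because unregistered agents are denied and still appear in the audit log with no owner, this pattern also surfaces shadow agents the moment they try to act.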

Proactive Measures: The Path Forward

Shadow AI agents are an ongoing challenge that requires immediate attention. Organizations must decide whether these agents will serve as valuable assets or become liabilities. Taking proactive steps now to identify, monitor, and govern AI agents is crucial to preventing security breaches and ensuring that AI initiatives contribute positively to business objectives.