State-Sponsored Hackers Exploit AI for Advanced Cyber Attacks

In a recent disclosure, Google’s Threat Intelligence Group (GTIG) reported that state-sponsored hacking groups are increasingly using artificial intelligence (AI) to enhance their cyber operations. Notably, the North Korea-linked group UNC2970 has been observed using Google’s generative AI model, Gemini, to conduct detailed reconnaissance on high-value targets.

UNC2970, a cluster that overlaps with activity tracked as Lazarus Group, Diamond Sleet, and Hidden Cobra, has a history of targeting the aerospace, defense, and energy sectors. Its operators often pose as corporate recruiters and lure victims with fictitious job offers, a tactic known as Operation Dream Job. Using Gemini, UNC2970 synthesizes open-source intelligence (OSINT) to profile major cybersecurity and defense companies, mapping specific technical roles and salary information. This blurs the line between legitimate professional research and malicious reconnaissance, helping the group build tailored phishing campaigns and identify vulnerable targets for initial compromise.

The exploitation of AI in cyber operations is not limited to UNC2970. Other state-backed actors have integrated AI tools into their workflows to expedite various phases of the cyber attack lifecycle:

– UNC6418 (Unattributed): Engages in targeted intelligence gathering, focusing on acquiring sensitive account credentials and email addresses.

– Temp.HEX or Mustang Panda (China): Compiles dossiers on specific individuals, including targets in Pakistan, and gathers operational data on separatist organizations across various countries.

– APT31 or Judgement Panda (China): Automates vulnerability analysis and generates targeted testing plans, posing as a security researcher when prompting the model.

– APT41 (China): Extracts information from open-source tool documentation and troubleshoots exploit code.

– UNC795 (China): Develops web shells and scanners for PHP web servers, conducts research, and troubleshoots code.

– APT42 (Iran): Facilitates reconnaissance and social engineering by crafting engaging personas, develops tools like a Python-based Google Maps scraper and a SIM card management system in Rust, and researches proof-of-concept exploits for vulnerabilities such as CVE-2025-8088.

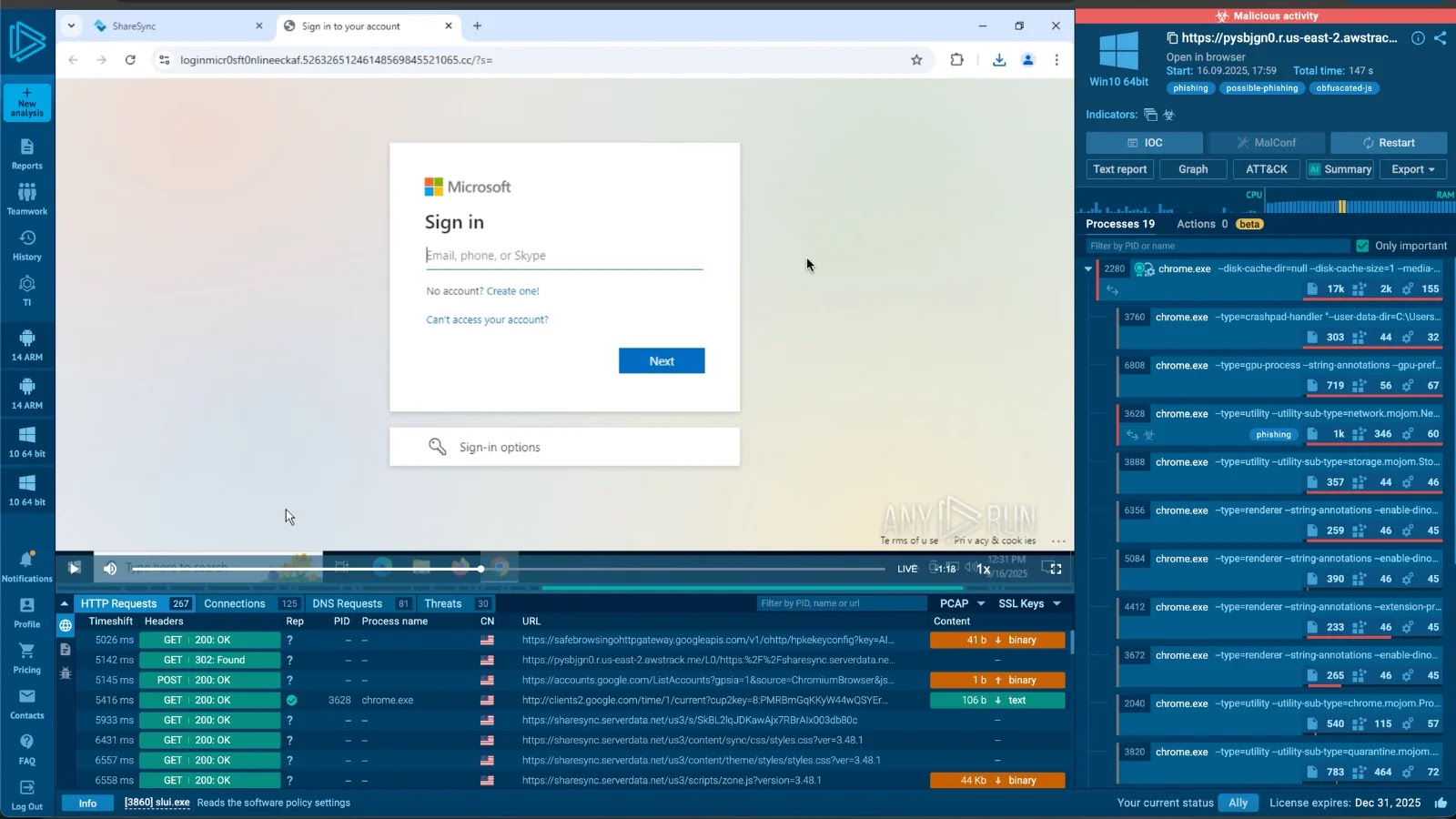

Google has also identified malware named HONESTCUE, which uses Gemini’s API to generate code for subsequent attack stages. HONESTCUE operates as a downloader and launcher framework: it sends prompts to Gemini’s API, receives C# source code in response, and then compiles and executes that code directly in memory. Because the generated code runs in memory, it leaves minimal traces on disk, which complicates detection.

Separately, an AI-generated phishing kit called COINBAIT, reportedly built with Lovable AI, impersonates cryptocurrency exchanges to harvest credentials. Some COINBAIT activity has been linked to UNC5356, a financially motivated threat cluster.

Furthermore, Google has observed model extraction attacks targeting Gemini. These attacks systematically query a model and use its responses to build a substitute model that mimics the target’s behavior. In one large-scale campaign, more than 100,000 prompts were issued in an attempt to replicate Gemini’s reasoning abilities across a range of tasks in non-English languages.
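To make the extraction idea concrete, here is a minimal toy sketch of the query, record, and imitate loop, assuming a tiny black-box classifier in place of a large language model. Everything in it is hypothetical and chosen only for illustration: `target_model` stands in for the victim (a secret linear rule on two features), `collect_queries` plays the extractor sending inputs and recording answers, and `train_substitute` fits a simple perceptron using those answers alone. Extracting an LLM’s reasoning would instead involve training a language model on collected prompt/response pairs; this sketch is not a reconstruction of the attacks Google observed.

```python
import random

# Toy illustration only: a hypothetical linear "victim" stands in for a
# black-box model. The extractor never reads its weights, only its outputs.


def target_model(x):
    """Hypothetical black-box victim. Secret rule: 2*x0 - x1 + 0.5 > 0."""
    return 1 if 2.0 * x[0] - 1.0 * x[1] + 0.5 > 0 else 0


def collect_queries(n, seed=0):
    """Step 1: send n random queries to the black box and record its answers."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        x = (rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0))
        pairs.append((x, target_model(x)))
    return pairs


def predict(params, x):
    """Linear decision rule used by the substitute model."""
    w0, w1, b = params
    return 1 if w0 * x[0] + w1 * x[1] + b > 0 else 0


def train_substitute(pairs, epochs=50, lr=0.1):
    """Step 2: fit a perceptron substitute using only (query, answer) pairs."""
    w0 = w1 = b = 0.0
    for _ in range(epochs):
        for x, y in pairs:
            err = y - predict((w0, w1, b), x)  # 0 if correct, +1 or -1 if wrong
            if err:
                w0 += lr * err * x[0]
                w1 += lr * err * x[1]
                b += lr * err
    return (w0, w1, b)


def agreement(params, n=2000, seed=1):
    """Step 3: fraction of fresh inputs on which substitute and target agree."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        x = (rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0))
        hits += predict(params, x) == target_model(x)
    return hits / n


if __name__ == "__main__":
    substitute = train_substitute(collect_queries(1000))
    print(f"substitute/target agreement: {agreement(substitute):.3f}")
```

Running the script prints the fraction of fresh inputs on which the substitute agrees with the target. In this toy setting, agreement tends to rise as more queries are collected, which is consistent with real extraction attempts relying on large query volumes like the 100,000-prompt campaign described above.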

The increasing use of AI by state-sponsored actors underscores the evolving landscape of cyber threats. Organizations must remain vigilant, adopting robust security measures and staying informed about emerging tactics to protect against sophisticated AI-driven cyber attacks.