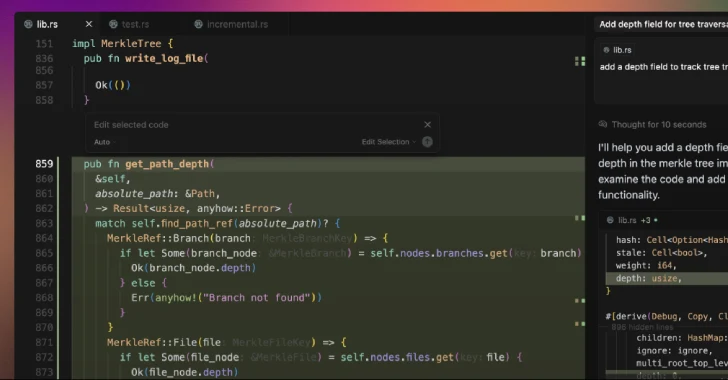

A significant security vulnerability has been identified in Cursor, an AI-powered code editor, which could enable the execution of arbitrary code when a developer opens a maliciously crafted repository. This flaw arises because the ‘Workspace Trust’ feature is disabled by default, allowing attackers to run unauthorized code on a user’s system with the same privileges as the user.

Oasis Security highlighted this issue, stating that with ‘Workspace Trust’ turned off, any task configured with `runOptions.runOn: "folderOpen"` in a Visual Studio Code-style `.vscode/tasks.json` file runs automatically as soon as a developer opens the project. A malicious `.vscode/tasks.json` can therefore turn the routine act of opening a folder into silent code execution in the user’s environment.

Cursor is an AI-enhanced fork of Visual Studio Code and inherits its ‘Workspace Trust’ feature, which is designed to let developers safely browse and edit code regardless of where it came from. Because the feature is disabled by default in Cursor, attackers can publish projects on platforms like GitHub that contain hidden ‘autorun’ instructions. When a user opens such a repository in Cursor, the Integrated Development Environment (IDE) runs the embedded task, and the malicious code with it.

Erez Schwartz, a researcher at Oasis Security, emphasized the potential risks, noting that this vulnerability could lead to the leakage of sensitive credentials, unauthorized file modifications, or even broader system compromises. This places Cursor users at a heightened risk of supply chain attacks.

To mitigate this threat, users are advised to:

- Enable the ‘Workspace Trust’ feature in Cursor.
- Use alternative code editors when opening untrusted repositories.
- Thoroughly audit repositories before accessing them with Cursor.
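As one way to approach the auditing step, the sketch below walks a cloned repository and reports any `.vscode/tasks.json` entry set to run on folder open. It is a minimal illustration rather than a complete scanner: the function names are hypothetical, and because `tasks.json` files may contain comments that the strict `json` module rejects, the script falls back to a plain text match in that case.

```python
import json
import re
from pathlib import Path

# Text fallback for files the strict JSON parser rejects (for example,
# tasks.json files that contain comments or trailing commas).
_RUN_ON_FOLDER_OPEN = re.compile(r'"runOn"\s*:\s*"folderOpen"')


def _tasks_with_autorun(node):
    """Yield every dict whose runOptions.runOn equals "folderOpen"."""
    if isinstance(node, dict):
        run_options = node.get("runOptions")
        if isinstance(run_options, dict) and run_options.get("runOn") == "folderOpen":
            yield node
        for value in node.values():
            yield from _tasks_with_autorun(value)
    elif isinstance(node, list):
        for item in node:
            yield from _tasks_with_autorun(item)


def find_autorun_tasks(repo_root):
    """Return (path, description) pairs for tasks that run on folder open."""
    findings = []
    for tasks_file in sorted(Path(repo_root).rglob("tasks.json")):
        # Only task files inside a .vscode directory are relevant here.
        if tasks_file.parent.name != ".vscode":
            continue
        text = tasks_file.read_text(encoding="utf-8", errors="replace")
        try:
            parsed = json.loads(text)
        except json.JSONDecodeError:
            if _RUN_ON_FOLDER_OPEN.search(text):
                findings.append((tasks_file, "unparsed file mentions runOn: folderOpen"))
            continue
        for task in _tasks_with_autorun(parsed):
            label = task.get("label", "<unlabeled>")
            command = task.get("command", "<no command>")
            findings.append((tasks_file, f"{label}: {command}"))
    return findings


if __name__ == "__main__":
    import sys

    for path, description in find_autorun_tasks(sys.argv[1] if len(sys.argv) > 1 else "."):
        print(f"{path}: {description}")
```

Running the script against a freshly cloned repository before opening it in the editor lists each suspicious task with its label and command. An empty result only means no folder-open task was found; it does not show that the repository is safe.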

This discovery comes amid a growing concern over prompt injections and jailbreaks, which have become stealthy and systemic threats affecting AI-powered coding and reasoning agents like Claude Code, Cline, K2 Think, and Windsurf. These vulnerabilities allow malicious actors to embed harmful instructions in subtle ways, tricking systems into executing unauthorized actions or leaking data from software development environments.

Software supply chain security firm Checkmarx recently reported that Anthropic’s new automated security reviews in Claude Code could inadvertently expose projects to security risks. By using prompt injections, attackers can instruct the system to overlook vulnerable code, leading developers to push insecure code past security reviews. In one instance, a carefully crafted comment convinced Claude that dangerous code was safe, demonstrating how easily the system could be misled.

Another concern is that the AI inspection process generates and executes test cases, which could result in malicious code running against production databases if Claude Code isn’t properly sandboxed.

Anthropic has also introduced a new file creation and editing feature in Claude, which runs in a sandboxed computing environment with limited internet access. The company warns that the feature still carries prompt injection risks: attackers can inconspicuously plant instructions in external files or websites, a technique known as indirect prompt injection, that trick the chatbot into downloading and running untrusted code or reading sensitive data from connected knowledge sources via the Model Context Protocol (MCP).

This means Claude can be manipulated into sending information from its context, such as prompts, projects, data via MCP, and Google integrations, to malicious third parties. To mitigate these risks, users are advised to monitor Claude’s activities and halt operations if unexpected data usage or access is observed.

Browser-based AI agents such as Claude for Chrome are also susceptible to prompt injection attacks. Anthropic says it has implemented several defenses that cut the attack success rate from 23.6% to 11.2%, a relative reduction of roughly 53% that still leaves about one in nine attempts succeeding. Malicious actors continue to develop new forms of prompt injection, however. By uncovering real-world examples of unsafe behavior and attack patterns not present in controlled tests, Anthropic aims to teach its models to recognize and account for these attacks, and to improve its safety classifiers so they catch threats the model itself misses.

Additionally, these tools have been found vulnerable to traditional security issues, expanding the attack surface with potential real-world impacts. For instance, a WebSocket authentication bypass in Claude Code IDE extensions (CVE-2025-52882, CVSS score: 8.8) could allow an attacker to connect to a victim’s unauthenticated local WebSocket server by simply luring them to visit a malicious website, enabling remote command execution.
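To illustrate the class of bug, the sketch below shows the kind of check a local WebSocket server can apply during the handshake so that a web page cannot simply connect to it. It is an assumption-laden illustration, not the fix shipped for CVE-2025-52882: the `X-Auth-Token` header name, the function names, and the policy of rejecting every request that carries an `Origin` header are all hypothetical choices made for this example.

```python
import hmac
import secrets


def new_session_token():
    """Create a random per-session secret to share with the legitimate local client."""
    return secrets.token_urlsafe(32)


def is_handshake_allowed(headers, expected_token):
    """Decide whether a WebSocket upgrade request may proceed.

    `headers` is assumed to be a plain dict whose keys are already
    normalized to the spellings used below (a simplification).
    """
    # Browsers attach an Origin header to WebSocket handshakes. In this
    # sketch the only legitimate client is a local, non-browser process,
    # so any request that carries an Origin is refused.
    if headers.get("Origin") is not None:
        return False

    # Require the shared secret, compared in constant time.
    supplied = headers.get("X-Auth-Token", "")
    return hmac.compare_digest(supplied.encode("utf-8"), expected_token.encode("utf-8"))


if __name__ == "__main__":
    token = new_session_token()
    print(is_handshake_allowed({"X-Auth-Token": token}, token))
    print(is_handshake_allowed({"Origin": "https://evil.example", "X-Auth-Token": token}, token))
```

The point of the design is that a server bound to localhost is still reachable from any page the user visits, so the server has to authenticate the connection itself and cannot rely on being local.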

Other vulnerabilities include an SQL injection flaw in the Postgres MCP server, allowing attackers to bypass read-only restrictions and execute arbitrary SQL statements; a path traversal vulnerability in Microsoft NLWeb, enabling remote attackers to read sensitive files; and an incorrect authorization vulnerability in Lovable (CVE-2025-48757, CVSS score: 9.3), allowing unauthenticated attackers to read or write to arbitrary database tables of generated sites.
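Path traversal, one of the flaw classes listed above, is usually prevented by resolving the requested path and confirming that it still sits inside the directory the server intends to expose. The sketch below shows that general technique. It is not code from Microsoft NLWeb, the helper name `resolve_inside` is hypothetical, and `Path.is_relative_to` requires Python 3.9 or later.

```python
from pathlib import Path


def resolve_inside(base_dir, requested):
    """Return the absolute path for `requested`, or raise if it escapes `base_dir`."""
    base = Path(base_dir).resolve()
    # resolve() collapses ".." segments and follows symlinks, so the
    # containment check runs against the real target. An absolute
    # `requested` value replaces `base` entirely and fails the check too.
    candidate = (base / requested).resolve()
    if not candidate.is_relative_to(base):
        raise PermissionError(f"path escapes base directory: {requested!r}")
    return candidate


if __name__ == "__main__":
    print(resolve_inside("static", "css/site.css"))
    try:
        resolve_inside("static", "../../etc/passwd")
    except PermissionError as exc:
        print("rejected:", exc)
```

Checking the resolved path, instead of searching the raw string for `..`, avoids bypasses that rely on encodings, absolute paths, or symlinks.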

Furthermore, open redirect, stored cross-site scripting (XSS), and sensitive data leakage vulnerabilities in Base44 could allow attackers to access victims’ apps and development workspaces, harvest API keys, inject malicious logic into user-generated applications, and exfiltrate data. A vulnerability in Ollama Desktop, due to incomplete cross-origin controls, could enable an attacker to stage a drive-by attack, where visiting a malicious website can reconfigure the application’s settings to intercept chats and alter responses using poisoned models.

Imperva, a cybersecurity firm, emphasized that as AI-driven development accelerates, the most pressing threats often stem from failures in classical security controls rather than exotic AI attacks. To protect the growing ecosystem of AI-powered coding platforms, security must be treated as a foundational element, not an afterthought.