OpenAI has released two open-weight language models, gpt-oss-120b and gpt-oss-20b, and made them available to Amazon Web Services (AWS) customers through Amazon Bedrock and Amazon SageMaker AI. This marks the first time OpenAI’s models have been offered within AWS’s ecosystem, a significant development for both the artificial intelligence (AI) and cloud computing sectors.

Introduction to OpenAI’s Open-Weight Models

OpenAI’s new models, gpt-oss-120b and gpt-oss-20b, are designed for complex reasoning tasks, including coding, mathematical problem-solving, and health-related questions. “Open-weight” means the models’ trained parameters (the weights) are publicly available, so developers can run the models themselves and fine-tune them for specific applications without access to the original training data. This stops short of fully open-source models, which typically also publish the training code, training data, and methodology.

The larger model, gpt-oss-120b, is built to run on a single 80 GB GPU, making it practical for enterprise applications. The smaller model, gpt-oss-20b, can run on consumer hardware with 16 GB of memory, putting it within reach of individual developers and smaller organizations. According to OpenAI, gpt-oss-120b achieves near-parity with its proprietary o4-mini on core reasoning benchmarks, while gpt-oss-20b delivers results similar to o3-mini. Both were trained on a text-only dataset with a focus on science, mathematics, and coding.

Integration with AWS Services

The collaboration marks a strategic shift for both companies. AWS has added the models to Amazon Bedrock and Amazon SageMaker AI, giving its customers a direct path to build and deploy generative AI applications on OpenAI models using the AWS tools and infrastructure they already know.

Amazon Bedrock is a managed service that lets developers choose from a selection of foundation models and build and host generative AI applications without managing the underlying servers. SageMaker AI serves teams that want more control, supporting the training, fine-tuning, and deployment of custom models. Adding OpenAI’s models to both services gives AWS customers more options for building AI solutions tailored to their needs.
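For readers wondering what calling these models on Bedrock might look like, here is a minimal sketch using boto3’s `bedrock-runtime` client and its Converse API. It assumes the model IDs `openai.gpt-oss-120b-1:0` and `openai.gpt-oss-20b-1:0` and the `us-west-2` Region; treat both as assumptions and confirm the exact identifiers and Regional availability in the Bedrock console. The helper names (`build_converse_request`, `extract_text`, `ask`) are hypothetical and only illustrate the request and response shapes.

```python
from typing import Any

# Assumed Bedrock model IDs; verify the exact identifiers in the Bedrock console.
GPT_OSS_120B = "openai.gpt-oss-120b-1:0"
GPT_OSS_20B = "openai.gpt-oss-20b-1:0"


def build_converse_request(prompt: str, model_id: str = GPT_OSS_120B,
                           max_tokens: int = 512,
                           temperature: float = 0.5) -> dict[str, Any]:
    """Build keyword arguments for the bedrock-runtime Converse API (hypothetical helper)."""
    return {
        "modelId": model_id,
        # Converse takes a list of messages; each message holds a list of content blocks.
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def extract_text(response: dict[str, Any]) -> str:
    """Join the plain-text blocks of a Converse response.

    Reasoning models may return extra blocks (for example "reasoningContent")
    alongside the answer; blocks without a top-level "text" key are skipped.
    """
    blocks = response["output"]["message"]["content"]
    return "".join(block["text"] for block in blocks if "text" in block)


def ask(prompt: str, region: str = "us-west-2", **kwargs: Any) -> str:
    """Send one prompt to a gpt-oss model on Bedrock (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helpers above work without boto3 installed

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_converse_request(prompt, **kwargs))
    return extract_text(response)
```

With credentials configured, `ask("Summarize open-weight models in one sentence.", model_id=GPT_OSS_20B)` would route the same request to the smaller model. Keeping request construction and response parsing in plain functions means they can be checked without calling AWS at all.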

Competitive Landscape and Strategic Implications

The partnership arrives amid intense competition in AI. AWS is best known in this space as a major investor in Anthropic and a leading host of its Claude models, and Anthropic is one of OpenAI’s main competitors. By adding OpenAI’s models, AWS strengthens its position in the AI market and offers customers a broader range of models.

Microsoft, OpenAI’s most significant cloud partner to date, has separately announced that it will offer versions of the new models optimized for Windows devices. Even so, the AWS deal shows OpenAI extending its reach beyond Microsoft, a move that could strengthen its negotiating position and bring its models to a wider audience.

Performance and Cost Efficiency

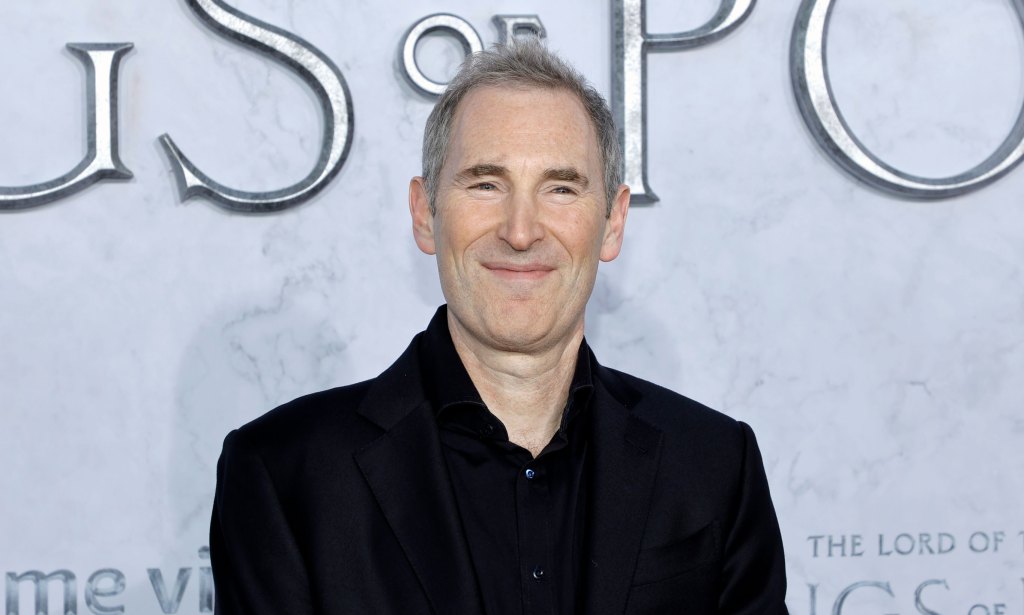

According to AWS CEO Matt Garman, the new OpenAI models on Bedrock are three times more price-performant than a comparable Google Gemini model and five times more price-performant than DeepSeek-R1. These are AWS’s own figures, and the company is counting on this cost advantage to attract customers looking for affordable AI solutions.

Conclusion

The availability of OpenAI’s models on AWS is a significant milestone for the AI and cloud computing industries. Developers and organizations gain another set of capable reasoning models that they can build on and deploy with infrastructure they already use. As the AI landscape continues to evolve, partnerships like this one are likely to shape how models reach customers.