OpenAI Introduces Aardvark: The GPT-5 Agent Revolutionizing Code Security

OpenAI has unveiled Aardvark, an autonomous agent powered by its latest large language model, GPT-5. Described as an agentic security researcher, Aardvark is designed to work the way a human expert would: scanning code, understanding it, and fixing the vulnerabilities it finds. The tool is currently in private beta and is meant to help developers and security teams find and fix security flaws at scale.

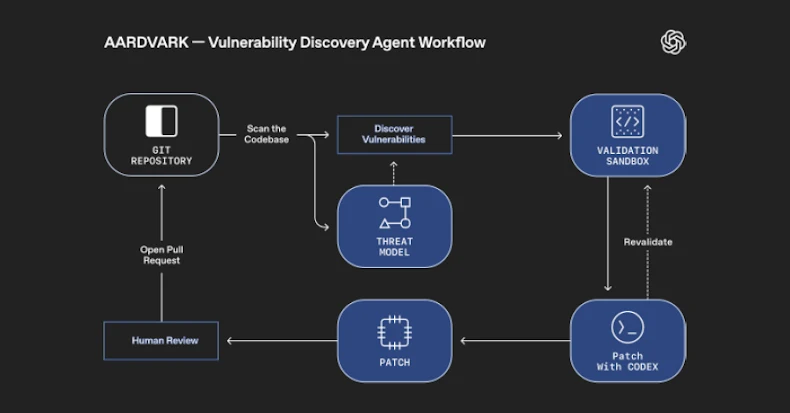

Aardvark integrates into the software development pipeline and continuously monitors source code repositories. It scrutinizes commits and other code changes to identify vulnerabilities, assess how they could be exploited, prioritize them by severity, and propose targeted patches, drawing on GPT-5’s reasoning capabilities to suggest the fixes.

The foundation of Aardvark is GPT-5, introduced by OpenAI in August 2025. This model is characterized by its enhanced reasoning abilities and a real-time routing system that selects the appropriate model based on the nature, complexity, and intent of the conversation. Leveraging these features, Aardvark analyzes a project’s codebase to develop a threat model that accurately reflects its security objectives and design. It examines the project’s history to uncover existing issues and monitors new code changes to detect emerging vulnerabilities.

Upon identifying a potential security flaw, Aardvark attempts to exploit it within a controlled, sandboxed environment to confirm that it is actually exploitable. It then uses OpenAI Codex, OpenAI’s coding agent, to generate a patch for human review. The process is intended to produce fixes that are both effective and aligned with the project’s coding standards.
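The detect, validate, and patch flow described above can be pictured with a minimal Python sketch. This is not Aardvark's implementation or API, which OpenAI has not published in this article. Every name here (`Finding`, `run_pipeline`, and the `toy_*` stand-ins) is hypothetical, and the string-matching "detector" is only a placeholder for the model-driven analysis.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional, Tuple


@dataclass
class Finding:
    """A suspected vulnerability tied to one commit (hypothetical structure)."""
    commit_id: str
    description: str
    validated: bool = False
    patch: Optional[str] = None


def run_pipeline(
    commits: Iterable[Tuple[str, str]],
    detect: Callable[[str], List[str]],
    validate: Callable[[Finding], bool],
    propose_patch: Callable[[Finding], str],
) -> List[Finding]:
    """Scan each commit, keep only findings confirmed by validation,
    and attach a proposed patch that a human would still review."""
    confirmed: List[Finding] = []
    for commit_id, diff in commits:
        for description in detect(diff):
            finding = Finding(commit_id, description)
            # Stand-in for attempting the exploit in a sandbox.
            finding.validated = validate(finding)
            if not finding.validated:
                continue  # unconfirmed findings are dropped in this sketch
            finding.patch = propose_patch(finding)
            confirmed.append(finding)
    return confirmed


# Toy stand-ins (assumptions for illustration only).
def toy_detect(diff: str) -> List[str]:
    # Flags SQL built with an f-string; a real agent would reason about the code.
    if 'execute(f"' in diff:
        return ["possible SQL injection via string formatting"]
    return []


def toy_validate(finding: Finding) -> bool:
    # Pretends the sandboxed exploit attempt succeeded for SQL injection findings.
    return "SQL injection" in finding.description


def toy_patch(finding: Finding) -> str:
    return "use a parameterized query instead of string formatting"


example_commits = [
    ("a1", 'cursor.execute(f"SELECT * FROM users WHERE id = {uid}")'),
    ("b2", 'cursor.execute("SELECT 1")'),
]
results = run_pipeline(example_commits, toy_detect, toy_validate, toy_patch)
for f in results:
    print(f.commit_id, "|", f.description, "|", f.patch)
```

Only findings that pass the validation step reach the patch stage, which mirrors the article's point that exploitability is confirmed before a fix is proposed for human review.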

OpenAI has deployed Aardvark across its internal codebases and with select external alpha partners. This deployment has led to the identification of at least 10 Common Vulnerabilities and Exposures (CVEs) in open-source projects, an early indication of how the agent performs on real-world code.

The introduction of Aardvark is part of a broader trend in the tech industry toward using AI agents for automated vulnerability detection and remediation. For instance, earlier this month, Google announced CodeMender, a tool designed to detect, patch, and rewrite vulnerable code to prevent future exploits. Google plans to work with maintainers of critical open-source projects to integrate CodeMender-generated patches and improve the security of those projects.

In this context, Aardvark, CodeMender, and other similar tools are emerging as essential instruments for continuous code analysis, exploit validation, and patch generation. These developments follow OpenAI’s release of the gpt-oss-safeguard models, which are fine-tuned for safety classification tasks.

OpenAI describes Aardvark as a defender-first model, an agentic security researcher that collaborates with teams to provide continuous protection as code evolves. By identifying vulnerabilities early, validating their real-world exploitability, and offering clear fixes, Aardvark aims to strengthen security without hindering innovation. OpenAI emphasizes its commitment to expanding access to security expertise through tools like Aardvark.