OpenAI’s Sora app has rapidly gained popularity by letting users turn text prompts into hyper-realistic videos. One of its standout features is the cameo function, which lets users insert their own likeness into AI-generated videos. Initially, once users granted permission for their cameo to be used, they had little control over how their image appeared, which raised concerns about misuse and other ethical problems.

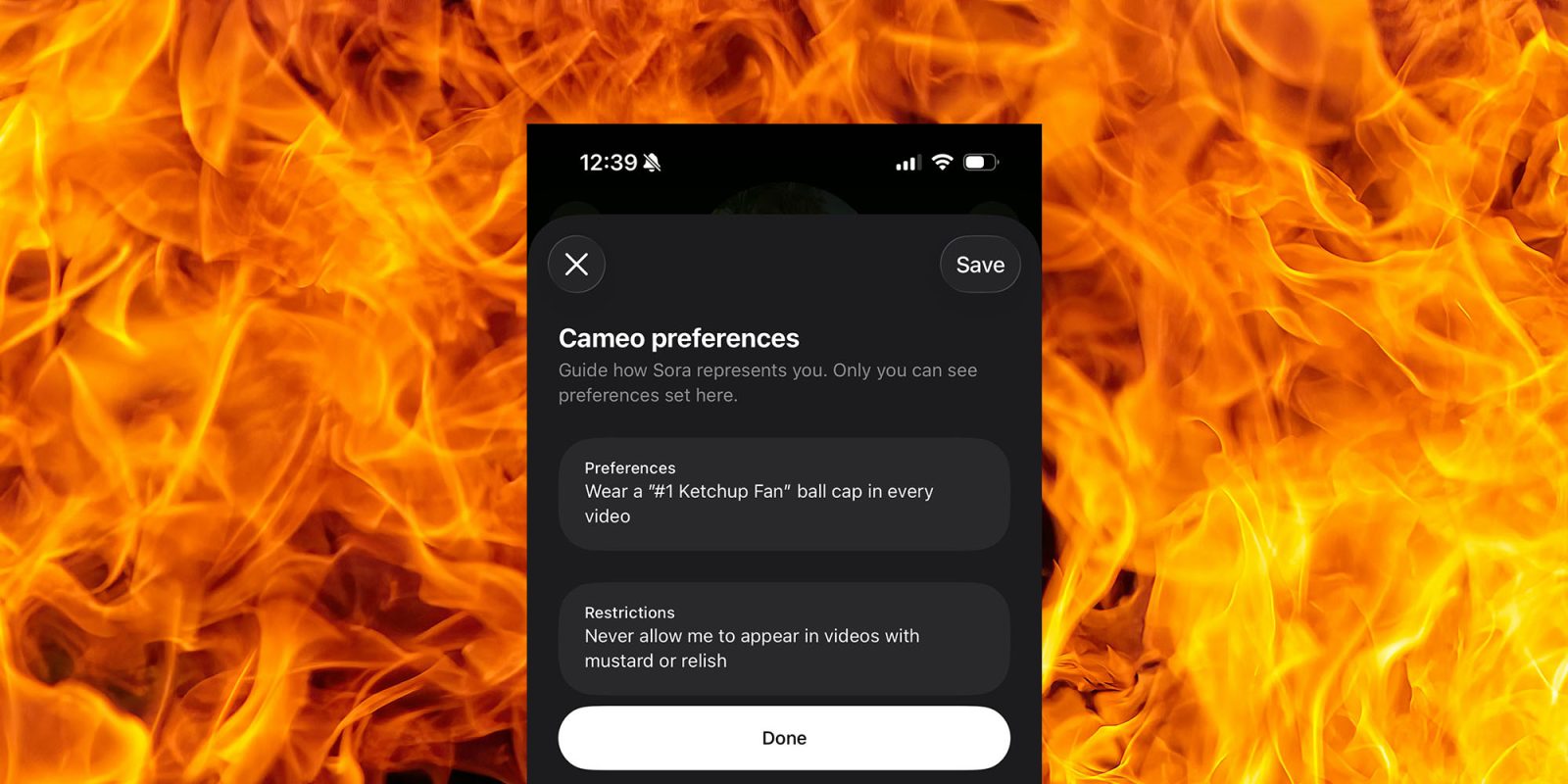

In response, OpenAI has introduced controls that give users more say over how their likenesses are used. Bill Peebles, head of Sora at OpenAI, announced that users can now restrict the kinds of content their cameo appears in. For example, users can tell Sora to keep their likeness out of videos involving political commentary, or to never have it say certain words. To set these restrictions, go to the edit cameo section, select cameo preferences, and then select restrictions.

These updates are part of OpenAI’s broader commitment to responsible AI use. The company has also taken steps to block videos featuring public figures without their consent, and every Sora-generated video carries both visible and invisible provenance signals: a visible watermark and embedded C2PA metadata, which make it possible to identify the video as AI-generated and trace its origin.

These controls are OpenAI’s attempt to address the ethical concerns surrounding deepfake technology. By letting users manage how their likenesses are used, OpenAI aims to create a safer and more respectful environment for AI-generated content.