Nvidia Unveils Rubin Architecture: A Leap Forward in AI Computing

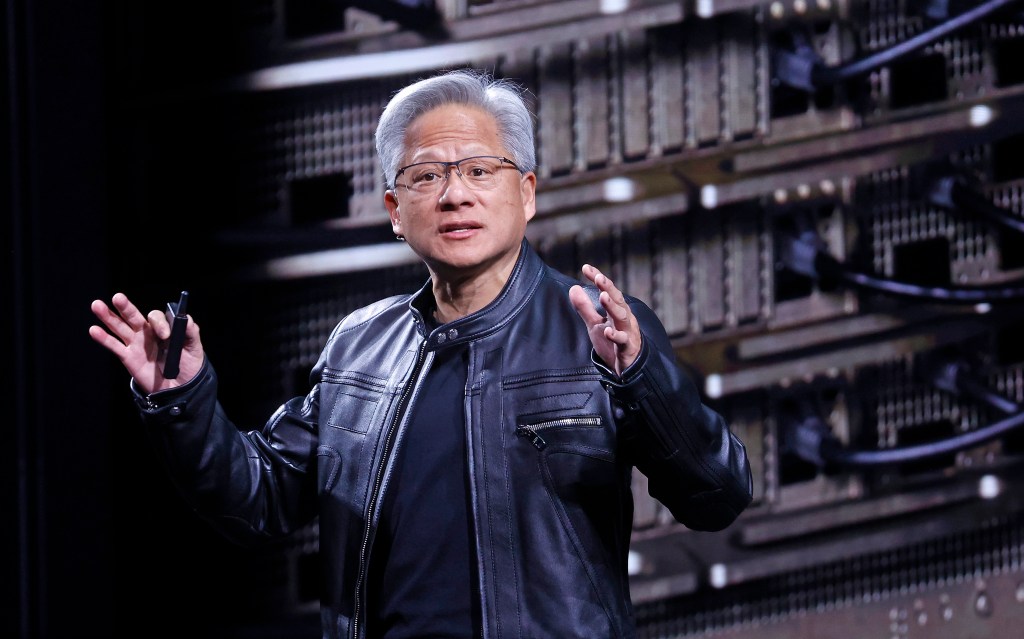

At the 2026 Consumer Electronics Show (CES), Nvidia’s CEO, Jensen Huang, introduced the company’s latest innovation in artificial intelligence hardware: the Rubin computing architecture. This new system is currently in production and is anticipated to scale up in the latter half of the year.

Huang emphasized the escalating computational demands of AI, stating, “Vera Rubin is designed to address this fundamental challenge that we have: The amount of computation necessary for AI is skyrocketing.” He confirmed that Vera Rubin is in full production.

The Rubin architecture, first announced in 2024, is the latest product of Nvidia’s continuous hardware development cycle, which has made the company a leader in the tech industry. Rubin is set to succeed the Blackwell architecture, which itself followed the Hopper and Lovelace architectures.

Leading AI labs and cloud providers, including Anthropic, OpenAI, and Amazon Web Services, have already committed to integrating Rubin chips into their systems. Additionally, Rubin will power HPE’s Blue Lion supercomputer and the forthcoming Doudna supercomputer at Lawrence Berkeley National Laboratory.

Named in honor of astronomer Vera Florence Cooper Rubin, the architecture comprises six interconnected chips. Central to this system is the Rubin GPU, complemented by enhancements in storage and connectivity through the BlueField and NVLink systems. A notable addition is the Vera CPU, engineered for agentic reasoning tasks.

Dion Harris, Nvidia’s senior director of AI infrastructure solutions, highlighted the architecture’s improved storage capabilities. He noted that modern AI workflows, such as agentic AI and extended tasks, place significant demands on KV cache systems. To address this, Nvidia has introduced an external storage tier that efficiently scales the storage pool, alleviating stress on the compute device.
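
To give a sense of why long-running tasks strain the KV cache, here is a rough sizing sketch. It is not Nvidia’s method; it uses the common back-of-the-envelope estimate for a decoder-only transformer (one key and one value tensor per layer, per token). The function name and the model dimensions below are hypothetical, chosen only for illustration.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   batch_size=1, bytes_per_value=2):
    """Approximate KV cache size in bytes for a decoder-only transformer.

    Assumes every layer stores one key vector and one value vector per
    token per KV head, at `bytes_per_value` bytes each (2 for 16-bit).
    """
    # The leading 2 accounts for storing both keys and values.
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_value)


GIB = 2 ** 30

# Hypothetical model shape (not taken from any Nvidia or vendor spec).
model = dict(num_layers=80, num_kv_heads=8, head_dim=128)

short_context = kv_cache_bytes(seq_len=8_192, **model)
long_context = kv_cache_bytes(seq_len=1_048_576, **model)

print(f"8K-token context: {short_context / GIB:.1f} GiB")
print(f"1M-token context: {long_context / GIB:.1f} GiB")
```

Under these assumed dimensions, the cache grows linearly with context length, from a few gibibytes for a short conversation to hundreds for a single very long session, which is the kind of pressure an external storage tier is meant to relieve.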

According to Nvidia’s internal tests, Rubin runs model-training tasks 3.5 times faster than its predecessor, Blackwell, and inference tasks five times faster, reaching up to 50 petaflops. The platform also delivers eight times more inference compute per watt.

The launch of Rubin comes amid a competitive race to develop AI infrastructure. AI laboratories and cloud providers are vying for Nvidia’s advanced chips and the necessary facilities to support them. In an earnings call in October 2025, Huang projected that the AI infrastructure sector would see investments ranging from $3 trillion to $4 trillion over the next five years.