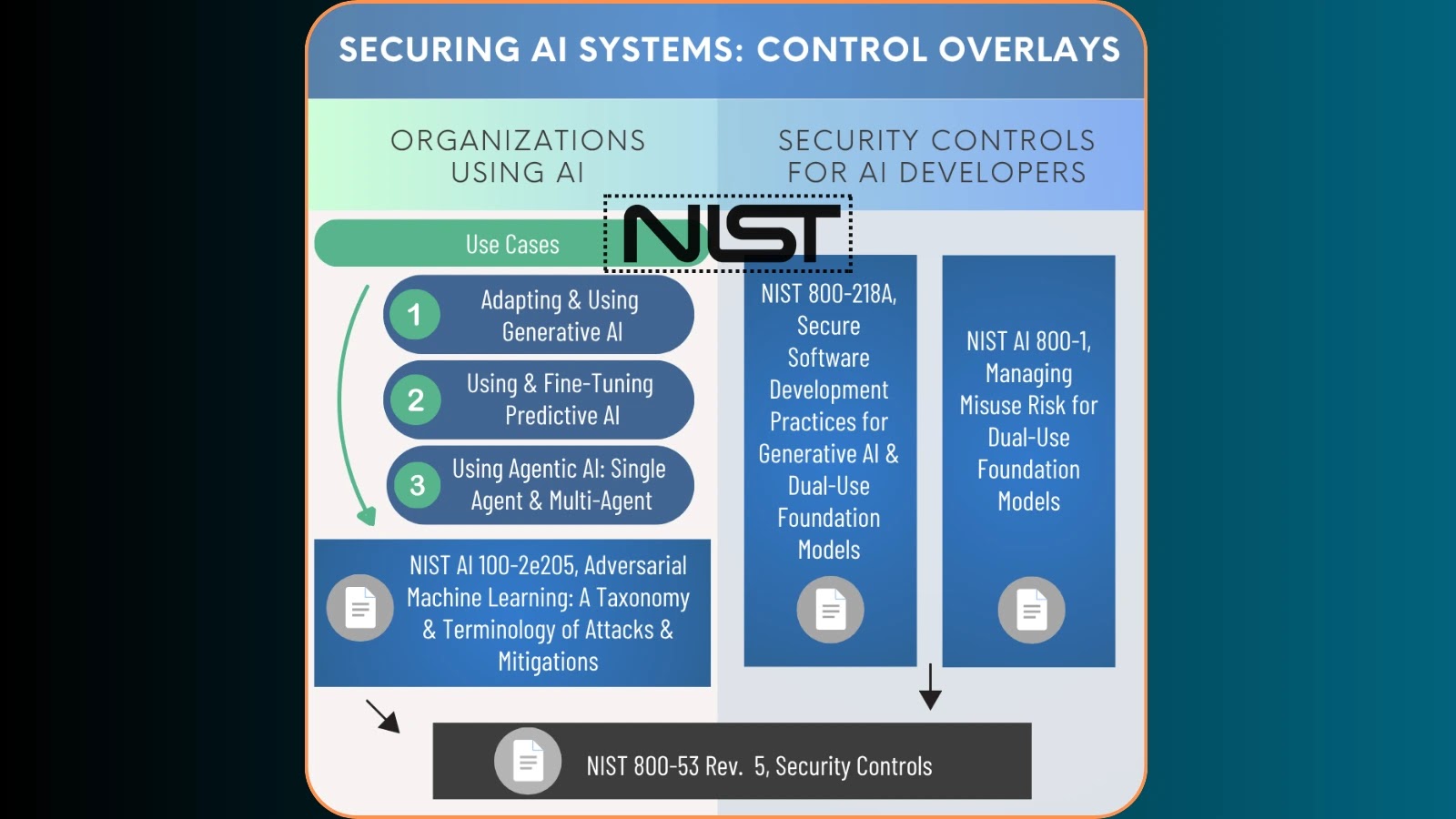

On August 14, 2025, the National Institute of Standards and Technology (NIST) released a concept paper describing proposed control overlays, built on NIST Special Publication (SP) 800-53, for securing artificial intelligence (AI) systems. The initiative is a step toward standardized, AI-specific security controls and responds to the growing need for structured risk management during both the development and the deployment of AI systems.

Comprehensive Framework for AI Security Controls

The concept paper lays the groundwork for managing cybersecurity risk across different kinds of AI implementations using the SP 800-53 control catalog. The proposed overlays target four use cases:

1. Generative AI Systems: These systems are designed to create content, such as text, images, or music, and require specific security measures to prevent misuse or unintended outputs.

2. Predictive AI Models: Used for forecasting and analysis, these models need safeguards against data manipulation that could produce inaccurate predictions.

3. Single-Agent AI Applications: These involve a single AI agent performing tasks autonomously, which requires controls that keep it operating within defined boundaries.

4. Multi-Agent AI Systems: Made up of multiple coordinated AI agents, these systems need mechanisms to secure inter-agent communication and collective decision-making.
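The concept paper does not specify how inter-agent communication should be secured. As one common approach, assuming agents share a symmetric key, each message can carry an HMAC tag so the recipient can reject altered or forged messages. The function names and envelope format below are hypothetical, and the hard-coded key is for illustration only.

```python
import hashlib
import hmac
import json

# Hypothetical shared key for illustration; real agents would fetch per-pair
# keys from a secrets manager and rotate them.
SHARED_KEY = b"example-key-do-not-use-in-production"


def _canonical(envelope: dict) -> bytes:
    # Sorted keys and fixed separators so sender and receiver hash identical bytes.
    return json.dumps(envelope, sort_keys=True, separators=(",", ":")).encode()


def sign_message(sender: str, recipient: str, payload: dict, key: bytes = SHARED_KEY) -> dict:
    """Wrap a payload in an envelope that carries an HMAC-SHA256 tag."""
    envelope = {"sender": sender, "recipient": recipient, "payload": payload}
    envelope["tag"] = hmac.new(key, _canonical(envelope), hashlib.sha256).hexdigest()
    return envelope


def verify_message(envelope: dict, expected_recipient: str, key: bytes = SHARED_KEY) -> bool:
    """Reject messages that were altered, signed with another key, or addressed elsewhere."""
    unsigned = {k: v for k, v in envelope.items() if k != "tag"}
    expected_tag = hmac.new(key, _canonical(unsigned), hashlib.sha256).hexdigest()
    # compare_digest runs in constant time, so timing does not leak tag bytes.
    if not hmac.compare_digest(expected_tag, envelope.get("tag", "")):
        return False
    return envelope.get("recipient") == expected_recipient


if __name__ == "__main__":
    msg = sign_message("planner", "executor", {"action": "summarize", "doc_id": 42})
    print(verify_message(msg, "executor"))  # True
    msg["payload"]["action"] = "delete"  # tampering in transit
    print(verify_message(msg, "executor"))  # False
```

A production design would also need a nonce or timestamp in each envelope to block replayed messages, which this sketch omits.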

The overlays tailor the existing SP 800-53 controls to address vulnerabilities specific to AI systems, including:

– Data Poisoning Attacks: Maliciously altering training data to corrupt the learning process.

– Model Inversion Techniques: Reconstructing sensitive training data or attributes from a model's outputs.

– Adversarial Machine Learning Threats: Crafting inputs designed to deceive AI models into making errors.
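To make the adversarial threat concrete, here is a toy illustration of the fast gradient sign method (FGSM) against a hypothetical three-feature linear classifier. For a linear model, the gradient of the score with respect to the input is simply the weight vector, so shifting each feature by a small epsilon according to the sign of its weight moves the score as far as possible for that budget. The weights and input values are made up.

```python
# Hypothetical toy model: score = w . x + b, and the model predicts 1 when score > 0.
WEIGHTS = [0.8, -0.5, 0.3]
BIAS = -0.1


def score(x):
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def predict(x):
    return 1 if score(x) > 0 else 0


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_linear(x, epsilon):
    """Shift each feature by epsilon in the direction that moves the score toward the other class.

    For a linear model d(score)/dx equals WEIGHTS, so the FGSM step is
    epsilon * sign(WEIGHTS), taken against the current prediction.
    """
    direction = -1 if predict(x) == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(WEIGHTS, x)]


if __name__ == "__main__":
    x = [0.5, 0.2, 0.1]
    x_adv = fgsm_linear(x, epsilon=0.2)
    print(predict(x), predict(x_adv))  # 1 0
```

Attacks on neural networks apply the same idea using gradients computed by automatic differentiation; the linear case just makes the arithmetic visible.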

The framework covers technical components such as AI model validation procedures, training data integrity controls, and algorithmic transparency requirements. Organizations adopting the overlays will need to continuously monitor AI system behavior, enforce access controls on AI development environments, and keep comprehensive audit trails for model training and deployment.
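One way to make an audit trail for model training and deployment tamper-evident is a hash chain, in which each entry stores the SHA-256 hash of the previous entry. The sketch below assumes JSON-serializable event details; the class and event names are hypothetical, not taken from the concept paper.

```python
import hashlib
import json
import time


class AuditTrail:
    """Append-only log in which each entry commits to the hash of the entry before it."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(record: dict) -> str:
        return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()

    def append(self, event: str, **details) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {"ts": time.time(), "event": event, "details": details, "prev": prev}
        record["hash"] = self._digest(record)  # hash covers every field except itself
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every hash and check that each entry links to its predecessor."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    trail = AuditTrail()
    trail.append("training_started", model="demo-model", dataset="train-2025-08.csv")
    trail.append("model_deployed", model="demo-model", approved_by="ml-lead")
    print(trail.verify())  # True
    trail.entries[0]["details"]["dataset"] = "poisoned.csv"  # simulate tampering
    print(trail.verify())  # False
```

A hash chain only detects edits to existing entries; it does not notice dropped trailing entries, and anyone able to rewrite the whole log could recompute every hash. Real deployments typically also copy the latest hash to separate, write-once storage.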

Emphasis on Governance and Collaboration

The overlays also stress the importance of clear governance structures for AI risk management. This includes regular security assessments and incident response procedures tailored to AI-related security events. By adopting these governance practices, organizations can help ensure that their AI systems are not only effective but also secure and compliant with established standards.

To gather stakeholder input and ongoing feedback, NIST runs the Control Overlays for Securing AI Systems (COSAIS) project along with a dedicated Slack channel (#NIST-Overlays-Securing-AI). Through facilitated discussions with NIST principal investigators, cybersecurity professionals, AI developers, and risk management specialists can contribute directly to the development of the overlays.

NIST is encouraging industry stakeholders to share insights into the practical challenges of securing AI systems in production environments. This collaboration is intended to keep the final overlays grounded in real-world security requirements while staying aligned with established NIST cybersecurity standards and enterprise risk management practices.

Addressing Unique AI Vulnerabilities

AI systems present distinct challenges that traditional cybersecurity measures may not fully address. For instance, because models are retrained or fine-tuned as new data arrives, their attack surface can shift over time. The proposed overlays aim to mitigate these risks with controls tailored to the AI context.

One such control is robust model validation: confirming that AI models perform as intended, without unintended biases or exploitable weaknesses. This includes rigorous testing against adversarial inputs and continuous monitoring so that anomalies in AI behavior are detected and addressed.
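Continuous monitoring can start with something as simple as tracking a per-batch behavior metric (for example, average model confidence or refusal rate) and flagging values far outside a rolling baseline. The class below is a hypothetical z-score monitor; the window size, threshold, and choice of metric are assumptions to tune for each system.

```python
import statistics
from collections import deque


class BehaviorMonitor:
    """Flag observations that fall far outside a rolling baseline of recent values."""

    def __init__(self, window: int = 50, threshold: float = 3.0, min_history: int = 10):
        self.history = deque(maxlen=window)  # oldest values drop off automatically
        self.threshold = threshold           # z-score above which a value is flagged
        self.min_history = min_history       # no verdicts until the baseline has this many points

    def observe(self, value: float) -> bool:
        """Return True if value is anomalous relative to recent history."""
        anomalous = False
        if len(self.history) >= self.min_history:
            mean = statistics.fmean(self.history)
            stdev = statistics.pstdev(self.history)
            if stdev == 0:
                anomalous = value != mean  # flat baseline: any change is unusual
            else:
                anomalous = abs(value - mean) / stdev > self.threshold
        # Only normal values update the baseline, so a burst of anomalies
        # cannot redefine what "normal" looks like.
        if not anomalous:
            self.history.append(value)
        return anomalous


if __name__ == "__main__":
    monitor = BehaviorMonitor()
    for v in [0.90, 0.91, 0.89, 0.92, 0.90, 0.91, 0.90, 0.89, 0.91, 0.90]:
        monitor.observe(v)  # warm-up: builds the baseline
    print(monitor.observe(0.905))  # False
    print(monitor.observe(0.40))   # True
```

Keeping flagged values out of the baseline has a cost: a legitimate shift in behavior will keep alerting until someone reviews it and resets the baseline.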

Another critical aspect is the integrity of training data. Because AI models learn from their data, corrupted or tampered training data directly degrades model behavior. The overlays propose measures such as data provenance tracking and validation checks to prevent data poisoning and keep training datasets trustworthy.
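Data provenance tracking can be approximated with a manifest that records a dataset's source and a SHA-256 hash of every file, checked again before each training run. The functions and the `source` label below are hypothetical illustrations, not controls defined in the concept paper.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_file(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path, source: str) -> dict:
    """Record where the dataset came from plus a hash of every file under data_dir."""
    files = sorted(p for p in data_dir.rglob("*") if p.is_file())
    return {
        "source": source,
        "files": {p.relative_to(data_dir).as_posix(): sha256_file(p) for p in files},
    }


def verify_manifest(data_dir: Path, manifest: dict) -> list:
    """Return a list of problems; an empty list means the data matches the manifest."""
    current = build_manifest(data_dir, manifest["source"])["files"]
    expected = manifest["files"]
    problems = [f"missing: {n}" for n in sorted(expected.keys() - current.keys())]
    problems += [f"unexpected: {n}" for n in sorted(current.keys() - expected.keys())]
    problems += [
        f"modified: {n}"
        for n in sorted(expected.keys() & current.keys())
        if expected[n] != current[n]
    ]
    return problems


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "train.csv").write_text("x,label\n1,0\n2,1\n")
        manifest = build_manifest(root, source="hypothetical-export-2025-08")
        print(verify_manifest(root, manifest))  # []
        (root / "train.csv").write_text("x,label\n1,1\n2,1\n")  # one label flipped
        print(verify_manifest(root, manifest))  # ['modified: train.csv']
```

Hashes detect changes made after the manifest was built, not poisoning already present at the source, and the manifest itself should be signed or stored somewhere the training pipeline cannot overwrite.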

Enhancing Transparency and Accountability

Transparency in AI systems is vital for building trust and ensuring accountability. The control overlays advocate for clear documentation of AI decision-making processes and the factors influencing those decisions. This transparency allows stakeholders to understand how AI systems operate and provides a basis for auditing and compliance checks.

Moreover, the overlays recommend establishing clear roles and responsibilities within organizations for AI risk management. This includes defining who is accountable for various aspects of AI security, from development to deployment and ongoing monitoring.

Implementing the Control Overlays

For organizations looking to implement these control overlays, a structured approach is essential. The following steps can guide the implementation process:

1. Assessment: Evaluate existing AI systems and identify areas where the proposed controls can be integrated.

2. Planning: Develop a roadmap for implementing the controls, considering resource allocation and timelines.

3. Implementation: Integrate the controls into AI development and deployment processes, ensuring that they are tailored to the specific context of each AI system.

4. Monitoring: Establish continuous monitoring mechanisms to detect and respond to security incidents promptly.

5. Review and Improvement: Regularly review the effectiveness of the controls and make necessary adjustments to address emerging threats and vulnerabilities.
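For the assessment step, a simple inventory can record which candidate controls each AI system already implements and list the gaps. The control identifiers below are real SP 800-53 Rev. 5 controls, but this particular selection and the AI-related notes attached to them are illustrative assumptions; the controls the final overlays select or tailor may differ. The system names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Illustrative selection of SP 800-53 Rev. 5 controls, annotated with the
# AI concern each might cover here. Not the overlay's official control set.
CANDIDATE_CONTROLS = {
    "AC-6": "Least Privilege (access to AI development environments)",
    "AU-2": "Event Logging (model training and deployment events)",
    "CA-7": "Continuous Monitoring (AI system behavior)",
    "IR-4": "Incident Handling (AI-related security events)",
    "RA-3": "Risk Assessment (periodic AI security assessments)",
    "SI-7": "Software, Firmware, and Information Integrity (training data and models)",
}


@dataclass
class AISystem:
    name: str
    use_case: str  # e.g. "generative", "predictive", "single-agent", "multi-agent"
    implemented: set[str] = field(default_factory=set)


def gap_report(systems: list[AISystem]) -> dict[str, list[str]]:
    """Map each system to the candidate controls it has not yet implemented."""
    return {s.name: sorted(set(CANDIDATE_CONTROLS) - s.implemented) for s in systems}


if __name__ == "__main__":
    systems = [
        AISystem("support-chatbot", "generative", {"AC-6", "AU-2", "IR-4"}),
        AISystem("demand-forecast", "predictive", {"AC-6", "AU-2", "CA-7", "RA-3", "SI-7"}),
    ]
    for name, gaps in gap_report(systems).items():
        print(name, "->", ", ".join(gaps) or "no gaps")
    # support-chatbot -> CA-7, RA-3, SI-7
    # demand-forecast -> IR-4
```

Rerunning the same report after each review cycle gives a rough measure of progress for the review and improvement step.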

Conclusion

NIST's proposed control overlays for AI systems are an important step toward strengthening the cybersecurity posture of AI applications. By addressing the challenges specific to AI technologies, they give organizations a structured framework for managing risk. Through collaboration and adherence to the final guidance, stakeholders can support the development and deployment of secure and trustworthy AI systems.