Insecure AI Apps in the App Store: A Growing Threat to User Data Security

The rapid proliferation of artificial intelligence (AI) applications has revolutionized the digital landscape, offering users unprecedented capabilities. However, this surge has also introduced significant security vulnerabilities, particularly within the Apple App Store. Recent investigations have uncovered that numerous AI apps are inadequately constructed, leading to extensive user data leaks and raising serious privacy concerns.

The Emergence of AI Applications and Associated Risks

The advent of AI has spurred a gold rush in app development, with developers eager to capitalize on the technology’s potential. This enthusiasm, however, has often resulted in the release of poorly designed applications that prioritize rapid deployment over robust security measures. Consequently, millions of users have unwittingly exposed their personal information to potential exploitation.

Firehound: Exposing Data Leaks in AI Apps

Security firm CovertLabs has launched Firehound, a public repository aimed at identifying and cataloging apps that fail to secure user data adequately. At the time of writing, Firehound lists 198 iOS applications, many of which are AI-based services. These apps have been found to leak vast amounts of user data, underscoring the critical need for stringent security protocols in app development.

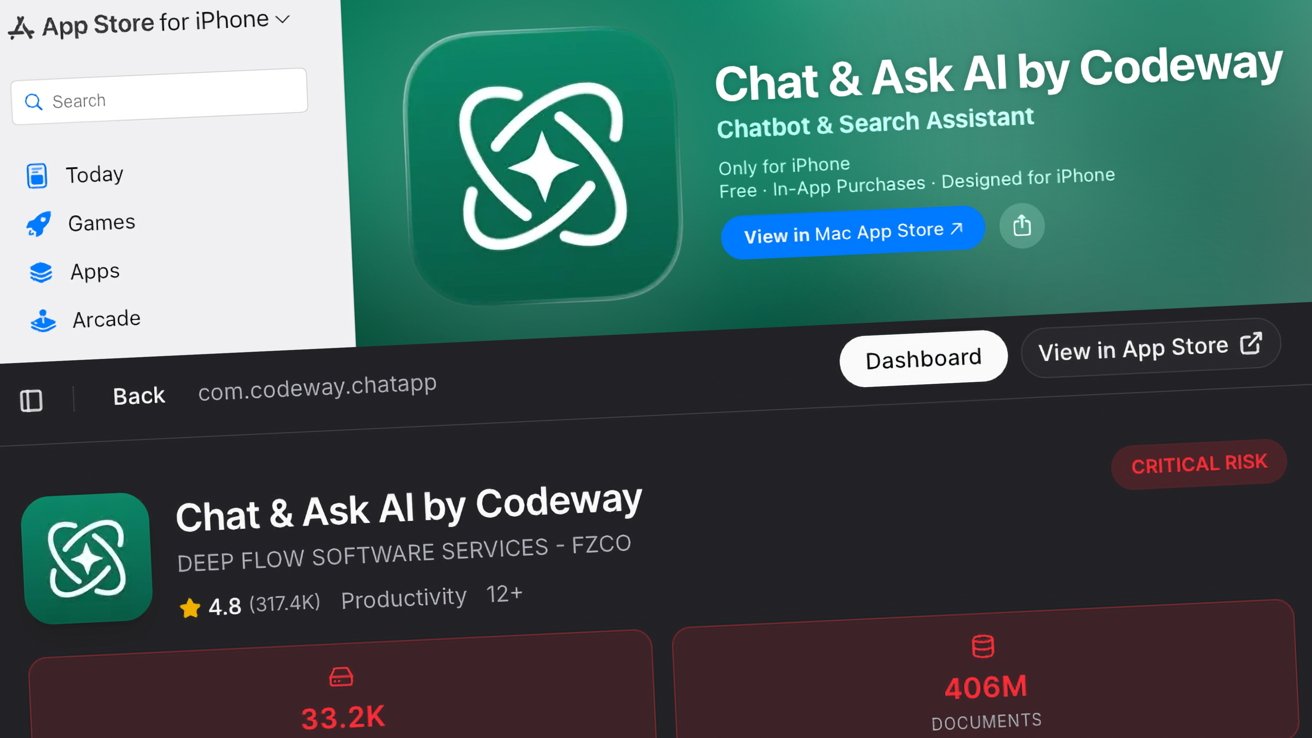

Case Study: Chat & Ask AI by Codeway

Among the most egregious offenders is the app Chat & Ask AI by Codeway, which has exposed over 406 million files and records. Security researcher Harris0n revealed that this includes the complete chat histories of more than 18 million users, totaling 380 million messages. Given the sensitive nature of interactions with AI chatbots, such data leaks pose significant privacy risks. Harris0n emphasized the gravity of the situation, stating, “The developers need to be held accountable for this level of negligence.”

Responsible Disclosure and Developer Accountability

Firehound provides detailed analyses of the data exposed by these apps, including structures related to chat messages and user details. While the repository openly displays these structures, access to the actual files is restricted. Users must register to view the data, and access requests are manually reviewed, with priority given to law enforcement and security professionals.

Upon visiting the repository, developers are greeted with a Responsible Disclosure notice, urging them to contact the Firehound team. The notice assures that upon contact, the app’s listing will be removed, and guidance will be provided to rectify the security issues.

The Broader Implications of AI Trust Issues

The revelations from Firehound serve as a stark reminder that not all apps are created equal. A promising application can harbor underlying security flaws, transforming it into a hazard for its users. This is particularly concerning in an era where users increasingly trust AI services with sensitive information.

In December, this trust was exploited in a new form of Atomic macOS Stealer (AMOS) attack. Attackers used search result ads for queries about clearing disk space in macOS, directing victims to shared chatbot conversations on platforms like ChatGPT and Grok. These chats guided users through steps that ultimately infected their systems and compromised their data.

While the Firehound repository doesn’t signify a failure of AI technology itself, it highlights the necessity for users to remain vigilant. Poorly coded apps with lax security policies can put user data at significant risk. The presence of AI in an app’s title should not be taken as a guarantee of its trustworthiness.

DeepSeek: A Case of Unencrypted Data Transmission

Another alarming instance involves the AI app DeepSeek, which was found to transmit unencrypted user data to Chinese-owned servers. Despite its popularity, DeepSeek’s iOS app exhibited multiple security and privacy issues, including:

1. Transmission of sensitive data without encryption.

2. Insecure storage of user data.

3. Collection of extensive user and device information.

4. Transmission of user data to servers governed by Chinese laws, raising concerns about government access.

Security firm NowSecure highlighted that DeepSeek’s use of deprecated encryption methods and hard-coded symmetric keys compromised user data security. Additionally, the app disabled Apple’s App Transport Security (ATS), the iOS feature that requires network connections to use HTTPS by default, further exacerbating the risk.
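
To make the App Transport Security point concrete, here is a minimal Python sketch of how a reviewer might flag ATS exceptions in an app's Info.plist. It is illustrative only and does not describe NowSecure's tooling or DeepSeek's actual configuration. The function name `ats_findings` and the sample plist are hypothetical. The keys it inspects (`NSAppTransportSecurity`, `NSAllowsArbitraryLoads`, `NSExceptionDomains`, `NSExceptionAllowsInsecureHTTPLoads`) are Apple's documented Info.plist keys.

```python
import plistlib


def ats_findings(info_plist_bytes):
    """Return warnings about ATS exceptions declared in an Info.plist.

    Hypothetical helper for illustration; it only inspects declared
    settings and says nothing about what the app does at runtime.
    """
    info = plistlib.loads(info_plist_bytes)  # accepts XML or binary plists
    ats = info.get("NSAppTransportSecurity", {})
    findings = []

    # A global opt-out: plain HTTP connections are allowed for all domains.
    if ats.get("NSAllowsArbitraryLoads", False):
        findings.append("ATS disabled globally (NSAllowsArbitraryLoads is true)")

    # Per-domain exceptions that permit insecure HTTP loads.
    for domain, cfg in ats.get("NSExceptionDomains", {}).items():
        if cfg.get("NSExceptionAllowsInsecureHTTPLoads", False):
            findings.append("Insecure HTTP allowed for " + domain)

    return findings


# Assumed sample input: a plist that switches ATS off for every connection.
SAMPLE = plistlib.dumps({"NSAppTransportSecurity": {"NSAllowsArbitraryLoads": True}})

for warning in ats_findings(SAMPLE):
    print(warning)
```

A check like this only surfaces declared exceptions. Issues such as hard-coded keys or weak ciphers would need separate analysis of the app binary and its network traffic.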

Apple’s Response to Data Privacy Violations

Apple has a history of removing applications that violate user privacy. In 2020, the company removed several apps owned by Sensor Tower after discovering they were secretly collecting user data through root certificates. These apps, including Adblock Focus and Luna VPN, failed to disclose their association with Sensor Tower and their data collection practices.

Similarly, in 2015, Apple removed numerous apps utilizing a third-party advertising SDK developed by Chinese firm Youmi. These apps were found to be collecting user information, such as email addresses and device identifiers, without consent.

The Need for Enhanced App Store Oversight

The recurring instances of data breaches and privacy violations underscore the need for enhanced oversight within the App Store. While Apple has implemented measures to enforce privacy protocols, the persistence of such issues indicates that more stringent vetting processes are necessary.

Users are advised to exercise caution when downloading AI applications, especially those from lesser-known developers. It’s crucial to research an app’s security practices and user reviews before installation. Additionally, users should be wary of granting extensive permissions to apps without understanding the implications.

Conclusion

The rise of AI applications offers immense potential but also introduces significant security challenges. The exposure of user data through poorly constructed apps highlights the urgent need for developers to prioritize robust security measures. Simultaneously, platforms like the Apple App Store must enhance their oversight to protect users from potential data breaches. As AI continues to evolve, maintaining user trust through stringent security practices will be paramount.