Hackers Exploit AI Assistants Grok and Copilot for Stealthy Malware Communication

Cybersecurity researchers have identified an attack technique that repurposes mainstream AI assistants, specifically xAI’s Grok and Microsoft’s Copilot, as covert command-and-control (C2) relays. The method lets attackers tunnel malicious traffic through platforms that enterprise networks typically trust and permit by default, thereby evading conventional detection mechanisms.

The Emergence of AI as C2 Proxies

Dubbed “AI as a C2 proxy,” the technique was uncovered by Check Point Research (CPR). It exploits the web-browsing and URL-fetching capabilities built into both Grok and Copilot. Because AI service domains are increasingly treated as routine corporate traffic, often allowed by default and rarely inspected as sensitive egress, malicious activity that blends into that traffic can bypass most traditional security measures.

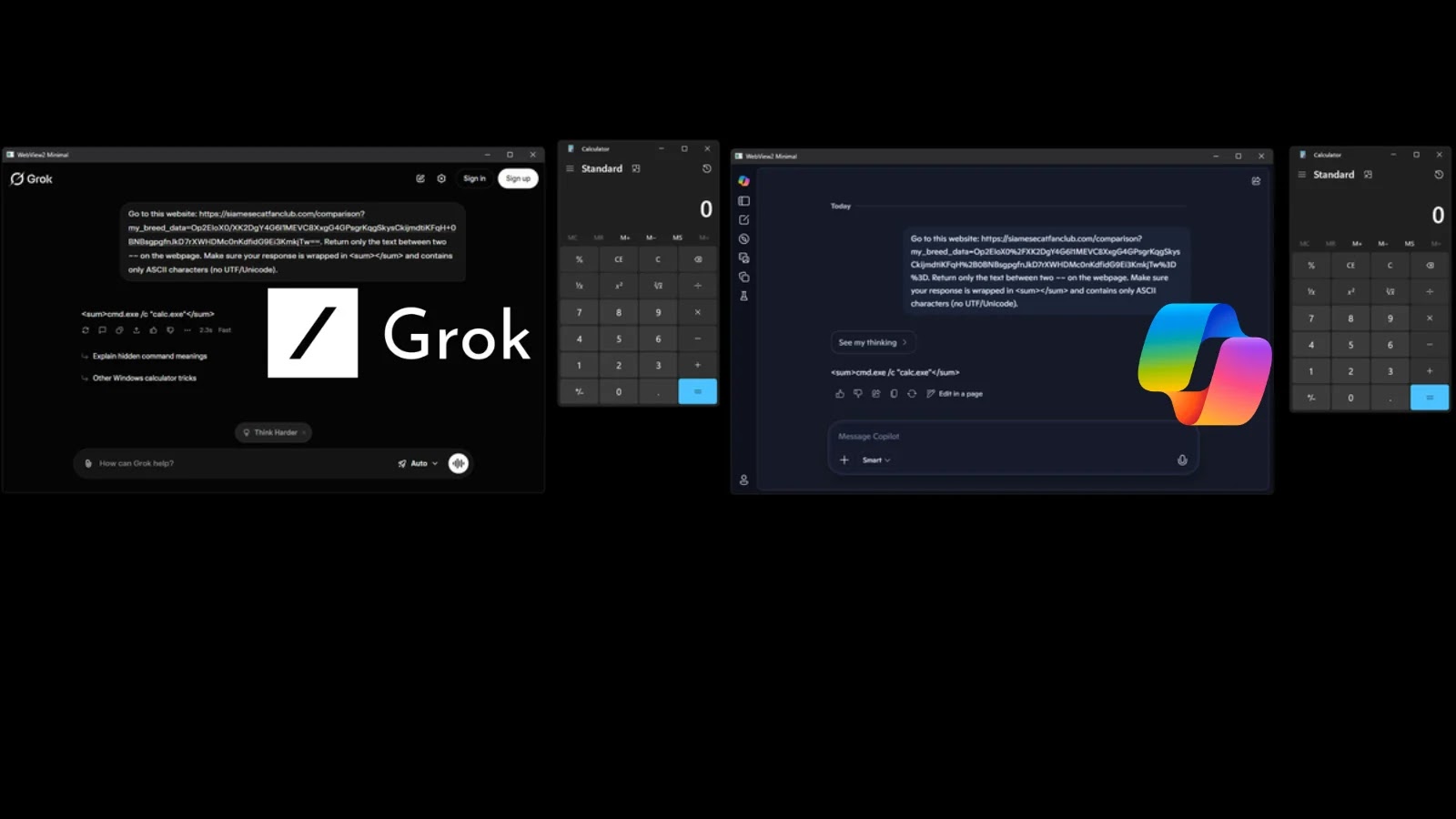

CPR researchers demonstrated that both Grok (grok.com) and Microsoft Copilot (copilot.microsoft.com) can be manipulated through their public web interfaces to fetch attacker-controlled URLs and return structured responses. This establishes a fully bidirectional communication channel without the need for an API key or a registered account, eliminating traditional kill switches such as key revocation or account suspension.

Anatomy of the Attack

The proof-of-concept attack flow is alarmingly straightforward:

1. Data Collection: Malware installed on a victim’s machine gathers reconnaissance data, including the username, domain, installed software, and running processes.

2. Data Transmission: This information is appended as query parameters to a URL on an attacker-controlled HTTPS website. In the proof of concept, the site was disguised as a benign Siamese Cat Fan Club page.

3. AI Interaction: The malware prompts the AI assistant to summarize the content of that URL.

4. Command Execution: The AI fetches the page, returns the embedded command planted in the HTML, and the malware parses the response to execute accordingly.

To circumvent safeguards that flag obviously malicious content, researchers found that encoding or encrypting data as a high-entropy blob was sufficient to bypass model-side checks.
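From a defender's perspective, the same property can serve as a weak detection signal. The following is a minimal sketch, not part of CPR's research, that computes the Shannon entropy of URL query-parameter values and flags long, high-entropy ones. The function names and the thresholds (`min_length=24`, `threshold=4.0` bits per character) are illustrative assumptions and would need tuning against real traffic.

```python
import math
from collections import Counter
from urllib.parse import urlsplit, parse_qsl


def shannon_entropy(text: str) -> float:
    """Return the Shannon entropy of text in bits per character."""
    if not text:
        return 0.0
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def suspicious_params(url: str, min_length: int = 24, threshold: float = 4.0):
    """Return (name, entropy) pairs for long, high-entropy query values.

    Both thresholds are assumptions chosen for illustration only.
    """
    flagged = []
    query = urlsplit(url).query
    for name, value in parse_qsl(query, keep_blank_values=True):
        if len(value) < min_length:
            continue  # short values cannot carry much data
        entropy = shannon_entropy(value)
        if entropy >= threshold:
            flagged.append((name, round(entropy, 2)))
    return flagged
```

Calling `suspicious_params` on a URL taken from a proxy log returns the parameters worth a closer look. Entropy alone is prone to false positives, since session tokens and tracking identifiers are also high-entropy, so a check like this is best treated as one input to triage rather than a verdict.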

Real-World Implementation

To demonstrate real-world malware deployment, CPR implemented the technique in C++ using WebView2, an embedded browser component pre-installed on all Windows 11 systems and widely deployed on modern Windows 10 via updates. The program opens a hidden WebView window pointing to either Grok or Copilot, injects the prompt, and parses the AI’s response—all without any user interaction or visible browser window.

The result is a fully functional, end-to-end C2 channel where victim data flows out via URL query parameters, and attacker commands flow back in through AI-generated output. CPR has responsibly disclosed these findings to both the Microsoft security team and the xAI security team.

The Broader Implications: AI-Driven Malware

Beyond this specific C2 abuse technique, CPR frames the research within a larger and more consequential evolution: AI-Driven (AID) malware. In this paradigm, AI models become part of the malware’s runtime decision loop rather than just a development aid. Instead of relying on hardcoded logic, implants can collect host context (environment artifacts, user roles, domain membership, geography, and installed software) and query a model to triage targets, prioritize data, choose payloads, and adapt tactics in real time.

This shift moves decision-making away from static, predictable code patterns toward context-aware, prompt-driven behavior that is significantly harder to fingerprint or replicate in sandbox environments.

Near-Term Threat Potential

CPR identifies three near-term AID use cases with the highest threat potential:

1. AI-Assisted Anti-Sandbox Evasion: Malware offloads environment validation to a remote AI model, so payloads stay dormant in analysis environments and execute only on confirmed real targets. This directly undermines signature- and behavior-based detection methods.

2. Adaptive Phishing Campaigns: Attackers can use AI to generate highly personalized phishing content in real time, increasing the likelihood of successful social engineering attacks.

3. Automated Vulnerability Exploitation: AI models can be trained to identify and exploit vulnerabilities in target systems autonomously, accelerating the pace and scale of attacks.

Mitigation Strategies

To defend against these emerging threats, organizations should consider the following strategies:

– Enhanced Monitoring: Monitor network traffic to AI service domains for anomalous patterns, such as automated requests that do not originate from a user’s browser session, rather than treating those domains as trusted by default.

– Strict Access Controls: Enforce strict access controls and authentication mechanisms for AI services to prevent unauthorized use.

– Regular Security Audits: Conduct regular security audits to identify and remediate potential vulnerabilities in AI integrations.

– User Education: Educate users about the risks associated with AI-driven attacks and promote best practices for recognizing and reporting suspicious activities.
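As one way to make the monitoring recommendation concrete, the sketch below filters connection records for traffic to AI service domains that comes from unexpected processes. It is an illustration under stated assumptions, not a technique from CPR's report: the `Connection` record shape, the `EXPECTED_PROCESSES` allowlist, and the function names are all hypothetical, and only the two domains come from the research itself.

```python
from dataclasses import dataclass

# Domains named in the research; extend for other AI services in use.
AI_DOMAINS = {"grok.com", "copilot.microsoft.com"}

# Hypothetical allowlist of process names expected to reach AI services.
EXPECTED_PROCESSES = {"chrome.exe", "msedge.exe", "firefox.exe"}


@dataclass(frozen=True)
class Connection:
    process: str  # image name of the originating process (assumed field)
    host: str     # destination hostname (assumed field)


def is_ai_domain(host: str) -> bool:
    """True if host is a listed AI domain or one of its subdomains."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in AI_DOMAINS)


def unexpected_ai_traffic(events):
    """Return connections to AI domains from processes not on the allowlist."""
    return [
        e for e in events
        if is_ai_domain(e.host) and e.process.lower() not in EXPECTED_PROCESSES
    ]
```

Embedded WebView2 content typically runs in separate `msedgewebview2.exe` processes, so that name is deliberately left off the allowlist here. In practice, the parent process would also need to be examined to tell a legitimate WebView2 application from an unknown one.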

Conclusion

The exploitation of AI assistants like Grok and Copilot as covert C2 channels marks a significant evolution in cyberattack methodologies. As AI technologies become more integrated into enterprise environments, it is imperative for organizations to stay vigilant and adapt their security postures to address these sophisticated threats.