Google’s Monocular Android XR Glasses: A Leap into Augmented Reality

In December 2024, Google unveiled its prototype Android XR glasses. A year later, the company is poised to release AI glasses equipped with displays in 2026, a product that would effectively bring the vision of Google Glass to fruition.

Introduction to AI Glasses

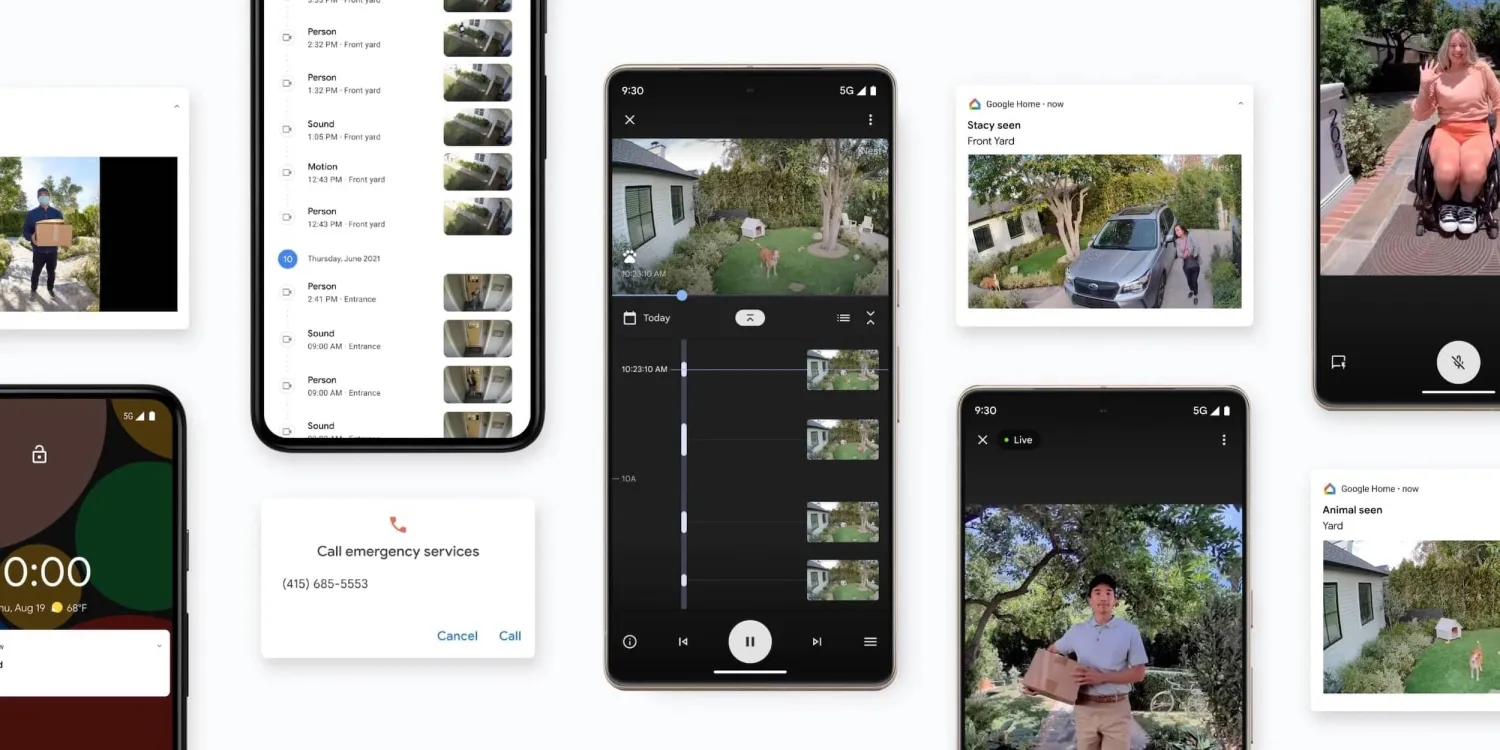

Google’s forthcoming AI glasses will be available in two variants: one with integrated displays and another without. The display-less version relies on cameras, microphones, and speakers. Integration between Android XR and Wear OS extends what it can do: for instance, capturing a photo with the display-less glasses sends a notification to a paired smartwatch, where the user can preview the image. Android XR devices will also support gesture controls.

Monocular Display Glasses: A Game-Changer

Contrary to initial expectations, Google has announced Android XR glasses with a single display over the right eye, known as monocular glasses, for release in 2026.

During a recent demonstration, the monocular prototype showed several features:

– Music Playback: Asking Gemini, the virtual assistant, to play a song brought up a compact, colorful now-playing screen with album artwork. Tapping the side touchpad expanded it into a more detailed interface consistent with Android 16’s design language.

– Video Calls: Answering a video call brought up a floating, rectangular feed of the caller’s face, made possible by microLED technology developed since Google’s acquisition of Raxium in 2022. The display was sharp and vibrant, comparable to a smartphone screen.

– Dual Video Feeds: Sharing the user’s point-of-view camera during the call showed two side-by-side video feeds: the caller’s and the user’s own.

– Image Capture and Augmentation: After taking a photo and adding elements to it with Nano Banana Pro, the generated image appeared directly in the user’s line of sight within seconds.

Leveraging the Android Ecosystem

A significant advantage of these glasses is their integration with the existing Android app ecosystem. Apps running on a paired smartphone can be projected onto the Android XR glasses, providing rich media controls and notifications without additional development work. Developers who want to optimize their apps further can use the latest Android XR SDK (Developer Preview 3) and an emulator for testing. Google has also introduced the Glimmer design language, which incorporates Material Design principles, to guide developers in building interfaces for the glasses.

User Interface and Interaction

The user interface on Android XR glasses is minimalistic, resembling homescreen widgets, which suits the form factor. A standout feature is AR navigation, akin to Google Maps Live View on smartphones but adapted for glasses. When looking straight ahead, users see a pill-shaped direction indicator. Tilting the head downward reveals a map overlay, similar to in-game navigation guides, and the transition between the two is smooth. Third-party apps, such as Uber, can use this feature to provide step-by-step directions and images that guide users to specific locations, like airport pickup points.
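The head-tilt behavior described above can be pictured as a small two-state switch. The sketch below is purely illustrative and is not Google's implementation or any Android XR API: the class name `NavView`, the pitch thresholds, and the idea of using two separate thresholds (hysteresis) so the view does not flicker near the boundary are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class NavView:
    """Hypothetical two-state navigation view: 'pill' or 'map'."""

    # Assumed thresholds in degrees of head pitch (negative = looking down).
    show_map_below_deg: float = -20.0   # switch to the map at or below this pitch
    show_pill_above_deg: float = -10.0  # switch back to the pill at or above this pitch
    state: str = "pill"

    def update(self, pitch_deg: float) -> str:
        """Return the view to show for the latest head-pitch reading."""
        if self.state == "pill" and pitch_deg <= self.show_map_below_deg:
            self.state = "map"
        elif self.state == "map" and pitch_deg >= self.show_pill_above_deg:
            self.state = "pill"
        return self.state


view = NavView()
# Look ahead, glance down slightly, look down, rise slightly, look ahead again.
views = [view.update(pitch) for pitch in (0, -15, -25, -15, -5)]
print(views)
```

The gap between the two thresholds means a reading of -15 degrees keeps whichever view is already showing, which is one simple way such a transition could stay stable while the wearer's head moves slightly.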

Development and Availability

While the display-less version of the AI glasses is expected to launch first, the monocular display version is slated for a 2026 release. Google is currently distributing monocular development kits to developers, with plans to expand access in the coming months. In the interim, Android Studio offers an emulator for optical passthrough experiences, allowing developers to begin crafting applications tailored for this new platform.

Future Prospects: Binocular Glasses

Beyond monocular glasses, Google is also developing binocular glasses with a waveguide display in each lens. Having two displays lets users watch YouTube videos in native 3D with depth perception. The Google Maps experience is also richer on this hardware, with a more detailed map that users can zoom in and out of. The binocular glasses are expected to launch at a later date and aim to unlock productivity use cases that could eventually replace certain smartphone tasks.

Conclusion

The impending release of monocular Android XR glasses marks a significant milestone in augmented reality. Google’s approach, which combines internal development with strategic partnerships, has produced a device that places digital content directly in the user’s field of view. It realizes the long-standing vision of Google Glass and sets the stage for later AR hardware, including the binocular glasses.