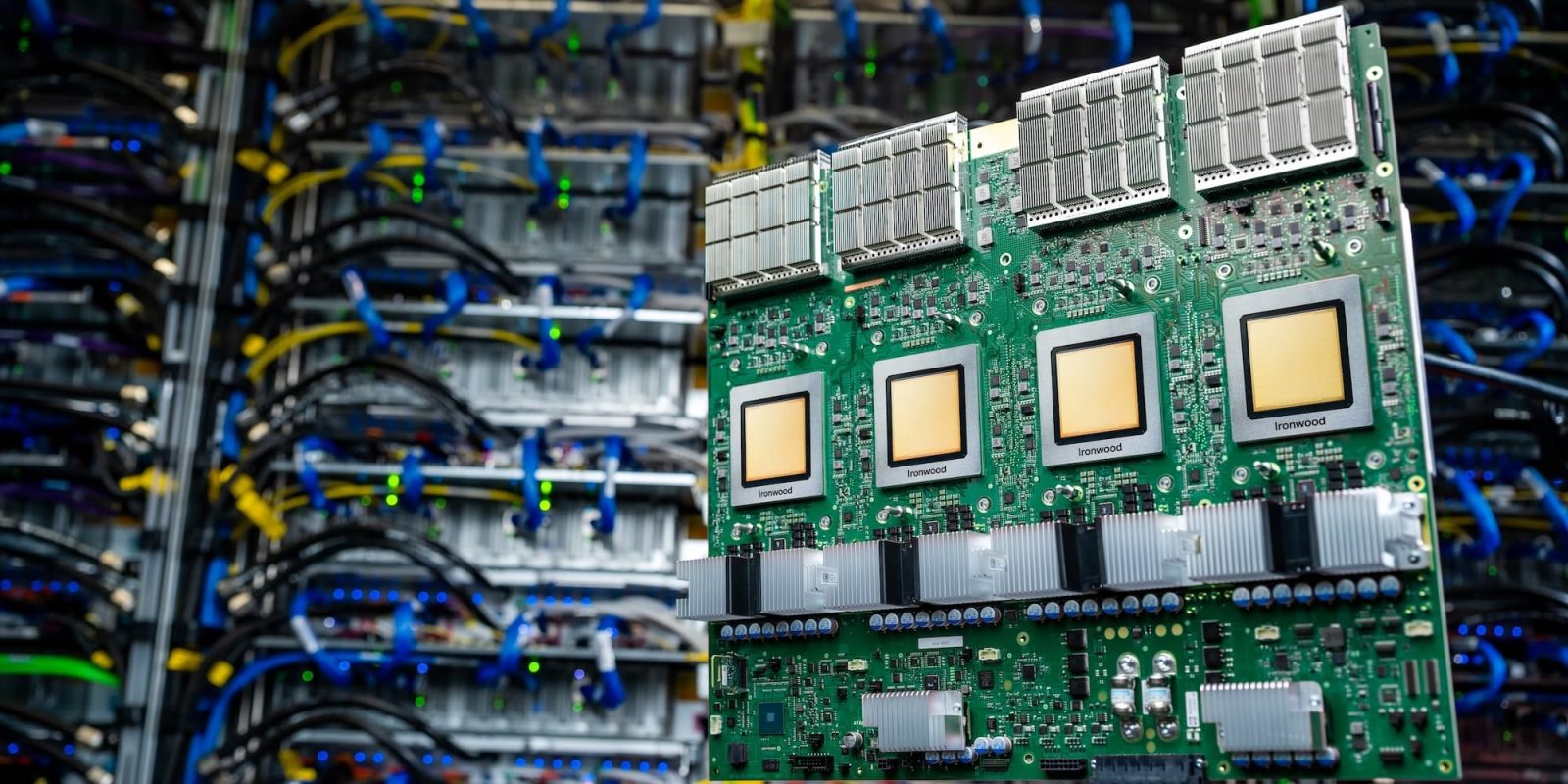

On April 9, 2025, at its Cloud Next 2025 conference, Google introduced Ironwood, its seventh-generation Tensor Processing Unit (TPU). The new AI accelerator is designed primarily for inference, with the aim of improving both the performance and the scalability of AI applications.

Advancements in AI Processing

Google positions Ironwood as infrastructure for a shift in how AI is used: from reactive models that provide real-time data to proactive systems that generate insights and interpretations on their own. Google calls this shift the “age of inference”, in which AI agents not only retrieve data but also deliver complete answers and insights.

Ironwood is designed for complex “thinking models”, a category that includes Large Language Models (LLMs), Mixture of Experts (MoE) models, and advanced reasoning tasks. These workloads need massive parallel processing and efficient memory access, so the chip is built to minimize on-chip data movement and latency during large tensor operations.

Unprecedented Performance and Scalability

Each Ironwood chip delivers a peak of 4,614 teraflops (TFLOPs). Google Cloud customers can deploy Ironwood in two configurations: 256 chips or 9,216 chips. A full 9,216-chip pod reaches a combined peak of 42.5 exaflops. Google compares this with El Capitan, the world’s largest supercomputer, which delivers about 1.7 exaflops. The two figures are not directly comparable, however: Ironwood’s number is a low-precision (FP8) peak, while El Capitan’s is a 64-bit floating-point benchmark result.
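The pod-level figure follows from the per-chip number by simple multiplication. The short Python sketch below reproduces that arithmetic using only the figures quoted in this article; the variable and function names are illustrative and do not come from Google. The final ratio divides the two headline numbers and says nothing about whether they were measured at the same numeric precision.

```python
# Figures quoted in the article; all names here are illustrative.
PER_CHIP_TFLOPS = 4_614          # peak compute per Ironwood chip
FULL_POD_CHIPS = 9_216           # largest configuration
SMALL_CONFIG_CHIPS = 256         # smallest configuration
EL_CAPITAN_EXAFLOPS = 1.7        # figure quoted for El Capitan

TFLOPS_PER_EXAFLOP = 1_000_000   # 1 exaflop = 10^6 teraflops


def peak_exaflops(chips: int, per_chip_tflops: int = PER_CHIP_TFLOPS) -> float:
    """Aggregate peak compute for a given chip count, in exaflops."""
    return chips * per_chip_tflops / TFLOPS_PER_EXAFLOP


full_pod = peak_exaflops(FULL_POD_CHIPS)
small_config = peak_exaflops(SMALL_CONFIG_CHIPS)
headline_ratio = full_pod / EL_CAPITAN_EXAFLOPS

print(f"9,216-chip pod:  {full_pod:.1f} exaflops")      # 42.5
print(f"256-chip config: {small_config:.2f} exaflops")  # 1.18
print(f"Headline ratio:  {headline_ratio:.1f}x")        # 25.0
```

These are peak numbers; sustained throughput on a real workload will be lower and depends on the model, batch size, and interconnect usage.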

Ironwood delivers twice the performance per watt of its predecessor, the sixth-generation Trillium TPU introduced in 2024. Each chip also carries 192 GB of High Bandwidth Memory (HBM), six times as much as Trillium, which lets a chip hold larger models and datasets and reduces the need for frequent data transfers.
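The memory figures can be checked the same way. The sketch below derives Trillium's implied per-chip HBM from the sixfold ratio and totals the HBM in a full 9,216-chip pod. It uses only numbers quoted in this article, assumes decimal units (1 PB = 10^6 GB), and uses illustrative names throughout.

```python
# Figures quoted in the article; all names here are illustrative.
IRONWOOD_HBM_GB = 192            # HBM per Ironwood chip
HBM_RATIO_VS_TRILLIUM = 6        # "sixfold increase over Trillium"
FULL_POD_CHIPS = 9_216           # largest configuration
PERF_PER_WATT_RATIO = 2          # Ironwood vs. Trillium

GB_PER_PB = 1_000_000            # decimal units assumed

# Per-chip HBM implied for Trillium by the sixfold ratio.
trillium_hbm_gb = IRONWOOD_HBM_GB // HBM_RATIO_VS_TRILLIUM

# Total HBM across a full pod.
pod_hbm_gb = IRONWOOD_HBM_GB * FULL_POD_CHIPS
pod_hbm_pb = pod_hbm_gb / GB_PER_PB

# At equal performance, doubling performance per watt halves the power draw.
relative_power = 1 / PERF_PER_WATT_RATIO

print(f"Implied Trillium HBM per chip: {trillium_hbm_gb} GB")   # 32 GB
print(f"HBM in a full pod: {pod_hbm_pb:.2f} PB")                # 1.77 PB
print(f"Power for the same work vs. Trillium: {relative_power:.0%}")  # 50%
```

The power line is an idealized reading of the performance-per-watt claim; real savings depend on utilization and on how the workload maps to each chip.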

Integration with Pathways

Ironwood works with Pathways, Google’s distributed runtime for large-scale training and inference. Previously used internally at Google, Pathways is now available to Google Cloud customers for their own AI workloads.

Implications for AI Development

Ironwood gives developers and enterprises hardware for building and deploying more sophisticated and efficient AI models. Its higher per-chip performance and its ability to scale to thousands of chips are likely to speed up the development of AI applications across industries.