Cybersecurity researchers have identified ‘MalTerminal,’ the earliest known malware that uses a Large Language Model (LLM) to generate malicious code at runtime. The discovery marks a notable shift in cyber threats: the malware calls OpenAI’s GPT-4 to create ransomware or a reverse shell on demand, which complicates detection and analysis.

The Advent of AI-Driven Malware

Traditional malware typically contains hardcoded malicious logic, making it susceptible to detection through static signatures. MalTerminal instead generates its harmful code on the fly by querying an external AI model. Because the generated code can differ on each execution, a signature written for one run may not match the next, which lets the malware slip past signature-based defenses.
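To make the signature problem concrete, here is a minimal sketch that treats a static signature as a SHA-256 hash, the simplest possible form (real signatures also use byte patterns and heuristics). The two snippets and the function names are hypothetical stand-ins for functionally equivalent code that an LLM might emit on separate runs.

```python
import hashlib

# Two hypothetical snippets with the same behavior but different text,
# standing in for code an LLM might produce on two separate executions.
variant_a = b"for name in files:\n    process(name)\n"
variant_b = b"for f in files:\n    process(f)\n"


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def matches_signature(data: bytes, known_hashes: set[str]) -> bool:
    """Hash-based 'signature' check: exact match against known digests."""
    return sha256_hex(data) in known_hashes


# A signature database built from the first observed variant only.
known_hashes = {sha256_hex(variant_a)}

print(matches_signature(variant_a, known_hashes))  # the variant already seen
print(matches_signature(variant_b, known_hashes))  # the regenerated variant
```

The first check matches and the second does not, even though both snippets do the same thing. That gap is what runtime code generation exploits.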

SentinelLABS researchers, who uncovered MalTerminal, emphasize the unpredictability of such AI-enabled malware. By delegating code generation to an LLM, the malware’s behavior becomes highly variable, posing significant challenges for security tools attempting to anticipate and mitigate its actions.

Tracing the Evolution of AI in Cyber Threats

The journey towards AI-integrated malware has seen notable milestones. In August 2025, ESET identified ‘PromptLock,’ initially perceived as the first AI-powered ransomware. Later revelations indicated it was a proof-of-concept developed by New York University researchers to demonstrate potential risks. PromptLock, written in Golang, utilized the Ollama API to run an LLM locally on the victim’s machine, generating malicious Lua scripts compatible across multiple operating systems.

Unlike PromptLock, which runs its model locally, MalTerminal relies on a cloud-hosted API, so it needs network access and a valid API key but no model on the victim’s machine. Both samples illustrate the growing integration of AI models directly into malicious payloads, which lets malware adapt its behavior to the target environment.

Unveiling MalTerminal

The discovery of MalTerminal resulted from a new threat-hunting approach. Instead of searching for known malicious code, researchers looked for artifacts that indicate LLM integration, such as embedded API keys and specific prompt structures. They developed YARA rules to detect patterns associated with major LLM providers like OpenAI and Anthropic, then ran a year-long retrohunt on VirusTotal that identified over 7,000 samples with embedded keys. Most were benign developer mistakes; narrowing the set to samples with multiple API keys and prompts showing malicious intent led to the identification of MalTerminal.
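The key-hunting idea can be approximated outside YARA with a short Python sketch. The key shapes used here are assumptions and do not come from the researchers’ rules: OpenAI keys are assumed to start with `sk-` and contain the substring `T3BlbkFJ`, and Anthropic keys are assumed to start with `sk-ant-api03-`. The function names are hypothetical.

```python
import re

# Assumed key shapes (not taken from the researchers' YARA rules).
KEY_PATTERNS = {
    "openai": re.compile(rb"sk-[A-Za-z0-9_-]{20,}T3BlbkFJ[A-Za-z0-9_-]{20,}"),
    "anthropic": re.compile(rb"sk-ant-api03-[A-Za-z0-9_-]{20,}"),
}


def find_embedded_keys(data: bytes) -> dict[str, set[bytes]]:
    """Return the distinct key-like strings found in data, grouped by provider."""
    return {name: set(rx.findall(data)) for name, rx in KEY_PATTERNS.items()}


def has_multiple_keys(data: bytes) -> bool:
    """True when a sample embeds two or more distinct key-like strings."""
    found = find_embedded_keys(data)
    return sum(len(keys) for keys in found.values()) >= 2
```

In use, one would read each sample as bytes, for example with `pathlib.Path(p).read_bytes()`, and keep only those for which `has_multiple_keys` returns true. A single embedded key is weak evidence on its own, since most such samples turned out to be developer errors.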

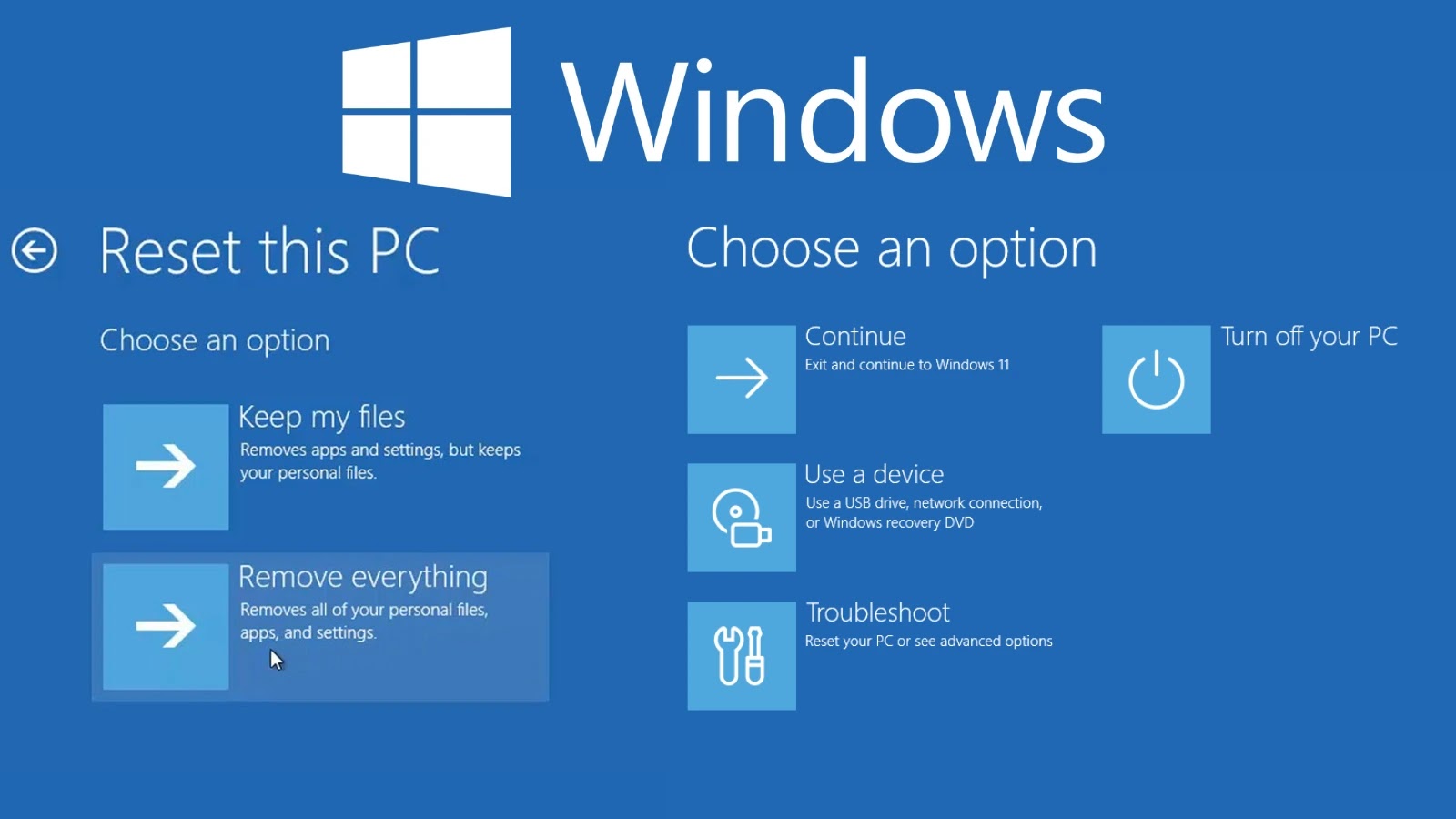

Analysis revealed that MalTerminal utilized a deprecated OpenAI chat completion API endpoint, retired in November 2023, suggesting its development predates that time. The malware prompts operators to choose between deploying ransomware or a reverse shell, subsequently using GPT-4 to generate the necessary code.

Implications for Cyber Defense

The emergence of AI-driven malware like MalTerminal opens a new front in cybersecurity. Traditional detection methods that rely on static signatures are far less effective against code that is regenerated on every run. Moreover, because the malware talks to legitimate LLM APIs, its network traffic is hard to distinguish from benign use of the same services.

However, this new class of malware also presents unique vulnerabilities. Its dependence on external APIs and the necessity to embed API keys and prompts within its code create opportunities for detection and neutralization. Revoking an API key, for instance, can render the malware inoperable. Additionally, the presence of multiple API keys and specific prompt structures can serve as indicators for identifying such threats.
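As a rough illustration of how these indicators might be combined, the sketch below scores a sample from two inputs: the number of embedded API keys (counted elsewhere) and whether its printable strings contain prompt-like text with malicious intent. The marker phrases, thresholds, and function name are hypothetical choices for illustration, not indicators published by the researchers.

```python
import re

# Hypothetical indicator phrases, chosen for illustration only.
PROMPT_MARKERS = (b"you are a", b"system prompt", b'"role"', b"respond only with")
INTENT_TERMS = (b"ransomware", b"reverse shell", b"encrypt all files")

# Runs of at least 8 printable ASCII characters, similar to the `strings` tool.
STRINGS_RE = re.compile(rb"[\x20-\x7e]{8,}")


def triage(data: bytes, key_count: int) -> str:
    """Return 'high', 'review', or 'low' for a sample.

    key_count is the number of distinct embedded API keys found by a
    separate scan; data is the raw sample content.
    """
    strings = [s.lower() for s in STRINGS_RE.findall(data)]
    has_prompt = any(m in s for s in strings for m in PROMPT_MARKERS)
    has_intent = any(t in s for s in strings for t in INTENT_TERMS)

    if key_count >= 2 and has_prompt and has_intent:
        return "high"
    if key_count >= 1 and (has_prompt or has_intent):
        return "review"
    # Keys alone, or prompt text alone, are treated as low priority here.
    return "low"
```

The thresholds deliberately rank a sample with keys but no suspicious prompt text as low priority, reflecting the observation that most embedded keys are harmless mistakes. Any real deployment would need tuned indicators and manual review.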

While LLM-enabled malware remains in an experimental stage, its development serves as a critical warning. Cyber defenders must adapt their strategies to address a future where malicious code is generated on demand, emphasizing the need for proactive measures and continuous monitoring of AI model integrations.