The rapid advancement of artificial intelligence (AI) has produced tools capable of creating highly realistic deepfakes: manipulated audio, video, or images that convincingly mimic real individuals. This evolution poses significant challenges across sectors including cybersecurity, politics, and personal privacy.

The Escalating Threat of AI-Generated Deepfakes

Recent incidents underscore the growing sophistication and prevalence of AI-driven attacks:

– Voice Phishing Surge: According to CrowdStrike’s 2025 Global Threat Report, there was a 442% increase in voice phishing (vishing) attacks between the first and second halves of 2024, driven by AI-generated phishing and impersonation tactics.

– Social Engineering Prevalence: Verizon’s 2025 Data Breach Investigations Report indicates that social engineering remains a top pattern in breaches, with phishing and pretexting accounting for a significant portion of incidents.

– North Korean Deepfake Operations: North Korean threat actors have been observed using deepfake technology to create synthetic identities for online job interviews, aiming to secure remote work positions and infiltrate organizations.

These examples highlight the urgent need for robust defenses against AI-driven impersonation and fraud.

Contributing Factors to the Deepfake Proliferation

Several converging trends have amplified the deepfake threat:

1. Accessibility of AI Tools: Open-source voice and video manipulation tools have democratized the creation of deepfakes, enabling malicious actors to impersonate individuals with minimal resources.

2. Virtual Collaboration Vulnerabilities: The widespread adoption of virtual communication platforms such as Zoom, Teams, and Slack has created environments where participants often assume that a familiar face or voice belongs to the person it appears to be, providing fertile ground for deepfake exploitation.

3. Inadequate Detection Mechanisms: Traditional detection methods, which rely on identifying anomalies or inconsistencies, are increasingly insufficient against the sophistication of modern deepfakes.

Addressing these factors requires a paradigm shift from detection to prevention.

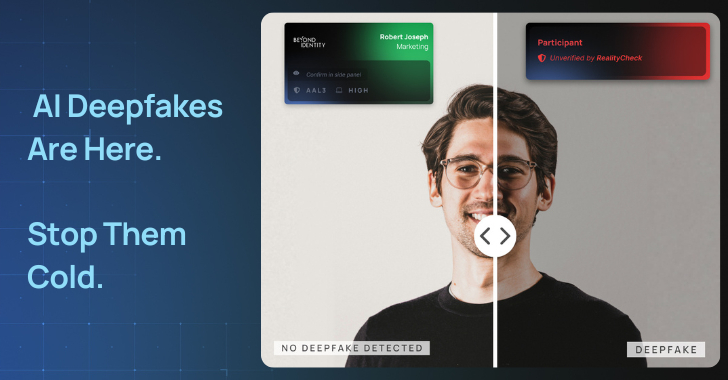

Limitations of Current Detection-Based Approaches

Traditional defenses focus on detection: training users to spot suspicious behavior, or using AI to estimate whether a voice or video is synthetic. As deepfakes grow more sophisticated, these methods become less reliable, because they can only assign a likelihood that content is fake, and a sufficiently convincing deepfake will be scored as genuine. In high-stakes environments, relying solely on such probability-based tools is no longer sufficient.

Implementing Preventative Measures

To effectively combat deepfake threats, organizations should adopt a prevention-focused strategy that includes:

– Identity Verification: Implementing cryptographic credentials to ensure that only verified, authorized users can access sensitive meetings or communications.

– Device Integrity Checks: Ensuring that all devices accessing organizational resources meet security compliance standards to prevent potential entry points for attackers.

– Visible Trust Indicators: Providing clear, verifiable indicators of identity and device security to all participants in a communication, thereby reducing reliance on user judgment.
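The three measures above can be combined into a single admission check. The Python sketch below is a minimal illustration under stated assumptions, not a production design: the `MeetingGatekeeper` class, the `REQUIRED_POSTURE` policy, and the badge strings are all hypothetical. For brevity it uses an HMAC challenge-response over a pre-enrolled shared secret as a stand-in for the public-key credentials (for example, hardware-backed key pairs) a real deployment would more likely use. A participant is admitted only if they prove possession of the enrolled key and their reported device posture matches the policy; the outcome is rendered as a badge other participants can see.

```python
import hashlib
import hmac
import secrets

# Hypothetical device policy. In practice this would come from an endpoint
# management system, and posture should be attested rather than self-reported.
REQUIRED_POSTURE = {"disk_encrypted": True, "os_patched": True, "edr_running": True}


def sign_challenge(secret_key: bytes, nonce: bytes) -> bytes:
    """Client side: prove possession of the enrolled key without sending it."""
    return hmac.new(secret_key, nonce, hashlib.sha256).digest()


class MeetingGatekeeper:
    """Hypothetical admission service for a sensitive meeting.

    A shared-secret HMAC stands in for a public-key credential here; with
    real key pairs the server would store only public keys.
    """

    def __init__(self) -> None:
        self._enrolled: dict[str, bytes] = {}  # user_id -> enrolled key
        self._pending: dict[str, bytes] = {}   # user_id -> outstanding nonce

    def enroll(self, user_id: str, secret_key: bytes) -> None:
        self._enrolled[user_id] = secret_key

    def issue_challenge(self, user_id: str) -> bytes:
        # A fresh random nonce per attempt, so a captured response cannot be replayed.
        nonce = secrets.token_bytes(32)
        self._pending[user_id] = nonce
        return nonce

    def admit(self, user_id: str, response: bytes, posture: dict) -> dict:
        key = self._enrolled.get(user_id)
        nonce = self._pending.pop(user_id, None)  # each nonce is usable once
        identity_ok = False
        if key is not None and nonce is not None:
            expected = sign_challenge(key, nonce)
            # Constant-time comparison avoids leaking how many bytes matched.
            identity_ok = hmac.compare_digest(expected, response)

        # Device integrity: every policy item must match exactly.
        failed = [name for name, want in REQUIRED_POSTURE.items() if posture.get(name) != want]
        device_ok = not failed

        return {
            "user_id": user_id,
            "identity_verified": identity_ok,
            "device_compliant": device_ok,
            "failed_checks": failed,
            "admitted": identity_ok and device_ok,
        }


def trust_badge(result: dict) -> str:
    """Render the visible indicator shown to other participants."""
    if result["admitted"]:
        return f"[VERIFIED] {result['user_id']}: identity and device checks passed"
    reasons = []
    if not result["identity_verified"]:
        reasons.append("identity")
    if not result["device_compliant"]:
        reasons.append("device (" + ", ".join(result["failed_checks"]) + ")")
    return f"[UNVERIFIED] {result['user_id']}: failed " + "; ".join(reasons)


if __name__ == "__main__":
    gate = MeetingGatekeeper()
    alice_key = secrets.token_bytes(32)
    gate.enroll("alice", alice_key)

    # Genuine participant: holds the enrolled key and a compliant device.
    nonce = gate.issue_challenge("alice")
    print(trust_badge(gate.admit("alice", sign_challenge(alice_key, nonce), dict(REQUIRED_POSTURE))))
    # prints: [VERIFIED] alice: identity and device checks passed

    # Impersonator: a convincing deepfake, but no access to alice's key.
    nonce = gate.issue_challenge("alice")
    forged = sign_challenge(secrets.token_bytes(32), nonce)
    print(trust_badge(gate.admit("alice", forged, dict(REQUIRED_POSTURE))))
    # prints: [UNVERIFIED] alice: failed identity
```

The design point is that the deepfake itself never enters the decision: admission depends on holding the enrolled key and a compliant device, not on how convincing the face or voice is. A real system would also need attested rather than self-reported device posture, since a compromised client could simply claim compliance.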

By making impersonation depend on possessing a verified credential and a compliant device, rather than on producing a convincing face or voice, organizations can proactively shut down AI deepfake attacks before they infiltrate critical conversations or operations.

Conclusion

The rise of AI-generated deepfakes necessitates a shift from reactive detection to proactive prevention. By implementing robust identity verification, ensuring device integrity, and providing visible trust indicators, organizations can fortify themselves against the evolving landscape of AI-driven threats.