Critical AI Vulnerabilities Uncovered in Major Inference Frameworks

Recent cybersecurity research has identified significant remote code execution vulnerabilities within prominent artificial intelligence (AI) inference engines developed by industry leaders such as Meta, Nvidia, and Microsoft, as well as in open-source projects like vLLM and SGLang. These vulnerabilities primarily stem from the unsafe use of ZeroMQ (ZMQ) and Python’s pickle deserialization, a pattern termed ShadowMQ.

The underlying flaw was first identified in Meta’s Llama large language model (LLM) framework and is tracked as CVE-2024-50050 (CVSS scores: 6.3 and 9.3, depending on the assessor). The vulnerability, patched in October 2024, came from calling `recv_pyobj()`, a method of pyzmq (the Python binding for ZeroMQ), which deserializes incoming data with Python’s pickle module. Because the ZeroMQ socket was exposed over the network, an attacker could execute arbitrary code by sending a malicious object for deserialization. The pyzmq library subsequently addressed the issue as well.
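The danger of unpickling untrusted bytes can be shown without a network socket. The sketch below is illustrative only: `HarmlessPayload` is a hypothetical stand-in for an attacker-crafted object, and the `eval` call stands in for whatever callable a real attacker would pick. It assumes, as described above, that `recv_pyobj()` hands the received bytes to pickle.

```python
import json
import pickle


class HarmlessPayload:
    """Hypothetical stand-in for an attacker-crafted object."""

    def __reduce__(self):
        # pickle records this callable and its arguments, then calls it
        # when the bytes are loaded. A real attacker would name something
        # far more damaging than a small arithmetic expression.
        return (eval, ("6 * 7",))


# Bytes as they would travel over the socket.
wire_bytes = pickle.dumps(HarmlessPayload())

# Loading the bytes runs the sender-chosen callable on the receiver.
unpickled = pickle.loads(wire_bytes)

# A data-only format cannot name callables; parsing yields plain values.
# The message fields here are made up for the example.
parsed = json.loads(b'{"op": "generate", "max_tokens": 16}')
```

After the load, `unpickled` holds the result of the expression the sender chose rather than a `HarmlessPayload` instance, which is the core of the problem: the receiver never decides what code runs. A data-only encoding such as JSON avoids this class of bug, at the cost of not being able to ship arbitrary Python objects.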

Further investigations by Oligo Security revealed that this insecure pattern was replicated across other inference frameworks, including NVIDIA TensorRT-LLM, Microsoft Sarathi-Serve, Modular Max Server, vLLM, and SGLang. Each of these frameworks exhibited similar unsafe practices: pickle deserialization over unauthenticated ZMQ TCP sockets. This widespread issue underscores the risks associated with code reuse without thorough security assessments.
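One general way to avoid processing unauthenticated messages is to verify a keyed signature before parsing anything. The following is a minimal sketch of that idea using only the Python standard library; it is not the fix adopted by any of the frameworks named above. The `seal` and `open_sealed` helpers and the `SHARED_KEY` value are hypothetical, and the payload is JSON rather than pickle.

```python
import hashlib
import hmac
import json

SHARED_KEY = b"replace-with-a-secret-from-your-config"  # hypothetical key
TAG_SIZE = hashlib.sha256().digest_size  # 32 bytes for SHA-256


def seal(message: dict, key: bytes = SHARED_KEY) -> bytes:
    """Serialize a message as JSON and prepend an HMAC-SHA256 tag."""
    body = json.dumps(message).encode("utf-8")
    tag = hmac.new(key, body, hashlib.sha256).digest()
    return tag + body


def open_sealed(frame: bytes, key: bytes = SHARED_KEY) -> dict:
    """Check the tag first; parse the body only if the tag matches."""
    tag, body = frame[:TAG_SIZE], frame[TAG_SIZE:]
    expected = hmac.new(key, body, hashlib.sha256).digest()
    # compare_digest avoids leaking the match position through timing.
    if not hmac.compare_digest(tag, expected):
        raise ValueError("message failed authentication")
    return json.loads(body)
```

A sender would pass the output of `seal(...)` to the socket's send call, and the receiver would pass the raw received bytes to `open_sealed(...)`. This sketch does not protect against replayed messages or provide encryption, so it complements network-level controls rather than replacing them.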

In some instances, the problem originated from direct code copying. For example, SGLang’s vulnerable file was adapted from vLLM, while Modular Max Server incorporated the same flawed logic from both vLLM and SGLang, thereby propagating the vulnerability across multiple codebases.
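Because the pattern spreads through copied code, a quick static check can help spot it in a codebase before it ships. The sketch below walks a Python syntax tree and flags calls to `recv_pyobj` and to `pickle.loads` or `pickle.load`. The function name `find_risky_calls` and the two rule sets are assumptions made for this example; a match only indicates that a call deserves review, not that it is exploitable.

```python
import ast

RISKY_METHODS = {"recv_pyobj"}  # flagged on any object
RISKY_CALLS = {("pickle", "loads"), ("pickle", "load")}  # flagged by module name


def find_risky_calls(source: str) -> list[tuple[int, str]]:
    """Return sorted (line number, attribute name) pairs for flagged calls."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        # Only attribute-style calls such as obj.method(...) are inspected.
        if not (isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute)):
            continue
        attr = node.func.attr
        owner = node.func.value
        owner_name = owner.id if isinstance(owner, ast.Name) else None
        if attr in RISKY_METHODS or (owner_name, attr) in RISKY_CALLS:
            findings.append((node.lineno, attr))
    return sorted(findings)
```

The check is deliberately narrow: it misses aliased imports (for example `from pickle import loads`) and any wrapper functions, so it is a starting point for review rather than a complete audit.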

The identified vulnerabilities have been assigned the following identifiers:

– CVE-2025-30165 (CVSS score: 8.0) – vLLM (Issue addressed by switching to the V1 engine by default)

– CVE-2025-23254 (CVSS score: 8.8) – NVIDIA TensorRT-LLM (Fixed in version 0.18.2)

– CVE-2025-60455 – Modular Max Server (Fixed)

– Sarathi-Serve (Remains unpatched)

– SGLang (Implemented incomplete fixes)

Because inference engines are a core component of AI infrastructure, compromising a single node could allow an attacker to execute arbitrary code on the cluster, escalate privileges, steal models, and deploy malicious payloads such as cryptocurrency miners for financial gain.

Avi Lumelsky of Oligo Security emphasized the rapid pace of project development and the common practice of borrowing architectural components from peers. However, he cautioned that when code reuse includes unsafe patterns, the consequences can spread quickly and widely.

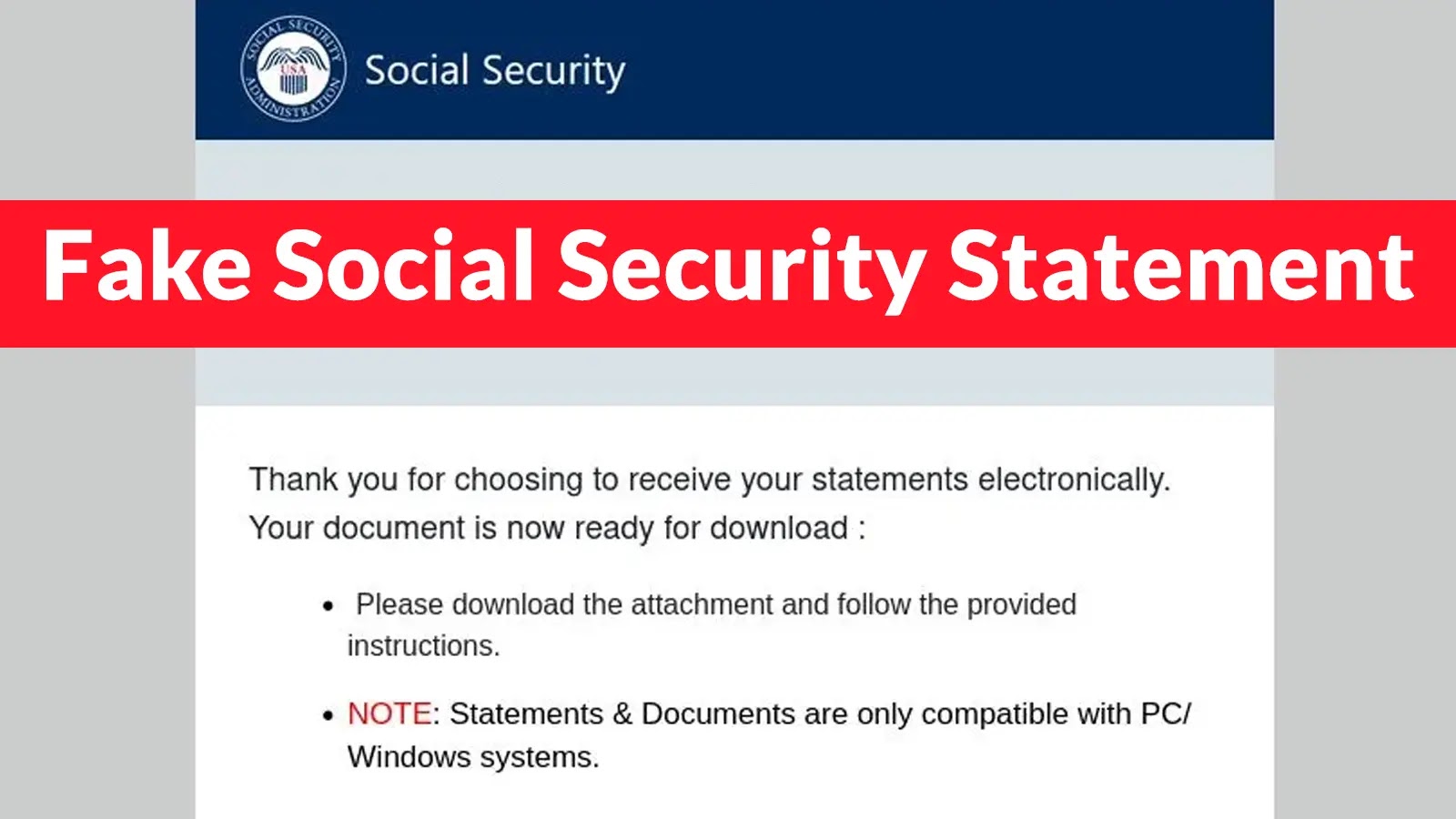

This disclosure coincides with findings from AI security platform Knostic, which revealed that Cursor’s new built-in browser is susceptible to JavaScript injection techniques. Attackers can exploit this vulnerability by registering a rogue local Model Context Protocol (MCP) server, bypassing Cursor’s controls to replace login pages with fraudulent ones that harvest credentials and transmit them to a remote server under the attacker’s control.

The attack requires the user to download and run the malicious MCP server. Once running, the server injects code into Cursor’s browser and redirects the user to the fake login page, which captures the credentials and forwards them to the attacker’s server.

Because Cursor, an AI-powered source code editor, is essentially a fork of Visual Studio Code, a malicious extension could also be crafted to inject JavaScript into the running integrated development environment (IDE) and perform arbitrary actions, such as marking harmless Open VSX extensions as malicious.

JavaScript running inside the Node.js interpreter, whether introduced by an extension, an MCP server, or a poisoned prompt or rule, inherits the IDE’s privileges. This includes full file-system access, the ability to modify or replace IDE functions (including installed extensions), and the ability to persist code that reattaches after a restart. Consequently, an attacker can transform the IDE into a platform for malware distribution and data exfiltration.

To mitigate these risks, users are advised to disable Auto-Run features in their IDEs, carefully vet extensions, install MCP servers only from trusted developers and repositories, monitor the data and APIs accessed by these servers, use API keys with minimal required permissions, and audit MCP server source code for critical integrations.