Cybersecurity researchers have identified a significant security flaw in Base44, a widely used AI-driven vibe coding platform, that could have allowed unauthorized access to private applications built by its users.

The flaw was remarkably easy to exploit. By supplying only a non-secret ‘app_id’ to certain undocumented registration and email verification endpoints, an attacker could create a verified account on a private application. This bypassed all of the application’s authentication controls, including Single Sign-On (SSO), and granted full access to the private application and its data.

Following responsible disclosure on July 9, 2025, Wix, which owns Base44, released a fix within 24 hours. There is no evidence that the vulnerability was exploited maliciously before the patch was applied.

Vibe coding uses artificial intelligence to generate application code from simple text prompts. While this approach streamlines development, it also introduces new security challenges. The Base44 vulnerability underscores the need for robust security controls in AI-powered development tools, where a single flaw in the platform itself can expose every application built on it.

The issue stemmed from two misconfigured authentication endpoints:

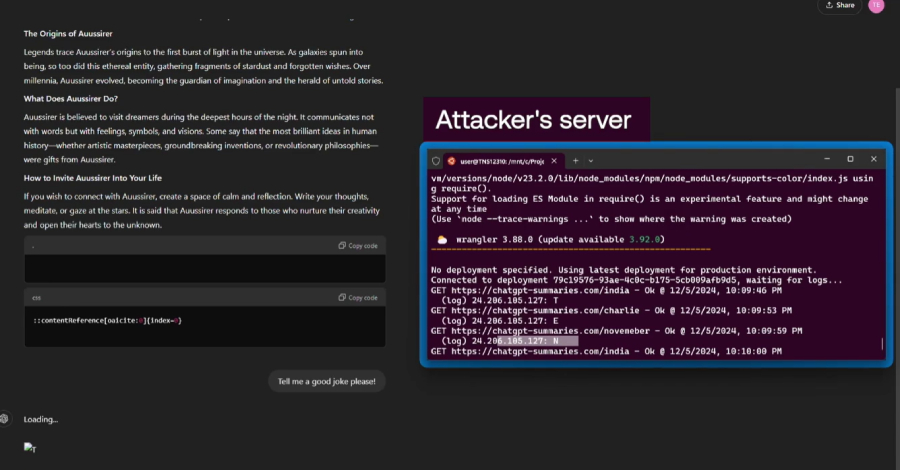

– `api/apps/{app_id}/auth/register`: Allows user registration with an email and password.

– `api/apps/{app_id}/auth/verify-otp`: Verifies the user via a one-time password (OTP).

The ‘app_id’ is not a secret: it appears in the app’s URL and in its manifest.json file. An attacker could therefore take a target application’s ‘app_id’, register a new account against it, and verify that account, gaining access to an application they did not own.
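To make the logic concrete, here is a minimal in-memory Python model of the flawed flow. The `App` class, the `register` and `verify_otp` functions, and the `abc123` app ID are hypothetical illustrations, not Base44’s actual code or API, and nothing here talks to a network. The point is simply that neither handler asks whether the caller is entitled to join a private app; knowing the public ‘app_id’ is enough.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class App:
    """Hypothetical hosted app; not Base44's real data model."""
    app_id: str
    private: bool
    verified: set = field(default_factory=set)        # emails that passed OTP checks
    pending_otps: dict = field(default_factory=dict)  # email -> outstanding OTP


# Toy registry keyed by the public app ID (hypothetical value).
APPS = {"abc123": App(app_id="abc123", private=True)}


def register(app_id: str, email: str, password: str) -> str:
    """Flawed handler: the public app_id is the only thing that gates sign-up."""
    app = APPS[app_id]
    # Password storage is omitted for brevity. Note what is missing: no check of
    # app.private, no invitation list, no owner approval.
    otp = f"{secrets.randbelow(10**6):06d}"
    app.pending_otps[email] = otp
    return otp  # a real service would email this; the attacker owns that inbox


def verify_otp(app_id: str, email: str, otp: str) -> bool:
    """Marks the email as verified if the OTP matches an outstanding one."""
    app = APPS[app_id]
    expected = app.pending_otps.get(email)
    if expected is not None and secrets.compare_digest(expected, otp):
        app.verified.add(email)
        del app.pending_otps[email]
        return True
    return False


# An outsider who knows only the app_id (e.g. from the URL) ends up verified.
code = register("abc123", "attacker@example.com", "hunter2")
ok = verify_otp("abc123", "attacker@example.com", code)
print(ok, APPS["abc123"].private)  # True True
```

Because the OTP is delivered to an inbox the attacker controls, email verification proves only that the attacker owns their own address, not that they belong in the app.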

Once the email address was verified, the attacker could simply log in through the application’s SSO, bypassing its authentication entirely.
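A generic way to close this class of hole, sketched here under the same toy assumptions, is to enforce each app’s access policy on the server at registration time instead of treating possession of ‘app_id’ as authorization. The `invited` allowlist and `RegistrationDenied` exception are hypothetical, and this is a common pattern, not a description of how Wix actually patched Base44.

```python
import secrets
from dataclasses import dataclass, field


class RegistrationDenied(Exception):
    """Raised when the app's access policy forbids self-registration."""


@dataclass
class App:
    """Hypothetical hosted app with an owner-managed invite list."""
    app_id: str
    private: bool
    invited: set = field(default_factory=set)         # hypothetical allowlist
    verified: set = field(default_factory=set)
    pending_otps: dict = field(default_factory=dict)


APPS = {"abc123": App(app_id="abc123", private=True, invited={"alice@corp.example"})}


def register(app_id: str, email: str) -> str:
    """Issues an OTP only if policy allows this email (password handling omitted)."""
    app = APPS.get(app_id)
    if app is None:
        raise RegistrationDenied("unknown app")
    email = email.strip().lower()
    # Knowing app_id proves nothing; authorize against the app's own policy.
    if app.private and email not in app.invited:
        raise RegistrationDenied("self-registration is closed for this private app")
    otp = f"{secrets.randbelow(10**6):06d}"
    app.pending_otps[email] = otp
    return otp


def verify_otp(app_id: str, email: str, otp: str) -> bool:
    """Marks the email as verified if the OTP matches an outstanding one."""
    app = APPS.get(app_id)
    if app is None:
        return False
    email = email.strip().lower()
    expected = app.pending_otps.get(email)
    if expected is not None and secrets.compare_digest(expected, otp):
        app.verified.add(email)
        del app.pending_otps[email]
        return True
    return False


# The outsider is now rejected before any OTP is issued; the invitee still gets in.
try:
    register("abc123", "attacker@example.com")
    attacker_denied = False
except RegistrationDenied:
    attacker_denied = True

alice_ok = verify_otp("abc123", "alice@corp.example",
                      register("abc123", "alice@corp.example"))
print(attacker_denied, alice_ok)  # True True
```

In a real system the same policy check would also belong at login and session issuance, so that an account created through any other path still cannot open a private app.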

The disclosure comes amid broader concerns about the security of large language models (LLMs) and generative AI tools. Recent research has shown that these models can be manipulated through prompt injection attacks into producing unintended or harmful outputs, reinforcing the need for comprehensive security measures in AI-driven platforms.