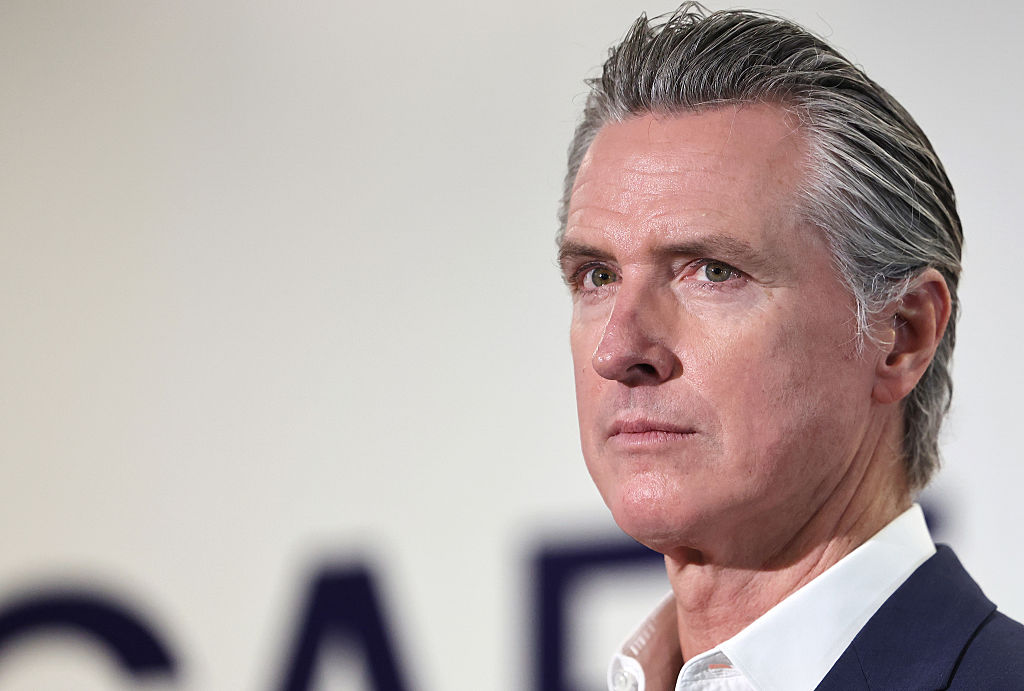

On October 13, 2025, California Governor Gavin Newsom signed Senate Bill 243 (SB 243) into law, making California the first state in the nation to regulate AI companion chatbots. The legislation requires operators of companion chatbots to implement safety protocols designed to protect children and vulnerable users from harms associated with these products.

Background and Legislative Journey

State Senators Steve Padilla and Josh Becker introduced SB 243 in January 2025, and the bill gained momentum after several distressing incidents. The most prominent was the death of teenager Adam Raine, who died by suicide after a long series of conversations with OpenAI's ChatGPT that discussed self-harm. In addition, leaked internal documents reportedly showed that Meta's chatbots were permitted to engage in "romantic" and "sensual" conversations with minors. More recently, a Colorado family sued Character AI after their 13-year-old daughter took her own life following problematic and sexualized interactions with the company's chatbots.

Governor Newsom’s Statement

Governor Newsom emphasized the dual nature of emerging technologies such as chatbots and social media, acknowledging that they can inspire and connect people but can also cause harm if left unregulated. He said: "We've seen some truly horrific and tragic examples of young people harmed by unregulated tech, and we won't stand by while companies continue without necessary limits and accountability. We can continue to lead in AI and technology, but we must do it responsibly—protecting our children every step of the way. Our children's safety is not for sale."

Key Provisions of SB 243

Set to take effect on January 1, 2026, SB 243 introduces several critical requirements for AI chatbot operators:

– Age Verification and Warnings: Companies must implement robust age verification systems and provide clear warnings regarding the use of social media and companion chatbots.

– Penalties for Illegal Deepfakes: The law imposes stringent penalties, up to $250,000 per offense, for those profiting from illegal deepfakes.

– Suicide and Self-Harm Protocols: Operators must establish protocols for addressing suicide and self-harm and share them with the state's Department of Public Health, along with statistics on how often the service provided users with crisis center prevention notifications.

– Transparency in AI Interactions: Platforms must clearly disclose that interactions are artificially generated, and chatbots must not represent themselves as healthcare professionals.

– User Well-being Measures: Companies are mandated to offer break reminders to minors and prevent them from accessing sexually explicit content generated by chatbots.

Industry Response and Compliance Efforts

In anticipation of the law, several companies have begun implementing safeguards for younger users. OpenAI, for instance, has introduced parental controls, content protections, and a self-harm detection system for children using ChatGPT. Replika, which is designed for adults over 18, said it dedicates significant resources to safety through content-filtering systems and guardrails that direct users to trusted crisis resources, and that it is committed to complying with current regulations.

Character AI has stated that its chatbot includes disclaimers indicating that all chats are AI-generated and fictionalized. A Character AI spokesperson said the company welcomes working with regulators and lawmakers as they develop rules for this emerging space and will comply with applicable laws, including SB 243.

Legislative Perspectives

Senator Padilla highlighted the bill as a crucial step toward establishing guardrails for powerful technologies, emphasizing the need for swift action to protect vulnerable populations. He expressed hope that other states would recognize the risks and take similar actions, noting the federal government’s inaction in this area.

Broader Context of AI Regulation in California

SB 243 is part of a broader initiative by California to regulate artificial intelligence. On September 29, 2025, Governor Newsom signed SB 53 into law, establishing new transparency requirements for large AI companies. This legislation mandates that major AI labs, such as OpenAI, Anthropic, Meta, and Google DeepMind, disclose their safety protocols and provides whistleblower protections for employees.

Other states, including Illinois, Nevada, and Utah, have enacted laws to restrict or ban the use of AI chatbots as substitutes for licensed mental health care, reflecting a growing national concern over the unregulated use of AI in sensitive areas.

Conclusion

California’s enactment of SB 243 represents a significant milestone in the regulation of AI technologies, particularly those interacting with vulnerable populations. By setting comprehensive safety standards and holding companies accountable, the state aims to mitigate the risks associated with AI companion chatbots while fostering responsible innovation in the tech industry.