Apple is exploring new ways for users to interact with its devices, with a particular focus on the Apple Vision Pro headset. A recently disclosed patent application titled “Electronic Device With Dictation Structure” outlines a system in which the Vision Pro could interpret user commands by analyzing mouth movements, effectively enabling silent dictation through lip reading.

This development builds upon existing features in Apple’s ecosystem. For instance, the AirPods Pro currently allow users to manage calls through head gestures: nodding to accept and shaking to decline. This functionality is particularly useful in situations where verbal communication is impractical. The patent application extends the concept by describing how the Vision Pro could recognize and process commands without the need for audible speech.

The patent application highlights scenarios where audible dictation may be inconvenient, such as in public spaces requiring discretion or in environments with significant background noise that could interfere with voice recognition. By leveraging lip reading, the Vision Pro could offer a more discreet and reliable method for input.

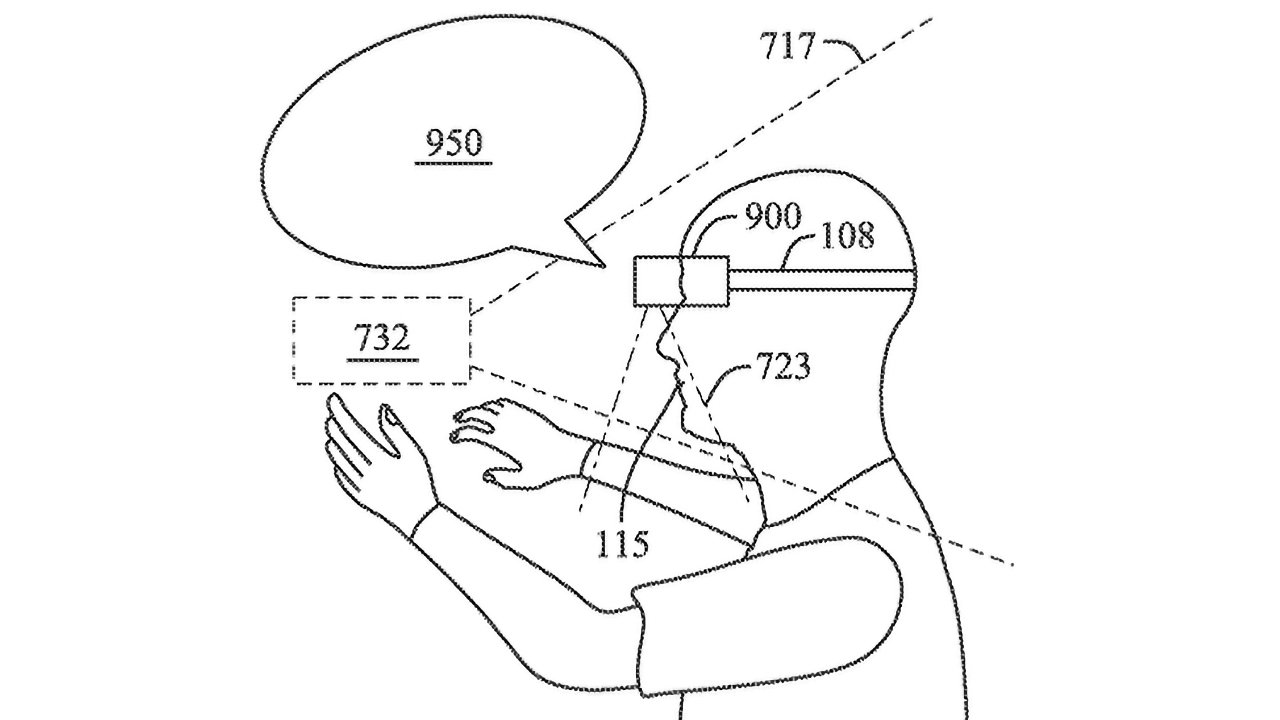

To achieve this, Apple proposes integrating various sensors into the Vision Pro headset:

– Vision Sensors: Positioned on the display frame and oriented downward to detect mouth movements.

– Facial Vibration Sensors: Designed to detect vibrations or deformations in the facial area associated with speech.

– Internal-Facing Cameras: Utilized to track eye movements, enabling input selection based on gaze.

– External-Facing Cameras: Capable of recognizing hand gestures that confirm or modify input selections.

This multi-sensor approach is intended to provide redundancy and improve accuracy in interpreting user commands. For example, a user could initiate dictation with a specific hand gesture, and the headset would then interpret subsequent mouth movements as text input.
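The gesture-then-dictate flow described above can be pictured as a small state machine. The following Python sketch is purely illustrative and is not taken from the patent application: the `Event` and `SilentDictation` names, the `"pinch"` and `"open_palm"` gestures, and the `decode` callback (a stand-in for whatever lip-reading model a real system would use) are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Event:
    """Hypothetical sensor event; the field names are assumptions."""
    kind: str      # "gesture" or "mouth_frame"
    payload: str   # gesture name, or a stand-in for mouth-movement data


@dataclass
class SilentDictation:
    """Interprets mouth movements only while a gesture has enabled dictation."""
    decode: Callable[[str], str]      # placeholder for a lip-reading model
    start_gesture: str = "pinch"      # assumed gesture names
    stop_gesture: str = "open_palm"
    active: bool = False
    words: List[str] = field(default_factory=list)

    def handle(self, event: Event) -> None:
        if event.kind == "gesture":
            if event.payload == self.start_gesture:
                self.active = True
            elif event.payload == self.stop_gesture:
                self.active = False
        elif event.kind == "mouth_frame" and self.active:
            # Mouth movements outside an active session are ignored.
            self.words.append(self.decode(event.payload))

    def text(self) -> str:
        return " ".join(self.words)


def run_demo() -> str:
    # The lambda stands in for a real decoder; it just upper-cases the payload.
    session = SilentDictation(decode=lambda frame: frame.upper())
    events = [
        Event("mouth_frame", "ignored"),       # before the start gesture
        Event("gesture", "pinch"),
        Event("mouth_frame", "hello"),
        Event("mouth_frame", "world"),
        Event("gesture", "open_palm"),
        Event("mouth_frame", "also ignored"),  # after the stop gesture
    ]
    for event in events:
        session.handle(event)
    return session.text()
```

Gating the decoder behind an explicit gesture is one way such a system might avoid treating ordinary talking, chewing, or yawning as input.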

According to the application, training the system would involve collecting audio recordings at various volumes and visual data from different angles to build a model of the user’s speech patterns and mouth movements. This personalized training is meant to improve the system’s ability to interpret silent commands accurately.
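As a rough illustration of what "various volumes" and "different angles" could mean in practice, the sketch below checks which volume and angle combinations a set of training samples has not yet covered. The `Sample` type, the `missing_conditions` helper, and the specific labels are hypothetical; the patent application does not specify a data format.

```python
from dataclasses import dataclass
from itertools import product
from typing import Iterable, List, Sequence, Tuple


@dataclass(frozen=True)
class Sample:
    """One hypothetical training capture; the labels are assumptions."""
    volume: str   # e.g. "silent", "whisper", "normal"
    angle: str    # e.g. "front", "below"


def missing_conditions(
    samples: Iterable[Sample],
    volumes: Sequence[str],
    angles: Sequence[str],
) -> List[Tuple[str, str]]:
    """Return the (volume, angle) pairs that no sample covers yet."""
    seen = {(s.volume, s.angle) for s in samples}
    return [pair for pair in product(volumes, angles) if pair not in seen]


captured = [Sample("silent", "front"), Sample("whisper", "below")]
gaps = missing_conditions(captured, ["silent", "whisper"], ["front", "below"])
```

Here `gaps` lists the two combinations still to be recorded, `("silent", "below")` and `("whisper", "front")`, which is the kind of bookkeeping a per-user enrollment step might need.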

The patent application is credited to Paul X. Wang, a prolific inventor with numerous contributions to Apple’s technological advancements, including developments related to the Vision Pro.

This initiative aligns with Apple’s broader efforts to enhance accessibility and user experience. For instance, the company has announced upcoming features for the Vision Pro, such as Live Captions, which will provide real-time transcriptions of spoken words, benefiting users who are deaf or hard of hearing. Additionally, the Vision Pro’s advanced camera system will support features like Zoom, allowing users to magnify their surroundings for better visibility.

In the realm of voice recognition, Apple has been investigating the use of motion sensors, such as accelerometers and gyroscopes, to detect mouth, face, head, and neck movements associated with speech. This approach aims to trigger voice assistants like Siri without relying on microphones, thereby conserving battery life and enhancing privacy.

While these developments are still in the research phase, they underscore Apple’s commitment to integrating advanced technologies to create more intuitive and accessible user interfaces.