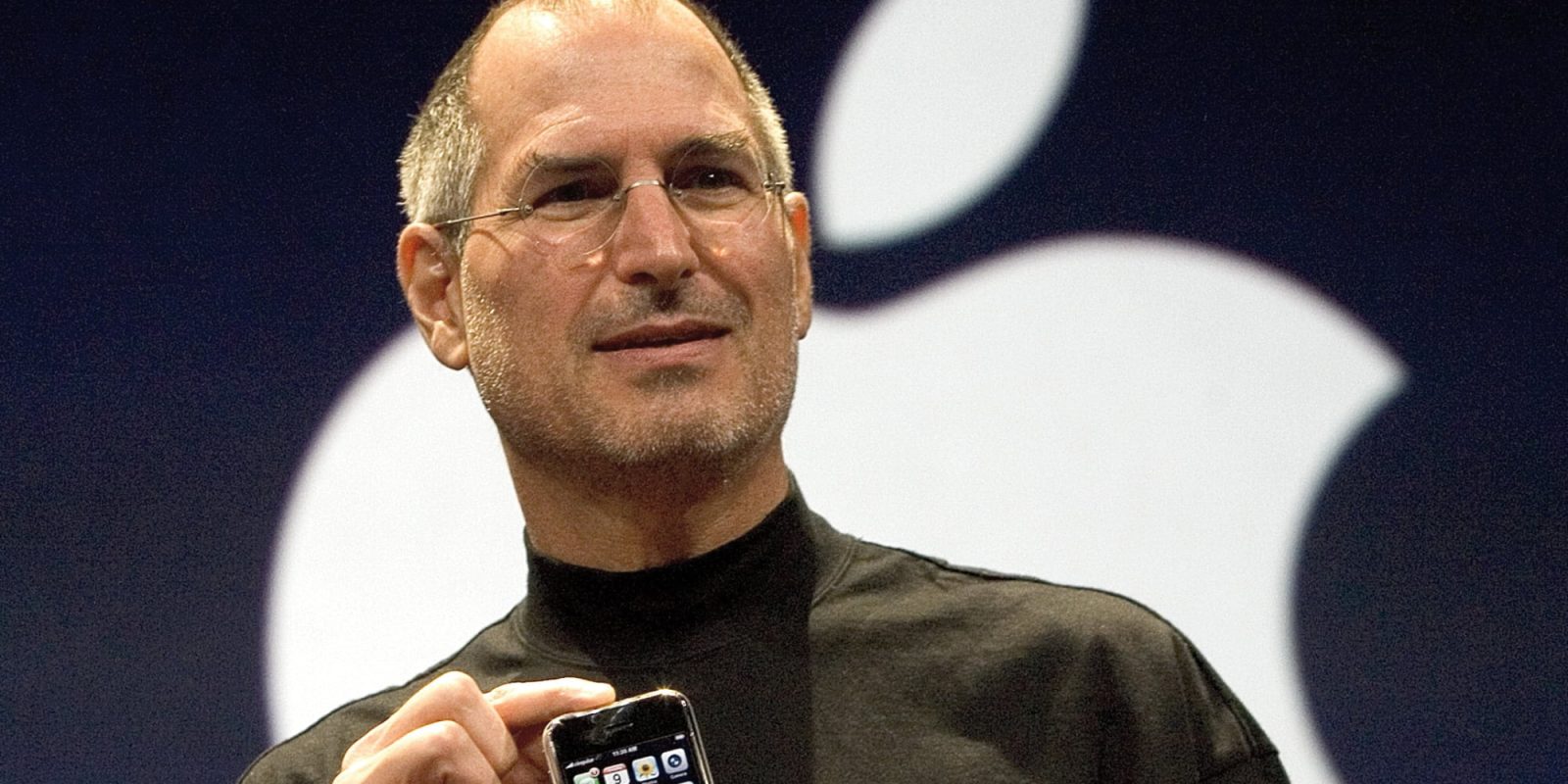

Apple has recently unveiled an approach to training its artificial intelligence (AI) models that aims to improve personalization while preserving user privacy. The work is part of Apple Intelligence, the company’s broader AI system, which is intended to deliver more intuitive and context-aware experiences across Apple devices.

The Challenge of Synthetic Data in AI Training

Traditionally, Apple has relied on synthetic data to train its AI models. Synthetic data is generated artificially rather than collected from real-world interactions, so it avoids the use of actual user data. However, synthetic data alone makes it hard to capture nuanced usage trends for features such as summarization and Writing Tools, which operate on longer sentences or entire email messages. Synthetic messages may lack the complexity and variability of genuine user communications, which can make the resulting models less effective in real-world use.

Introducing a Privacy-Preserving Training Technique

To address these challenges, Apple has developed a technique that compares its synthetic data, on the user’s device, against a small sample of recent user emails to learn which synthetic messages best reflect real usage, while maintaining strict privacy safeguards. The approach involves several key steps:

1. Generation of Synthetic Messages: Apple creates a diverse set of synthetic emails covering various topics. For example, a synthetic message might read: “Would you like to play tennis tomorrow at 11:30 AM?”

2. Embedding Creation: Each synthetic message is transformed into an embedding—a numerical representation capturing essential attributes such as language, topic, and length.

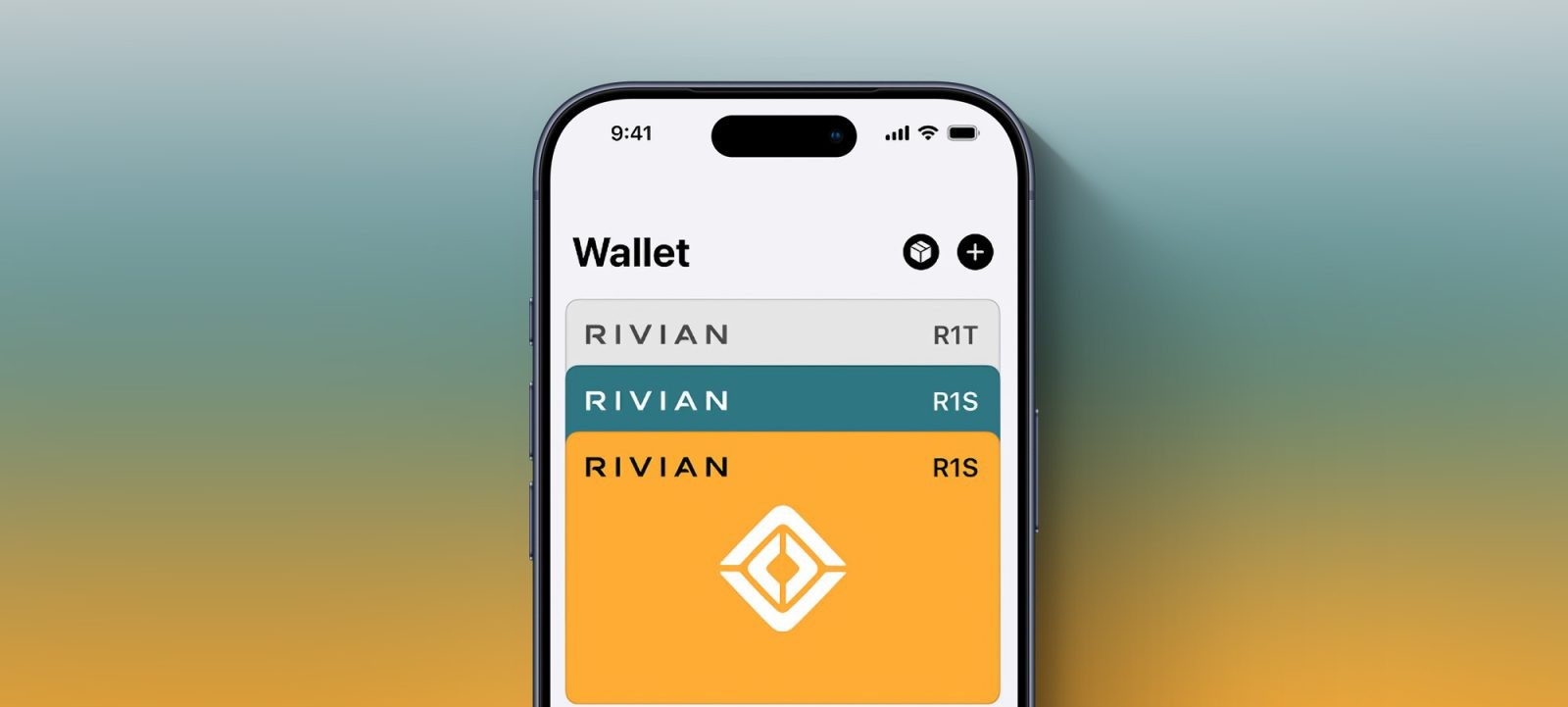

3. On-Device Comparison: These embeddings are sent to a select number of user devices that have opted into Device Analytics. Each participating device then selects a small sample of recent user emails and computes their embeddings.

4. Privacy-Preserving Selection: Each device determines which synthetic embeddings most closely match the embeddings of its sampled emails. The device reports its selection using differential privacy, a technique that adds statistical noise so that individual users cannot be identified. Aggregating these noisy reports across all participating devices, Apple learns which synthetic embeddings are selected most often, without the emails ever leaving the device and without being able to reliably tell which embedding any particular device chose.

5. Dataset Refinement: The most frequently selected synthetic embeddings are then used to generate training or testing data, and additional curation steps may further refine the dataset. For instance, if a message about playing tennis is frequently selected, similar messages about other sports, such as soccer, can be generated to increase the dataset’s diversity.
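The selection and aggregation steps above can be illustrated with a small Python simulation. It is a toy sketch under loudly stated assumptions: `embed` is a hypothetical hashed bag-of-words stand-in for a real embedding model, each simulated device samples a single email from a made-up list, and the privacy step uses k-ary randomized response, a standard local differential privacy mechanism chosen here for illustration. The article does not say which mechanism, embedding model, or privacy parameter Apple uses, and none of the function names below are Apple APIs.

```python
import hashlib
import math
import random
from collections import Counter

DIM = 256  # hypothetical embedding size for this toy example


def embed(text: str) -> list[float]:
    """Hypothetical stand-in for a real embedding model: hash each word into
    one of DIM buckets, count occurrences, then L2-normalize."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        bucket = int(hashlib.sha256(word.encode("utf-8")).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def cosine(a: list[float], b: list[float]) -> float:
    # Vectors from embed() are unit length, so the dot product is the cosine.
    return sum(x * y for x, y in zip(a, b))


def closest_synthetic(email_vecs: list[list[float]],
                      synthetic_vecs: list[list[float]]) -> int:
    """On device: index of the synthetic embedding with the highest total
    similarity to the locally sampled email embeddings."""
    scores = [sum(cosine(e, s) for e in email_vecs) for s in synthetic_vecs]
    return max(range(len(scores)), key=scores.__getitem__)


def randomized_response(true_index: int, k: int, epsilon: float,
                        rng: random.Random) -> int:
    """k-ary randomized response: keep the true index with probability
    p = e^eps / (e^eps + k - 1); otherwise report one of the other k - 1
    indices uniformly at random."""
    p = math.exp(epsilon) / (math.exp(epsilon) + k - 1)
    if rng.random() < p:
        return true_index
    other = rng.randrange(k - 1)  # uniform over the k - 1 wrong answers
    return other if other < true_index else other + 1


def estimate_counts(reports: list[int], k: int, epsilon: float) -> list[float]:
    """Server side: debias the noisy tallies. E[c_i] = n_i*p + (n - n_i)*q,
    so n_i is estimated as (c_i - n*q) / (p - q)."""
    n = len(reports)
    p = math.exp(epsilon) / (math.exp(epsilon) + k - 1)
    q = (1 - p) / (k - 1)
    observed = Counter(reports)
    return [(observed[i] - n * q) / (p - q) for i in range(k)]


# Step 1: synthetic messages (the first is the article's example).
synthetic = [
    "Would you like to play tennis tomorrow at 11:30 AM?",
    "Your quarterly invoice is attached",
    "Happy birthday! Hope you have a great day",
]
synthetic_vecs = [embed(s) for s in synthetic]  # Step 2: embeddings

# Hypothetical private emails; each simulated device samples one of them.
emails = [
    "want to play tennis tomorrow",
    "tennis at 11:30 tomorrow?",
    "invoice attached for the quarter",
]
email_vecs = [embed(e) for e in emails]

rng = random.Random(0)
EPSILON = 1.0
reports = []
for _ in range(5000):  # simulated opted-in devices
    local_sample = [rng.choice(email_vecs)]                    # Step 3: sample
    choice = closest_synthetic(local_sample, synthetic_vecs)  # Step 4: on device
    reports.append(randomized_response(choice, len(synthetic), EPSILON, rng))

estimates = estimate_counts(reports, len(synthetic), EPSILON)  # Step 4: server
top = max(range(len(synthetic)), key=estimates.__getitem__)
print(f"most frequently selected: {synthetic[top]!r}")
```

In this simulation only a noisy index leaves each device: the server never sees email text or even a device’s true choice, yet the debiased counts still single out the most commonly matched synthetic message. Step 5, generating related messages such as ones about soccer from that winning message, is not modeled here.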

Differential Privacy: A Cornerstone of Apple’s Approach

Differential privacy is central to this methodology. By adding calibrated randomness to what each device reports, it places a mathematical bound on how much any single user’s data can influence what Apple observes, so a report cannot be reliably traced back to an individual. Apple can therefore discern overall patterns and trends while the privacy of individual users remains intact. This approach is consistent with Apple’s longstanding emphasis on user privacy and distinguishes it from other tech companies that may use user data more directly in AI training.
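The guarantee can be stated compactly. A mechanism M running on a single device is ε-locally differentially private if, for any two inputs x and x′ the device might hold and any output y, the probability of reporting y changes by at most a factor of e^ε. As an illustration only (the article does not say which mechanism or value of ε Apple uses), k-ary randomized response over k candidate synthetic embeddings meets this bound exactly, and the server can still recover unbiased frequency estimates from the noisy counts:

```latex
\begin{align*}
&\Pr[\mathcal{M}(x) = y] \le e^{\varepsilon}\,\Pr[\mathcal{M}(x') = y]
  \quad \text{for all inputs } x, x' \text{ and outputs } y \\[6pt]
&\text{$k$-ary randomized response:}\quad
\Pr[\mathcal{M}(x) = y] =
\begin{cases}
  p = \dfrac{e^{\varepsilon}}{e^{\varepsilon} + k - 1}, & y = x,\\[8pt]
  q = \dfrac{1}{e^{\varepsilon} + k - 1}, & y \neq x,
\end{cases} \\[6pt]
&\max_{x,\,x',\,y} \frac{\Pr[\mathcal{M}(x) = y]}{\Pr[\mathcal{M}(x') = y]}
  = \frac{p}{q} = e^{\varepsilon} \\[6pt]
&\text{Aggregation over } n \text{ reports with observed counts } c_i:\quad
\mathbb{E}[c_i] = n_i\,p + (n - n_i)\,q
\;\Longrightarrow\;
\hat{n}_i = \frac{c_i - n\,q}{p - q}
\end{align*}
```

A smaller ε means more noise and stronger privacy. Because p − q = (e^ε − 1)/(e^ε + k − 1) shrinks toward zero as ε decreases, the estimator amplifies noise more, which is why this kind of aggregation needs reports from many devices before the estimated frequencies become reliable.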

Implications for Apple Intelligence and User Experience

The integration of this privacy-preserving training technique into Apple Intelligence has several significant implications:

– Enhanced Personalization: By learning common themes and language structures in user communications, Apple can fine-tune its AI models to produce more accurate and contextually relevant output. This could improve features such as email summarization, predictive text, and virtual assistants.

– Privacy Assurance: Users can benefit from advanced AI capabilities without giving up their personal data. The sampled emails are processed only on the device and are never sent to or stored by Apple, which reinforces trust in the company’s data handling practices.

– Efficient On-Device Processing: Because the comparison with user emails runs on the device, only a noisy, differentially private signal about the closest synthetic embedding is transmitted, never the emails themselves. This minimizes data transmission and reduces reliance on cloud-based computation, further safeguarding user information.

Broader Context: Apple’s AI Strategy

Apple’s emphasis on on-device processing in its AI training pipeline reflects a broader strategy to differentiate itself in the competitive AI landscape. While companies like OpenAI and Google have made significant strides in cloud-based AI, Apple’s focus on on-device processing aims to offer a distinctive blend of personalization and privacy. This strategy is evident in several initiatives:

– Partnerships with AI Leaders: Apple has partnered with OpenAI to integrate ChatGPT into Siri and Writing Tools, extending what Siri can do. The partnership reflects Apple’s aim to stay at the forefront of AI while maintaining user privacy.

– Hardware Optimization: Apple’s control over both hardware and software allows tight integration of AI features. Apple silicon includes a dedicated Neural Engine for machine-learning workloads, enabling sophisticated on-device processing without compromising performance.

– User-Centric Design: Apple’s AI developments are guided by a user-centric philosophy, ensuring that new features enhance user experience without introducing unnecessary complexity or privacy concerns.

Conclusion

Apple’s approach to AI training, which combines on-device processing with differential privacy, shows that models can be improved without sacrificing user trust. By balancing personalization with privacy, Apple offers a model for responsible AI development in the tech industry. As Apple Intelligence continues to evolve, users can expect more intuitive and secure interactions with their devices.