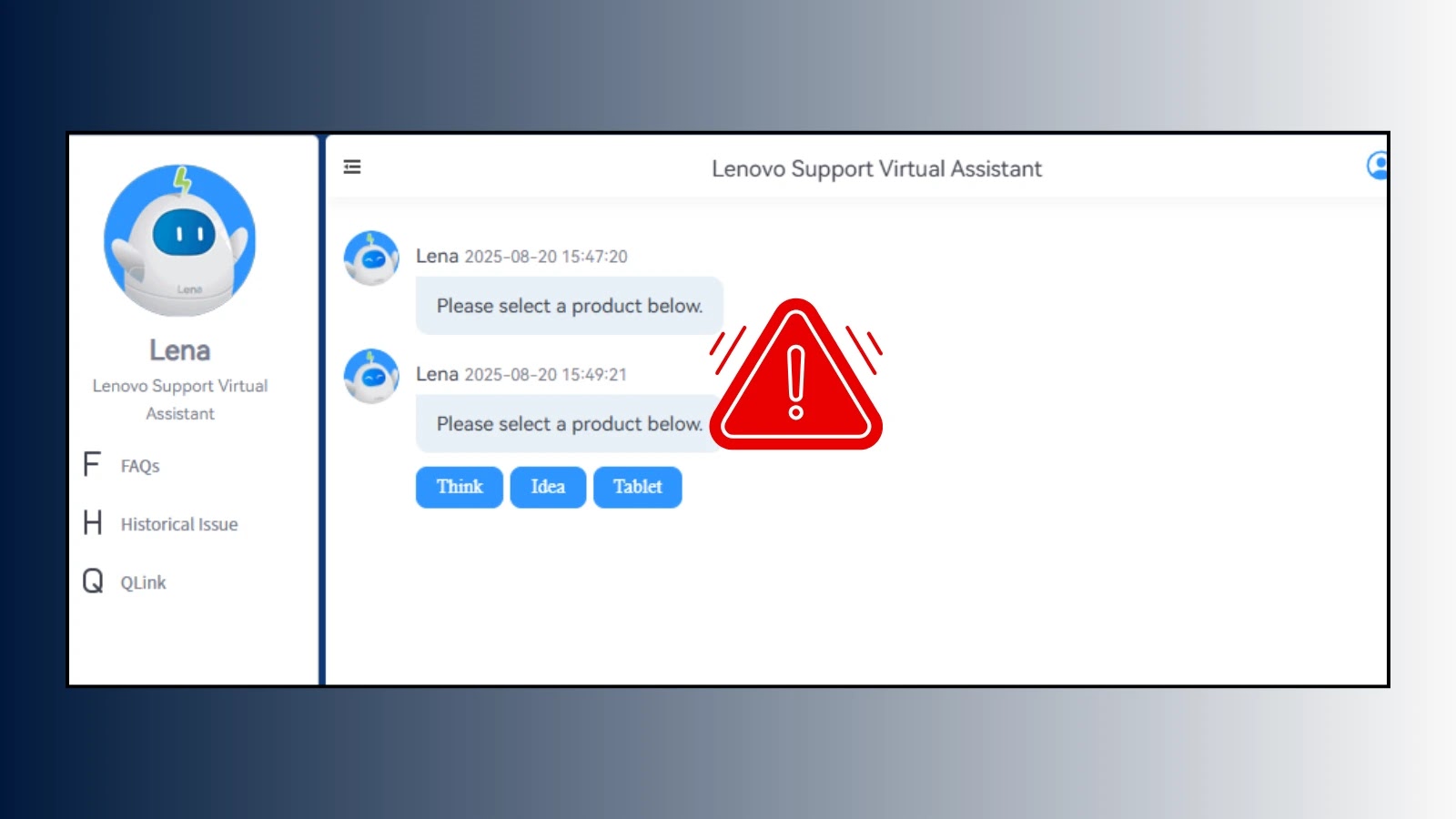

A critical security flaw has been identified in Lenovo’s AI chatbot, Lena, that allows attackers to execute malicious scripts on corporate machines through simple prompt manipulation. The flaw stems from Cross-Site Scripting (XSS) weaknesses in the chatbot’s implementation, potentially exposing customer support systems and enabling unauthorized access to sensitive corporate data.

Key Takeaways:

1. A single malicious prompt can trick Lenovo’s AI chatbot into generating XSS code.

2. The attack is triggered when a support agent views the poisoned conversation, which can compromise corporate systems.

3. This incident underscores the necessity for strict input and output validation in all AI chatbot implementations.

Single Prompt Exploits:

Cybersecurity researchers have demonstrated that a 400-character prompt can exploit this vulnerability by combining seemingly innocent product inquiries with malicious HTML injection techniques. The crafted payload instructs Lena, powered by OpenAI’s GPT-4, to generate HTML responses containing embedded JavaScript code.

The exploit works by directing the chatbot to format its response in HTML and to embed malicious `<img>` tags whose nonexistent sources trigger `onerror` events. When the browser renders that HTML, the image fails to load and the handler runs JavaScript that exfiltrates session cookies to attacker-controlled servers.
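To illustrate the pattern described above, the following is a minimal sketch of a check that flags script-capable markup in a chatbot reply before it is rendered. It is not Lenovo's code or the researchers' tooling: the class `ActiveContentDetector`, the function `find_active_content`, and the sample reply are hypothetical names chosen for this example, and the payload is a harmless placeholder.

```python
from html.parser import HTMLParser


class ActiveContentDetector(HTMLParser):
    """Collects tags and attributes that can run script when rendered.

    Hypothetical helper for illustration; a detector like this is a
    tripwire for logging or blocking, not a complete sanitizer.
    """

    def __init__(self) -> None:
        super().__init__()
        self.findings: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names before calling us.
        if tag == "script":
            self.findings.append("<script> tag")
        for name, value in attrs:
            if name.startswith("on"):
                # Event handlers such as onerror or onload run inline script.
                self.findings.append(f"{name} handler on <{tag}>")
            elif name in ("src", "href") and value:
                if value.strip().lower().startswith("javascript:"):
                    self.findings.append(f"javascript: URL on <{tag}>")


def find_active_content(html_text: str) -> list[str]:
    """Return a description of each script-capable construct found."""
    detector = ActiveContentDetector()
    detector.feed(html_text)
    detector.close()
    return detector.findings


if __name__ == "__main__":
    # Placeholder reply: an image with a nonexistent source and an onerror handler.
    reply = '<p>Product specs</p><img src="x" onerror="alert(1)">'
    print(find_active_content(reply))
```

A reply that produces any findings could be rejected or logged before it reaches a customer's or an agent's browser. Detection of this kind complements escaping and a Content Security Policy; it does not replace them.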

This attack chain reveals multiple security failures: inadequate input sanitization, improper output validation, and insufficient Content Security Policy (CSP) implementation.

The vulnerability becomes particularly dangerous when a customer requests a human support agent: the malicious code then executes in the agent’s browser, potentially compromising the agent’s authenticated session and granting attackers access to the customer support platform.

Broader Implications:

The Lenovo incident exposes fundamental weaknesses in how organizations implement AI chatbot security controls. Beyond cookie theft, the same injection point could let attackers capture keystrokes, manipulate the support interface or display malicious pop-ups, redirect support agents to credential-harvesting websites, and potentially move laterally within corporate networks.

Security experts emphasize that this vulnerability pattern extends beyond Lenovo, affecting any AI system lacking robust input and output sanitization.

Mitigations:

To address this issue, organizations should implement strict allowlisting of permitted characters, aggressive output sanitization, proper CSP headers, and context-aware content validation. Adopting a “never trust, always verify” approach to all AI-generated content is essential: chatbot output should be treated as potentially malicious until proven safe.
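Two of these mitigations can be sketched in a few lines of Python: escaping chatbot output so the browser treats it as text, and sending a restrictive CSP header as a second layer. This is a minimal illustration under stated assumptions, not a complete defense: `render_chatbot_reply`, `security_headers`, and the policy string in `CSP_POLICY` are hypothetical, and a real deployment would tune the policy to its own assets.

```python
import html

# Hypothetical policy for illustration. Because script-src lacks
# 'unsafe-inline', browsers enforcing it refuse inline event handlers
# such as onerror, and img-src 'self' blocks image loads from other origins.
CSP_POLICY = (
    "default-src 'self'; "
    "script-src 'self'; "
    "img-src 'self'; "
    "object-src 'none'; "
    "base-uri 'none'"
)


def render_chatbot_reply(reply: str) -> str:
    """Escape model output so it is displayed as text, never parsed as HTML."""
    return html.escape(reply, quote=True)


def security_headers() -> dict[str, str]:
    """Headers to attach to every page that displays chatbot content."""
    return {"Content-Security-Policy": CSP_POLICY}


if __name__ == "__main__":
    # Placeholder payload in the style described in this article.
    untrusted = '<img src="x" onerror="alert(1)">'
    print(render_chatbot_reply(untrusted))
    print(security_headers())
```

Escaping everything is the simplest safe default. If the chat interface genuinely needs rich formatting, an allowlist-based HTML sanitizer is the usual alternative, with the CSP header still in place as a backstop.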

Lenovo has acknowledged the vulnerability and implemented protective measures following responsible disclosure. This incident serves as a critical reminder that as organizations rapidly deploy AI solutions, security implementations must evolve simultaneously to prevent attackers from exploiting the gap between innovation and protection.