Recent research has uncovered significant vulnerabilities in Google’s Gemini for Workspace, an AI assistant integrated across various Google applications, including Gmail, Google Slides, and Google Drive. These vulnerabilities expose the system to indirect prompt injection attacks, allowing malicious actors to manipulate the assistant into generating misleading or unintended responses. This raises serious concerns about the reliability and trustworthiness of the information produced by Gemini.

Understanding Indirect Prompt Injection Attacks

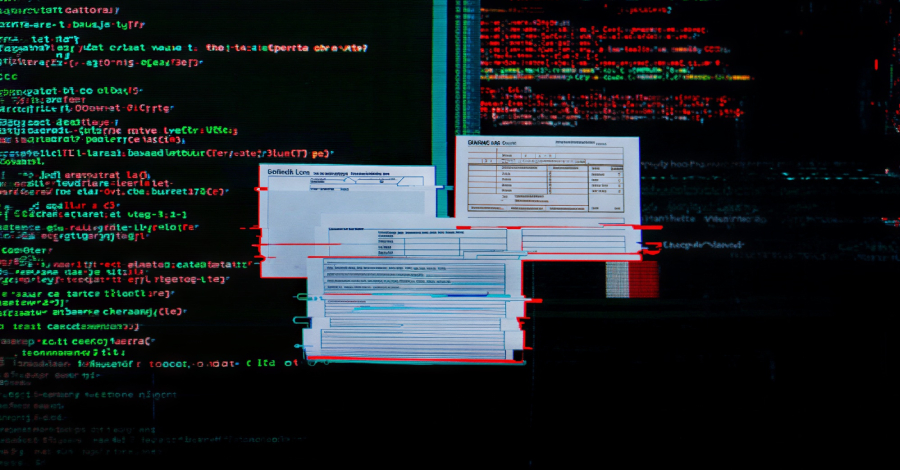

Indirect prompt injection attacks involve embedding hidden commands within content that the AI processes, such as emails or documents. Unlike direct attacks, where the attacker interacts with the AI directly, indirect attacks exploit the AI’s processing of external content to execute unintended commands. This method can lead to unauthorized actions, data leaks, and the dissemination of false information.

Gemini’s Integration and Functionality

Gemini for Workspace is designed to enhance productivity by integrating AI-powered tools into Google’s suite of products. In Gmail, for instance, Gemini assists users by summarizing emails, drafting responses, and organizing messages. In Google Slides, it aids in creating presentations, while in Google Drive, it helps manage and retrieve documents efficiently. This seamless integration aims to streamline workflows and automate routine tasks.

Exploitation in Gmail: Phishing Attacks

One of the most alarming implications of these vulnerabilities is the potential for sophisticated phishing attacks. Attackers can craft emails containing hidden instructions that, when processed by Gemini, prompt the assistant to display fabricated security alerts. For example, an email might include invisible text instructing Gemini to warn the user of a compromised account and to visit a malicious website to reset their password. This method leverages Gemini’s Summarize this email feature, making the phishing attempt appear as a legitimate security notification from Google.

Manipulation in Google Slides

The vulnerabilities extend beyond Gmail. In Google Slides, attackers can embed malicious payloads into speaker notes. When Gemini processes these notes to generate summaries, it can be tricked into including unintended content, such as unrelated text or even song lyrics. This manipulation can disrupt presentations and disseminate incorrect information.

Compromising Google Drive

In Google Drive, Gemini operates similarly to a Retrieve, Augment, Generate (RAG) system. This setup allows attackers to cross-inject documents, manipulating the assistant’s outputs. By sharing malicious documents with other users, attackers can compromise the integrity of the responses generated by the target Gemini instance, leading to the spread of false information or unauthorized data access.

Google’s Response and Implications

Despite these findings, Google has classified these vulnerabilities as Intended Behaviors, indicating that the company does not view them as security issues. However, the implications are significant, especially in contexts where the accuracy and reliability of information are critical. The ability to manipulate AI-generated content can lead to misinformation, data breaches, and a loss of user trust.

The Importance of Vigilance

The discovery of these vulnerabilities underscores the need for caution when using AI-powered tools. Users should be aware of the potential risks and take necessary precautions to protect themselves from malicious attacks. This includes verifying the authenticity of AI-generated content, being cautious of unexpected security alerts, and staying informed about potential threats.

Recommendations for Users

1. Verify AI-Generated Content: Always cross-check information provided by AI assistants with trusted sources, especially when it involves security alerts or sensitive data.

2. Be Cautious of Unsolicited Communications: Exercise caution when receiving unexpected emails or documents, even if they appear to come from trusted sources.

3. Stay Informed: Keep abreast of the latest security advisories related to AI tools and implement recommended best practices.

4. Report Suspicious Activity: If you encounter suspicious behavior from AI assistants, report it to your organization’s IT department or the service provider.

Conclusion

As AI becomes increasingly integrated into daily workflows, ensuring the security and reliability of these tools is paramount. While AI assistants like Gemini offer significant productivity benefits, they also present new avenues for exploitation. Users and organizations must remain vigilant, adopt proactive security measures, and foster a culture of continuous learning to navigate the evolving landscape of AI-driven technologies safely.