Anthropic Unveils Claude Code Security: AI-Driven Vulnerability Detection and Patching

Anthropic has introduced Claude Code Security, an AI-powered feature that scans codebases for vulnerabilities and recommends targeted patches. Currently in a limited research preview for Enterprise and Team customers, the tool aims to improve the detection and remediation of security issues that traditional methods might overlook.

When Claude Code Security finds a potential flaw, it suggests a targeted patch for human review, so development teams can address vulnerabilities more quickly. This matters at a time when threat actors increasingly use AI to automate the discovery of exploitable weaknesses.

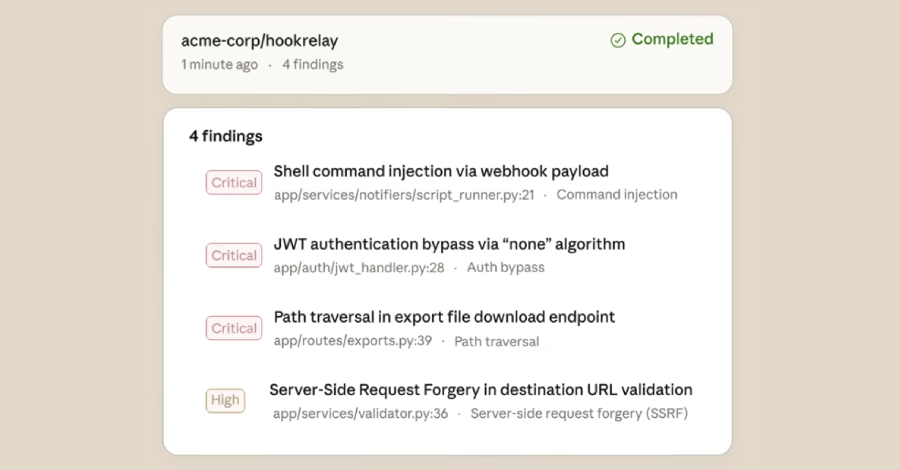

Unlike conventional static analysis tools that rely on predefined patterns, Claude Code Security is designed to reason about code the way a human security researcher would. It examines how components interact, traces data flows through the application, and flags vulnerabilities that rule-based tools might miss. Each finding goes through a multi-stage verification process to reduce false positives and is assigned a severity rating so teams can prioritize the most critical issues.
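To make the contrast between pattern matching and data-flow tracing concrete, here is a minimal sketch in Python. It is a toy illustration only and says nothing about how Claude Code Security works internally. The snippet under review, the choice of `request` as the untrusted source, and `execute` as the sensitive sink are all assumptions made for the example, and the tracer handles only straight-line assignments.

```python
import ast
import re

# Hypothetical code under review: user input reaches the SQL call only
# indirectly, through two intermediate variables.
SNIPPET = '''name = request.args["name"]
query = "SELECT * FROM users WHERE name = '" + name + "'"
cursor.execute(query)
'''

# A pattern rule that fires only when the source appears inside the sink call.
DIRECT_RULE = re.compile(r"execute\(.*request")


def pattern_scan(code):
    """Return line numbers where the fixed pattern matches."""
    return [i for i, line in enumerate(code.splitlines(), 1)
            if DIRECT_RULE.search(line)]


def names_in(node):
    """Collect every variable name that appears anywhere under an AST node."""
    return {n.id for n in ast.walk(node) if isinstance(n, ast.Name)}


def taint_scan(code, sources=frozenset({"request"}), sink="execute"):
    """Propagate taint through top-level assignments and flag tainted sinks."""
    tainted = set(sources)
    findings = []
    for stmt in ast.parse(code).body:
        # An assignment whose right-hand side mentions a tainted name
        # taints every name on its left-hand side.
        if isinstance(stmt, ast.Assign) and names_in(stmt.value) & tainted:
            for target in stmt.targets:
                tainted |= names_in(target)
        # A call to the sink method with a tainted argument is a finding.
        for node in ast.walk(stmt):
            if (isinstance(node, ast.Call)
                    and isinstance(node.func, ast.Attribute)
                    and node.func.attr == sink
                    and any(names_in(arg) & tainted for arg in node.args)):
                findings.append(stmt.lineno)
    return findings


print("pattern rule:", pattern_scan(SNIPPET))
print("data-flow trace:", taint_scan(SNIPPET))
```

On this snippet the pattern rule reports nothing, because the untrusted value never appears literally inside the `execute` call, while the data-flow trace flags line 3. Real analyzers also have to handle branches, function calls, and sanitizers, which this sketch deliberately ignores.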

Results appear in the Claude Code Security dashboard, where developers can review each identified issue alongside its suggested patch. The system keeps a human in the loop: no change is applied without developer approval. Claude also attaches a confidence rating to each finding, since some issues cannot be fully assessed from source code alone.
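The review workflow described above can be sketched as a small triage routine. This is an assumption-laden illustration, not the product's data model or API: the `Finding` fields, the four severity labels, and the 0-to-1 confidence scale are all hypothetical. It shows only the two ideas in the text, ordering findings by severity and confidence, and releasing a patch only after explicit approval.

```python
from dataclasses import dataclass

# Hypothetical severity labels; lower rank means more urgent.
SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3}


@dataclass
class Finding:
    title: str
    severity: str          # one of the SEVERITY_RANK keys (assumed labels)
    confidence: float      # assumed scale: 0.0 (unsure) to 1.0 (certain)
    patch: str             # suggested fix, shown to the reviewer
    approved: bool = False  # set only by a human reviewer


def triage(findings):
    """Order findings by severity first, then by descending confidence."""
    return sorted(findings,
                  key=lambda f: (SEVERITY_RANK[f.severity], -f.confidence))


def patches_to_apply(findings):
    """Return only the patches a developer has explicitly approved."""
    return [f.patch for f in findings if f.approved]


findings = [
    Finding("Verbose error message", "low", 0.9, "patch-a"),
    Finding("SQL injection in search", "critical", 0.8, "patch-b"),
    Finding("Weak hash for tokens", "high", 0.6, "patch-c"),
]

queue = triage(findings)
queue[0].approved = True  # a reviewer signs off on the top finding only

print([f.title for f in queue])
print(patches_to_apply(queue))
```

Keeping `approved` false by default means nothing is applied unless a reviewer acts, which mirrors the human-in-the-loop behavior the article describes.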

This initiative reflects Anthropic’s commitment to using AI to strengthen cybersecurity defenses and to give development teams better tools for keeping their software secure.