Unveiling the Hidden Dangers of AI-Generated Passwords

Artificial intelligence (AI) now sits inside many everyday tools, and one increasingly common use is asking large language models (LLMs) to generate passwords. At first glance, these AI-generated passwords look robust: long, mixed-case, and full of digits and symbols. Recent research, however, has exposed significant weaknesses in this approach, raising serious concerns about relying on AI for password generation.

The Illusion of Complexity

Consider a password like `G7$kL9#mQ2&xP4!w`. To the untrained eye, it looks like a strong, randomly generated password. Yet this complexity is deceptive. Traditional password-strength meters, which typically score length and character variety, may rate such passwords highly, but they cannot detect the underlying patterns that make them predictable.

Understanding the Core Issue

The fundamental problem lies in how LLMs work. Secure password generators use a cryptographically secure pseudorandom number generator (CSPRNG) to pick each character uniformly and independently from a character set, so every character is equally likely at every position and no position reveals anything about the others. LLMs, by contrast, are trained to predict which token is most likely to come next given the preceding context, and they generate text by sampling from (or, at low temperature, simply taking the top of) that learned distribution. A process built to produce the most plausible continuation is inherently at odds with the unpredictability a password requires.
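For contrast, the uniform selection described above takes only a few lines with Python's standard `secrets` module, which draws from the operating system's CSPRNG. This is a minimal sketch, not the researchers' tooling; the 70-character `ALPHABET` and the `generate_password` helper are illustrative assumptions.

```python
import secrets
import string

# Illustrative alphabet (an assumption): 52 letters + 10 digits + 8 symbols = 70 characters.
ALPHABET = string.ascii_letters + string.digits + "!#$%&*@^"


def generate_password(length: int = 16, alphabet: str = ALPHABET) -> str:
    """Pick each character independently and uniformly via the OS CSPRNG."""
    if length <= 0:
        raise ValueError("length must be positive")
    # secrets.choice gives every character the same probability, 1 / len(alphabet),
    # regardless of which characters were chosen at earlier positions.
    return "".join(secrets.choice(alphabet) for _ in range(length))


if __name__ == "__main__":
    print(generate_password())
```

Nothing about earlier characters influences later ones, so running this 50 times would almost certainly yield 50 distinct passwords.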

Empirical Evidence of Predictability

Researchers tested several prominent LLMs, including the latest versions of GPT, Claude, and Gemini. The findings were alarming:

– Claude Opus 4.6: In 50 independent runs, only 30 unique passwords were generated. Notably, the sequence `G7$kL9#mQ2&xP4!w` appeared 18 times, meaning this single password accounted for 36% of all outputs.

– GPT-5.2: Most generated passwords began with the letter `v`, a predictable pattern.

– Gemini 3 Flash: Passwords consistently started with `K` or `k`, another systematic bias.
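The figures above come from a simple kind of analysis: generate many passwords from the same prompt, then count repeats and leading characters. A minimal sketch of such a tally follows. The `summarize_runs` helper is hypothetical, and the sample list is synthetic data built only to mirror the Claude Opus 4.6 totals (50 runs, 30 unique passwords, one password 18 times), not the researchers' actual outputs.

```python
from collections import Counter


def summarize_runs(samples: list[str]) -> dict:
    """Tally repeats and first characters across repeated generations (hypothetical helper)."""
    if not samples:
        raise ValueError("need at least one sample")
    counts = Counter(samples)
    top_password, top_count = counts.most_common(1)[0]
    return {
        "runs": len(samples),
        "unique": len(counts),
        "top_password": top_password,
        "top_share": top_count / len(samples),  # fraction of runs producing the top password
        "first_chars": Counter(s[0] for s in samples if s),
    }


if __name__ == "__main__":
    # Synthetic stand-in data: 18 copies of one password, 29 distinct fillers,
    # and 3 of those fillers repeated once more (18 + 29 + 3 = 50 runs).
    fillers = [f"filler{i:02d}" for i in range(29)]
    samples = ["G7$kL9#mQ2&xP4!w"] * 18 + fillers + fillers[:3]
    summary = summarize_runs(samples)
    print(summary["runs"], summary["unique"], summary["top_share"])  # 50 30 0.36
```

The `first_chars` counter is the same kind of tally that would expose a bias like GPT-5.2's leading `v` or Gemini's leading `K`/`k`.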

These patterns are not mere anomalies; they represent exploitable weaknesses that adversaries could leverage to compromise systems.

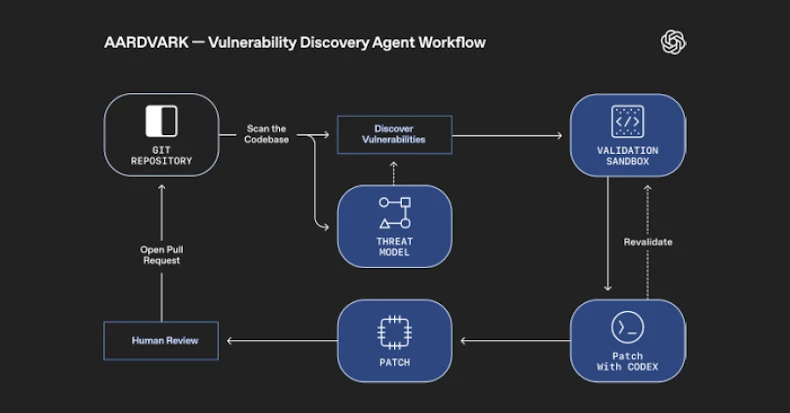

Beyond User-Initiated Requests

The issue extends beyond users who ask an AI for a password directly. Coding agents such as Claude Code, Codex, and Gemini-CLI have been observed writing model-generated passwords into code during software development tasks, sometimes without the developer asking for one. Where code is written and deployed quickly with little review (a practice often called vibe-coding), these weak credentials can slip into production systems and remain there undetected, posing significant security risks.

Quantifying the Weakness

To assess the strength of these passwords, researchers applied the Shannon entropy formula to the log-probabilities the models themselves assign to each token. A securely constructed 16-character password is expected to carry approximately 98 bits of entropy, which makes brute-force attacks infeasible within any realistic timeframe.
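For a password whose characters are drawn uniformly and independently, Shannon entropy reduces to length × log2(alphabet size). The source does not say which alphabet its 98-bit figure assumes; the sketch below shows that an alphabet of about 70 symbols gives roughly 98 bits for 16 characters, while the full set of 94 printable non-space ASCII characters would give about 105.

```python
import math


def uniform_entropy_bits(length: int, alphabet_size: int) -> float:
    """Entropy in bits of `length` characters drawn uniformly from `alphabet_size` symbols."""
    # Each uniform draw contributes log2(alphabet_size) bits; independent draws add up.
    return length * math.log2(alphabet_size)


print(f"{uniform_entropy_bits(16, 70):.1f}")  # 98.1  (letters, digits, 8 symbols)
print(f"{uniform_entropy_bits(16, 94):.1f}")  # 104.9 (all printable ASCII except space)
```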

However, the analysis revealed:

– Claude Opus 4.6: Passwords exhibited an estimated 27 bits of entropy.

– GPT-5.2: 20-character passwords displayed roughly 20 bits of entropy.
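The source does not publish its exact procedure, but one common way to turn per-token log-probabilities into an entropy estimate is to compute the Shannon entropy H = −Σ p·log2 p of the next-token distribution at each position and sum those values along the generated password (the chain rule for entropy). The sketch below assumes each position's distribution is available as a dictionary of natural-log probabilities, as many APIs return for top-k alternatives; the helper names and the toy input are hypothetical.

```python
import math


def position_entropy(logprobs: dict[str, float]) -> float:
    """Shannon entropy in bits of one next-token distribution given as natural-log probabilities."""
    probs = [math.exp(lp) for lp in logprobs.values()]
    total = sum(probs)  # renormalize, since top-k lists usually omit some tail mass
    return -sum((p / total) * math.log2(p / total) for p in probs if p > 0)


def password_entropy(per_position: list[dict[str, float]]) -> float:
    """Sum per-position entropies along one generated password. This is an approximation:
    the exact value would average over every possible prefix, not just the sampled one."""
    return sum(position_entropy(dist) for dist in per_position)


if __name__ == "__main__":
    # Toy input: a confident first token, then a position split evenly four ways.
    toy = [
        {"G": math.log(0.9), "K": math.log(0.1)},
        {"7": math.log(0.25), "9": math.log(0.25), "3": math.log(0.25), "4": math.log(0.25)},
    ]
    print(f"{password_entropy(toy):.2f} bits")  # 2.47 bits
```

A uniform choice from 70 symbols would contribute about 6.1 bits per position; positions where the model is nearly certain contribute well under 1 bit, which is how a 16- or 20-character output can total only 20 to 27 bits.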

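Such low entropy means these passwords could be cracked in seconds with standard computing resources: 27 bits corresponds to only about 134 million candidates (2^27), and 20 bits to about one million (2^20), search spaces that an offline attack against a fast password hash can exhaust almost immediately.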
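The arithmetic behind this claim is short: an attacker testing g guesses per second needs at most 2^H / g seconds to exhaust an H-bit space. The rate below (10^9 guesses per second) is an assumed figure for an offline attack on a fast hash, not a measured one; slow, salted hashes such as bcrypt reduce the rate by orders of magnitude, and online login limits reduce it further.

```python
def exhaust_seconds(entropy_bits: float, guesses_per_second: float) -> float:
    """Worst-case time to try every candidate in a space of 2**entropy_bits passwords."""
    return 2 ** entropy_bits / guesses_per_second


ASSUMED_RATE = 1e9  # guesses per second; an illustrative assumption
SECONDS_PER_YEAR = 365.25 * 24 * 3600

for label, bits in [("GPT-5.2 estimate", 20), ("Claude Opus 4.6 estimate", 27), ("uniform 16-char", 98)]:
    seconds = exhaust_seconds(bits, ASSUMED_RATE)
    print(f"{label:>25}: {seconds:.3g} s ({seconds / SECONDS_PER_YEAR:.3g} years)")
```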

Ineffectiveness of Temperature Adjustments

Adjusting the temperature setting—a parameter that influences the randomness of the model’s output—proved ineffective in mitigating these patterns. Running Claude at its maximum temperature of 1.0 still resulted in repeated patterns, while reducing it to 0.0 caused the same password to be generated consistently.
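One plausible explanation, sketched below under simplifying assumptions: temperature T divides the model's logits before the softmax, so T = 1.0 samples from the model's own learned distribution, which for password-like text is evidently already sharply peaked, and T → 0 collapses to always choosing the single most likely token. With a ceiling of 1.0, temperature cannot flatten that distribution toward uniform. The logits here are invented for illustration.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Token probabilities after dividing logits by `temperature` (0 treated as greedy argmax)."""
    if temperature <= 0:
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    toy_logits = [4.0, 2.0, 1.0, 0.0]  # hypothetical scores for four candidate characters
    for t in (0.0, 0.5, 1.0):
        probs = softmax_with_temperature(toy_logits, t)
        print(t, [round(p, 3) for p in probs])
```

Even at 1.0, the top candidate keeps roughly 83% of the probability in this toy example, whereas a CSPRNG would give each of the four candidates 25%.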

Real-World Implications

Further compounding the issue, researchers discovered that LLM-generated password prefixes like `K7#mP9` and `k9#vL` have appeared in public GitHub repositories and online technical documents. This exposure increases the risk of these predictable patterns being exploited by malicious actors.
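Because these prefixes are public, a quick first-pass check is to search code and configuration files for them. The sketch below uses hypothetical helpers, not a published tool; its marker list contains only the strings named in this article, and a plain substring match will miss variants and may flag unrelated text, so treat hits as leads for review rather than proof.

```python
from pathlib import Path

# Strings named in this article; a real audit would maintain a longer, curated list.
KNOWN_LLM_MARKERS = ("K7#mP9", "k9#vL", "G7$kL9#mQ2&xP4!w")


def find_markers(text: str, markers: tuple[str, ...] = KNOWN_LLM_MARKERS) -> list[tuple[int, str]]:
    """Return (line number, marker) pairs for every line containing a known marker."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for marker in markers:
            if marker in line:
                hits.append((lineno, marker))
    return hits


def scan_tree(root: str) -> None:
    """Print matches for every readable UTF-8 text file under `root`."""
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue  # skip binary or unreadable files
        for lineno, marker in find_markers(text):
            print(f"{path}:{lineno}: contains {marker!r}")


if __name__ == "__main__":
    sample = 'DB_PASSWORD="K7#mP9xQ2!vL"\nDEBUG=false\n'
    print(find_markers(sample))  # [(1, 'K7#mP9')]
```

To audit a checkout, call `scan_tree` with the repository's root directory.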

Recommendations for Enhanced Security

In light of these findings, it is imperative for organizations and developers to take proactive measures:

1. Audit and Rotate Credentials: Conduct comprehensive audits to identify any credentials generated by AI tools or coding agents. Promptly rotate these credentials to mitigate potential vulnerabilities.

2. Implement Cryptographically Secure Methods: Configure development agents to use cryptographically secure sources, such as `openssl rand` or the operating system's `/dev/random` device, for password generation.

3. Review AI-Generated Code: Thoroughly examine all AI-generated code for hardcoded passwords before deployment to prevent inadvertent security lapses.
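For the review step, even a crude pattern match can surface string literals assigned to credential-like names before code ships. The regular expression below is an illustrative heuristic, not a substitute for a dedicated secret scanner; the keyword list, the 8-character minimum, and the `find_hardcoded_secrets` helper are assumptions. Anything it finds should be rotated and replaced with a value from a cryptographically secure source, as in the steps above.

```python
import re

# Heuristic (assumed) pattern: an identifier containing a credential-like word,
# followed by '=' or ':', then a quoted literal of at least 8 characters.
HARDCODED_SECRET = re.compile(
    r"""(\w*(?:password|passwd|pwd|secret|token|api_key)\w*)\s*[:=]\s*(["'])([^"'\n]{8,})\2""",
    re.IGNORECASE,
)


def find_hardcoded_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line number, identifier) for each suspected hardcoded credential."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for match in HARDCODED_SECRET.finditer(line):
            findings.append((lineno, match.group(1)))
    return findings


if __name__ == "__main__":
    snippet = (
        "import os\n"
        'DB_PASSWORD = "G7$kL9#mQ2&xP4!w"\n'
        'API_KEY = os.environ["API_KEY"]\n'
    )
    print(find_hardcoded_secrets(snippet))  # [(2, 'DB_PASSWORD')]
```

Reading the value from the environment, as on the `API_KEY` line, is not flagged; the literal on the `DB_PASSWORD` line is.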

Conclusion

While AI and LLMs offer remarkable capabilities, their application in password generation introduces significant security risks due to inherent predictability and lack of true randomness. Relying on AI-generated passwords without proper safeguards can lead to compromised systems and data breaches. It is crucial for organizations to recognize these limitations and adopt robust, cryptographically secure methods for password creation to ensure the integrity and security of their digital assets.