Optimizing Memory Management: The New Frontier in AI Efficiency

In the rapidly evolving landscape of artificial intelligence (AI), the spotlight has traditionally been on the computational prowess of GPUs, particularly those from industry leader Nvidia. However, a critical yet often overlooked factor in AI performance and cost-effectiveness is emerging: memory management. As hyperscale data centers gear up for substantial expansions, demand for dynamic random-access memory (DRAM) has surged, and DRAM prices have risen sevenfold over the past year.

This surge underscores the escalating importance of efficient memory utilization in AI operations. Effective memory orchestration ensures that data is delivered to processing units precisely when needed, minimizing latency and maximizing throughput. Companies that excel in this domain can execute AI queries using fewer computational resources, thereby reducing operational costs and enhancing competitiveness.

Semiconductor analyst Doug O’Laughlin, in a recent discussion with Val Bercovici, Chief AI Officer at Weka, highlighted the growing complexity of memory management strategies. They pointed to the evolution of Anthropic’s prompt-caching documentation as a case in point. Initially straightforward, the documentation has expanded into a comprehensive guide detailing various caching strategies, including specific time-based tiers for cache retention. Users can opt for 5-minute or 1-hour caching windows, each priced differently. This granularity lets users tailor memory usage to their workload, but it also makes the cache harder to manage effectively.
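To make the tier trade-off concrete, here is a minimal sketch of how one might compare the two caching windows for a given request pattern. Everything in it is an assumption for illustration: the `CacheTier` type, the `prefix_cost` helper, the write and read multipliers, and the rule that a cache hit refreshes the retention window are all hypothetical and should be checked against a provider's current pricing and documentation before being relied on.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CacheTier:
    """A hypothetical caching tier; multipliers are relative to the base input price."""
    name: str
    ttl_seconds: int
    write_multiplier: float  # assumed cost factor for writing the prefix to cache
    read_multiplier: float   # assumed cost factor for reading the prefix from cache


# Illustrative numbers only, not quoted from any price list.
FIVE_MINUTE = CacheTier("5-minute", 300, 1.25, 0.10)
ONE_HOUR = CacheTier("1-hour", 3600, 2.00, 0.10)


def prefix_cost(tier, prefix_tokens, gaps_seconds, base_price_per_token):
    """Cost of reusing one shared prompt prefix across a series of requests.

    The first request always writes the prefix to the cache. Each later
    request arrives `gap` seconds after the previous one: if the gap is
    within the tier's window it is a cache hit (assumed to refresh the
    window), otherwise the entry has expired and must be written again.
    """
    unit = prefix_tokens * base_price_per_token
    cost = unit * tier.write_multiplier
    for gap in gaps_seconds:
        hit = gap <= tier.ttl_seconds
        cost += unit * (tier.read_multiplier if hit else tier.write_multiplier)
    return cost


def uncached_cost(prefix_tokens, n_requests, base_price_per_token):
    """Cost of sending the same prefix at full price on every request."""
    return prefix_tokens * base_price_per_token * n_requests


if __name__ == "__main__":
    gaps = [60, 600, 60]  # seconds between four consecutive requests
    for tier in (FIVE_MINUTE, ONE_HOUR):
        print(tier.name, prefix_cost(tier, 10_000, gaps, 1.0))
    print("uncached", uncached_cost(10_000, len(gaps) + 1, 1.0))
```

Under these assumed numbers, a single ten-minute pause is enough to make the longer window cheaper: the 5-minute tier pays for a second cache write, while the 1-hour tier pays a higher write price once and then only reads. With tightly spaced requests the ordering flips, which is the kind of workload-dependent choice the tiers force on users.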

The core challenge lies in balancing the cost benefits of caching against the risk of data eviction. Reading data from cache is more economical than fetching or recomputing it, but cache capacity is finite, so adding new data can displace existing cached information. Any later request for the displaced data then takes longer and costs more. This balancing act is why AI systems need sophisticated memory management strategies.
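The eviction risk can be illustrated with a small least-recently-used (LRU) cache. This is a sketch of one common eviction policy, chosen here only because it is easy to follow; the article does not say which policy any particular provider or system uses, and the `LRUCache` class and its method names are hypothetical.

```python
from collections import OrderedDict


class LRUCache:
    """A fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._entries = OrderedDict()  # oldest entry first, newest last
        self.hits = 0
        self.misses = 0

    def get(self, key):
        """Return the cached value, or None on a miss."""
        if key in self._entries:
            self._entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._entries[key]
        self.misses += 1
        return None

    def put(self, key, value):
        """Store a value and return the key that was evicted, if any."""
        if key in self._entries:
            self._entries.move_to_end(key)
        self._entries[key] = value
        if len(self._entries) > self.capacity:
            evicted_key, _ = self._entries.popitem(last=False)
            return evicted_key
        return None


if __name__ == "__main__":
    cache = LRUCache(capacity=2)
    cache.put("prompt-a", "cached state A")
    cache.put("prompt-b", "cached state B")
    cache.get("prompt-a")                      # A becomes most recently used
    print(cache.put("prompt-c", "cached state C"))  # the displaced key
    print(cache.get("prompt-b"))               # a miss: B must be rebuilt
```

Adding the third entry pushes out the one that was touched least recently, so the next request for it is a miss and has to be served at full cost. Sizing the cache and ordering requests to keep such misses rare is the balancing act described above.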

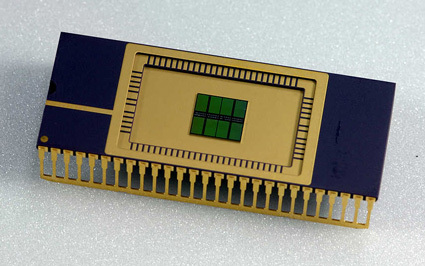

Memory optimization spans both software and hardware. Startups like Tensormesh are pioneering advancements in cache optimization, aiming to streamline data access patterns and reduce latency. On the hardware side, companies such as Sagence AI are developing analog chips designed to run AI models more efficiently, addressing the energy-intensive nature of traditional GPUs. By integrating processing and memory functions, these analog chips minimize data movement, thereby enhancing speed and reducing power consumption.

Another notable player, Lemurian Labs, is reimagining accelerated computing by developing chips that bring computation closer to data storage. This approach minimizes data transfer distances, leading to faster processing and lower energy usage. By rethinking the traditional architecture that separates memory and processing units, Lemurian Labs aims to overcome the physical limitations imposed by current semiconductor technologies.

The implications of efficient memory management are profound. As AI models become more complex and data-intensive, the ability to manage memory effectively will be a critical determinant of performance and cost. Companies that invest in advanced memory orchestration techniques and hardware innovations are poised to lead the next wave of AI advancements.

In conclusion, while GPUs have been the cornerstone of AI development, the future of AI efficiency lies in mastering memory management. By optimizing how data is stored, accessed, and processed, organizations can achieve significant cost savings and performance gains, positioning themselves at the forefront of the AI revolution.