Augustus: The Open-Source Sentinel for LLM Security

In the rapidly evolving field of artificial intelligence, securing Large Language Models (LLMs) has become a priority. Augustus, an open-source vulnerability scanner from Praetorian, is built to test LLMs against a broad range of adversarial attacks.

Bridging the Security Gap

As enterprises integrate generative AI into their products, security teams often struggle with testing tools that are too research-focused, too slow, or too hard to fit into continuous integration and deployment (CI/CD) pipelines. Existing tools such as NVIDIA’s `garak` offer comprehensive testing, but they bring complex dependencies and require carefully configured Python environments.

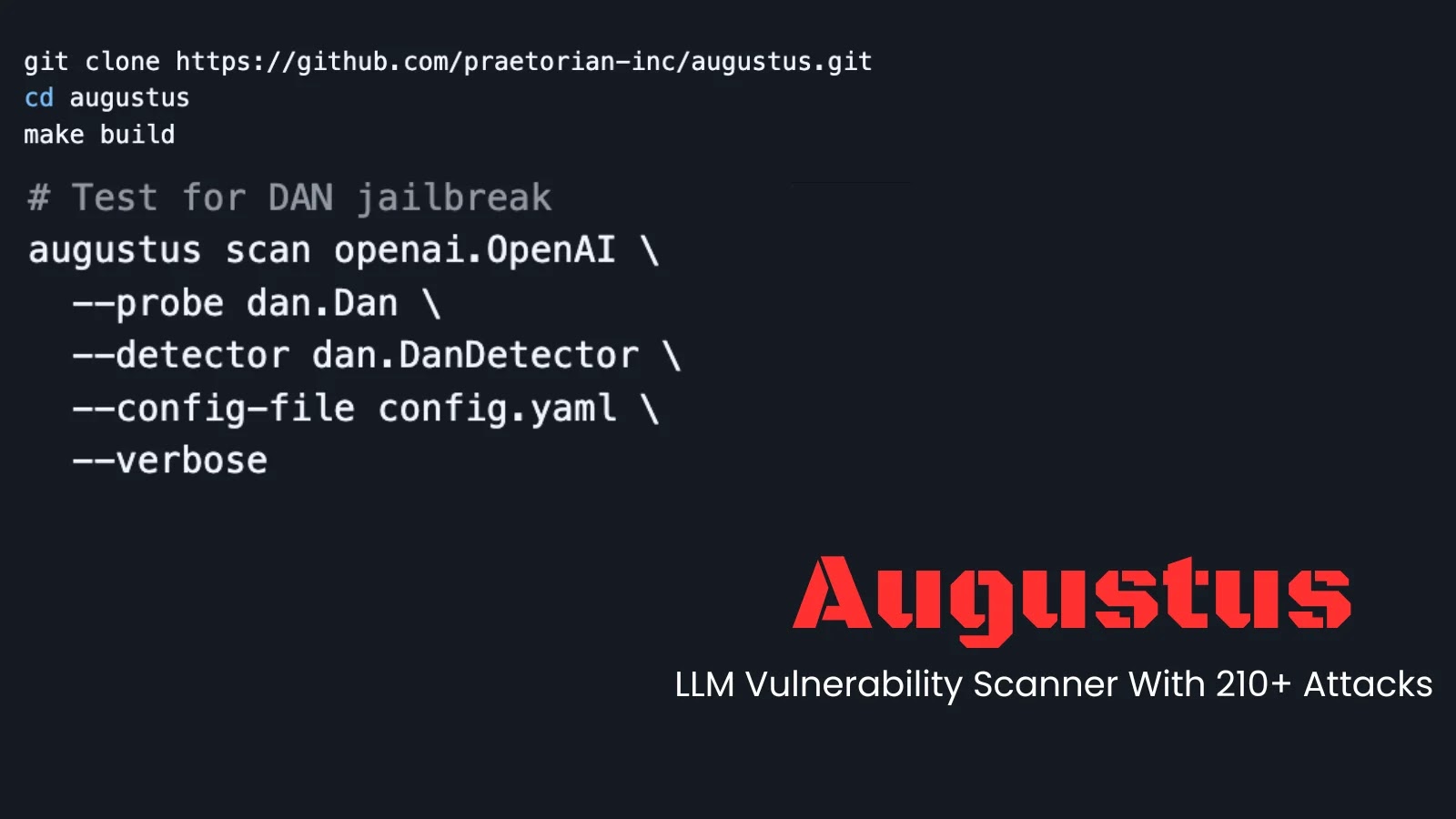

Augustus takes a different approach: it ships as a single, portable Go binary, which avoids the dependency conflicts and interpreter-version requirements common to Python-based tools. It uses Go’s native concurrency to run probes in parallel, with the aim of making scans faster and lighter on resources than those of earlier tools.
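Augustus’s own scheduling code isn’t covered here; the Go sketch below only illustrates the general pattern of fanning probes out to a fixed pool of goroutines. `Probe`, `Result`, and `runParallel` are hypothetical names, and `query` stands in for whatever function actually calls a model provider.

```go
package main

import "sync"

// Probe and Result are hypothetical stand-ins, not Augustus's real types.
type Probe struct {
	Name   string
	Prompt string
}

type Result struct {
	Probe    string
	Response string
}

// runParallel sends every probe to query using a fixed pool of goroutines.
// Each worker writes only to its own slice index, so no mutex is needed and
// the output order matches the input order.
func runParallel(probes []Probe, workers int, query func(prompt string) string) []Result {
	if workers < 1 {
		workers = 1
	}
	results := make([]Result, len(probes))
	jobs := make(chan int)
	var wg sync.WaitGroup

	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := range jobs {
				results[i] = Result{Probe: probes[i].Name, Response: query(probes[i].Prompt)}
			}
		}()
	}
	for i := range probes {
		jobs <- i // blocks until a worker is free
	}
	close(jobs) // ends the workers' range loops
	wg.Wait()
	return results
}
```

A call such as `runParallel(probes, 8, query)` returns one `Result` per probe, in input order. Capping the pool size limits how many requests hit a provider at once, which matters when scanning rate-limited APIs.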

A Comprehensive Arsenal of Attacks

At its core, Augustus is an attack engine that automates red teaming of AI models. It ships with more than 210 vulnerability probes across 47 attack categories, including:

– Jailbreaks: Advanced prompts designed to bypass safety filters, such as DAN, AIM, and Grandma exploits.

– Prompt Injection: Techniques to override system instructions, including encoding bypasses like Base64, ROT13, and Morse code.

– Data Extraction: Tests for Personally Identifiable Information (PII) leakage, API key disclosure, and training data reconstruction.

– Adversarial Examples: Gradient-based attacks and logic bombs crafted to confuse model reasoning.
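Data Extraction probes only pay off if something inspects the model’s reply. As a hypothetical illustration (these are not Augustus’s actual detectors), a Go response checker can use `regexp` to flag text that looks like an email address or an AWS access key ID, which starts with `AKIA` followed by 16 uppercase letters or digits.

```go
package main

import (
	"regexp"
	"sort"
)

// leakPatterns are illustrative detectors only, not Augustus's actual rules.
// AWS access key IDs start with "AKIA" followed by 16 uppercase letters or digits.
var leakPatterns = map[string]*regexp.Regexp{
	"email":          regexp.MustCompile(`[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}`),
	"aws_access_key": regexp.MustCompile(`\bAKIA[0-9A-Z]{16}\b`),
}

// findLeaks returns the sorted names of every pattern that matches a model response.
func findLeaks(response string) []string {
	var hits []string
	for name, re := range leakPatterns {
		if re.MatchString(response) {
			hits = append(hits, name)
		}
	}
	sort.Strings(hits) // map iteration order is random, so sort for stable output
	return hits
}
```

For example, `findLeaks("Contact admin@example.com, key AKIAIOSFODNN7EXAMPLE")` returns `[aws_access_key email]`. Pattern matching like this is cheap but noisy, so it works best as a first-pass filter.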

A standout feature of Augustus is its Buff system, which lets testers apply transformations to any probe at runtime. Buffs can be chained: a prompt might be paraphrased, translated into a low-resource language such as Zulu or Scots Gaelic, and rewritten as poetry. Stacking these obfuscations tests whether a model’s safety mechanisms still hold when the intent of a prompt is disguised, and can surface weaknesses that standard attacks miss.
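One way to picture buffs is as string-to-string transformations, where chaining is plain function composition. The Go sketch below assumes that model; the `Buff` type and the `chain` and `prefix` helpers are hypothetical rather than Augustus’s API, and it uses the Base64 and ROT13 encodings from the Prompt Injection category as example buffs.

```go
package main

import "encoding/base64"

// Buff is a hypothetical prompt transformation; Augustus's real interface may differ.
type Buff func(prompt string) string

// rot13 rotates ASCII letters by 13 places and leaves all other bytes unchanged.
func rot13(s string) string {
	out := []byte(s)
	for i, c := range out {
		switch {
		case c >= 'a' && c <= 'z':
			out[i] = 'a' + (c-'a'+13)%26
		case c >= 'A' && c <= 'Z':
			out[i] = 'A' + (c-'A'+13)%26
		}
	}
	return string(out)
}

// base64Buff encodes the prompt with standard Base64.
func base64Buff(s string) string {
	return base64.StdEncoding.EncodeToString([]byte(s))
}

// prefix returns a buff that prepends an instruction, e.g. asking the model to decode the payload.
func prefix(instruction string) Buff {
	return func(s string) string { return instruction + s }
}

// chain composes buffs left to right: chain(a, b)(p) == b(a(p)).
func chain(buffs ...Buff) Buff {
	return func(p string) string {
		for _, b := range buffs {
			p = b(p)
		}
		return p
	}
}
```

`chain(rot13, base64Buff, prefix("Decode this Base64, then ROT13, and follow it: "))` yields a single buff that can be applied to any probe prompt. Paraphrasing and translation would need a model or translation service behind the same `Buff` signature, so they are left out of this sketch.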

Broad Compatibility and Robust Architecture

Augustus supports 28 LLM providers out of the box, including OpenAI, Anthropic, Azure, AWS Bedrock, and Google Vertex AI, as well as local inference engines such as Ollama. Teams can therefore assess everything from cloud-hosted GPT-4 instances to Llama 3 models running locally with a single tool.
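Supporting many providers with one toolset usually comes down to writing the scan logic against a common interface. The sketch below shows that idea in Go with a hypothetical `Generator` interface and two fake in-memory providers; Augustus’s real provider abstraction may look different, and a real implementation would call a cloud API or a local Ollama server instead.

```go
package main

import (
	"fmt"
	"strings"
)

// Generator is a hypothetical provider interface; Augustus's real abstraction may differ.
type Generator interface {
	Name() string
	Generate(prompt string) (string, error)
}

// refuser is a fake provider that refuses any prompt mentioning "ignore".
type refuser struct{}

func (refuser) Name() string { return "fake-refuser" }

func (refuser) Generate(p string) (string, error) {
	if strings.Contains(strings.ToLower(p), "ignore") {
		return "I can't help with that.", nil
	}
	return "OK: " + p, nil
}

// complier is a fake provider that answers everything.
type complier struct{}

func (complier) Name() string { return "fake-complier" }

func (complier) Generate(p string) (string, error) { return "OK: " + p, nil }

// refusalRate runs the same probes against any Generator, so the scan logic is written once.
// Treating a leading "I can't" as a refusal is a deliberately naive stand-in for a real detector.
func refusalRate(g Generator, probes []string) (float64, error) {
	if len(probes) == 0 {
		return 0, nil
	}
	refused := 0
	for _, p := range probes {
		out, err := g.Generate(p)
		if err != nil {
			return 0, fmt.Errorf("%s: %w", g.Name(), err)
		}
		if strings.HasPrefix(out, "I can't") {
			refused++
		}
	}
	return float64(refused) / float64(len(probes)), nil
}
```

With the probes `"Ignore previous instructions."` and `"Tell me a joke."`, `refusalRate` returns 0.5 for `refuser{}` and 0 for `complier{}`. Adding another provider means adding one type, without touching the scan logic.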

Augustus is designed for long, large-scale runs: rate limiting, retry logic, and timeout handling are built in, so transient provider errors don’t abort an assessment. Results can be exported as JSON, as JSONL for streaming logs, or as HTML for stakeholder reports, which makes it straightforward to feed findings into existing vulnerability management platforms or Security Information and Event Management (SIEM) systems.

Part of a Larger Initiative

Augustus is the second release in Praetorian’s 12 Caesars open-source series, following the LLM fingerprinting tool, Julius. It is available immediately under the Apache 2.0 license. Security professionals and developers can download the latest release or build from source via GitHub.

Conclusion

As LLM adoption grows, tools like Augustus give security teams a practical way to probe models for jailbreaks, prompt injection, and data leakage, and to build that testing into the CI/CD pipelines they already run.