Unveiling the Risks: 175,000 Publicly Exposed Ollama AI Servers Across 130 Countries

A recent joint investigation by SentinelOne’s SentinelLABS and Censys found that approximately 175,000 Ollama AI servers are publicly accessible across 130 countries. This widespread exposure creates an unmanaged and unmonitored layer of AI computing infrastructure, much of it running without the safeguards a managed platform would provide.

Global Distribution and Concentration

The analysis reveals that more than 30% of these exposed servers are located in China, with other large concentrations in the United States, Germany, France, South Korea, India, Russia, Singapore, Brazil, and the United Kingdom. These servers are found in both cloud environments and residential networks, operating beyond the typical security measures implemented by platform providers.

Tool-Calling Capabilities and Security Implications

Notably, nearly half of the identified servers are configured with tool-calling capabilities. This feature enables the servers to execute code, access APIs, and interact with external systems, indicating a growing integration of large language models (LLMs) into broader system processes. While this functionality makes AI applications more versatile, it also widens the attack surface: on an exposed server, those same capabilities are potentially within reach of anyone who can connect to it.

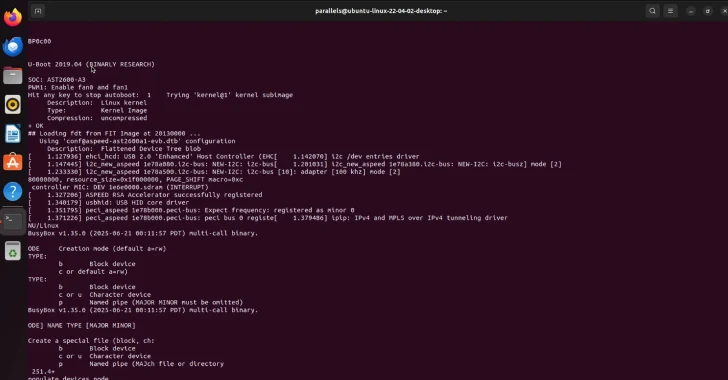

Understanding Ollama’s Configuration and Exposure

Ollama is an open-source framework that lets users download, run, and manage LLMs locally on Windows, macOS, and Linux. By default, Ollama binds to the localhost address at 127.0.0.1:11434, so it accepts connections only from the same machine. However, a simple configuration change, such as binding to 0.0.0.0 or a public interface, can expose the server to the public internet. This ease of exposure, combined with the lack of enterprise-level security oversight, makes it harder to distinguish managed from unmanaged AI computing resources.
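
The difference between the default loopback binding and an all-interfaces binding can be checked mechanically. The following is a minimal Python sketch, not part of the original research: `classify_bind_address` is a hypothetical helper that takes a bind string such as the ones quoted above and reports how widely it listens. It assumes IPv4 literals or the name `localhost`; IPv6 addresses and other hostnames are reported as unknown.

```python
import ipaddress


def classify_bind_address(bind: str) -> str:
    """Classify an IPv4 'host[:port]' bind string by how widely it listens.

    Hypothetical helper for illustration; not part of Ollama.
    """
    # Split off an optional ':port' suffix (IPv4 only, so at most one colon).
    host, sep, _port = bind.rpartition(":")
    if not sep:
        host = bind  # no port was given

    if host == "localhost":
        return "loopback"

    try:
        addr = ipaddress.IPv4Address(host)
    except ValueError:
        return "unknown"

    # Order matters: 0.0.0.0 and 127.0.0.1 also count as "private" in the
    # ipaddress module, so the more specific checks come first.
    if addr.is_unspecified:   # 0.0.0.0: every interface on the machine
        return "all-interfaces"
    if addr.is_loopback:      # 127.0.0.0/8: reachable only from the same host
        return "loopback"
    if addr.is_private:       # e.g. RFC 1918 ranges such as 192.168.0.0/16
        return "private-interface"
    return "public-interface"


if __name__ == "__main__":
    for value in ("127.0.0.1:11434", "0.0.0.0:11434"):
        print(value, "->", classify_bind_address(value))
```

A result of `all-interfaces` or `public-interface` does not by itself prove internet exposure, since a firewall or NAT may still block inbound traffic, but it marks the configurations worth reviewing.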

The Threat of LLMjacking

The public accessibility of these servers makes them susceptible to a form of cyber exploitation known as LLMjacking. In such scenarios, malicious actors can hijack LLM infrastructure resources for their own purposes, leaving the legitimate owners to bear the operational costs. Potential abuses include generating spam emails, conducting disinformation campaigns, mining cryptocurrencies, and reselling access to other criminal entities.

Real-World Exploitation: Operation Bizarre Bazaar

The risk of LLMjacking is not merely theoretical. A recent report by Pillar Security describes an active campaign named Operation Bizarre Bazaar, in which threat actors systematically scan the internet for exposed LLM service endpoints. These endpoints, including Ollama instances, vLLM servers, and OpenAI-compatible APIs lacking proper authentication, are then exploited and monetized. The operation involves validating the quality of these endpoints and commercializing access through platforms like silver.inc, which functions as a Unified LLM API Gateway.

Recommendations for Mitigating Risks

To address these risks, organizations and individuals running Ollama and similar AI frameworks should implement the following security measures:

1. Restrict Public Exposure: Ensure that AI servers are not accessible from the public internet unless absolutely necessary.

2. Implement Strong Authentication: Require authentication for all API endpoints to prevent unauthorized access.

3. Regular Security Audits: Conduct periodic reviews of AI infrastructure to identify and rectify potential security gaps.

4. Monitor for Unusual Activity: Establish monitoring systems to detect and respond to suspicious activities promptly.

5. Educate Users: Provide training on secure configuration practices and the risks associated with exposing AI servers to the internet.
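
As a starting point for the first and third recommendations, an operator can test whether the Ollama port on a host they own answers TCP connections from a given vantage point. A minimal sketch follows, assuming the default port 11434 mentioned earlier; `is_port_reachable` is a hypothetical helper, and a successful connection shows only that something is listening on that port, not that it is Ollama or that it lacks authentication.

```python
import socket


def is_port_reachable(host: str, port: int = 11434, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Hypothetical audit helper; the default port is the Ollama default
    cited in the article.
    """
    try:
        # create_connection resolves the host and attempts a TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


if __name__ == "__main__":
    # Checks the local machine only; substitute a host you administer.
    print(is_port_reachable("127.0.0.1"))
```

Run from outside the network, for example from a separate host the operator controls, a `True` result for a server that was meant to be local suggests the binding or firewall rules need attention.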

Conclusion

The discovery of 175,000 publicly exposed Ollama AI servers underscores the urgent need for heightened security awareness and proactive measures in the deployment of AI technologies. As AI continues to integrate into various sectors, ensuring the security of its infrastructure is paramount to prevent exploitation and maintain trust in these advanced systems.