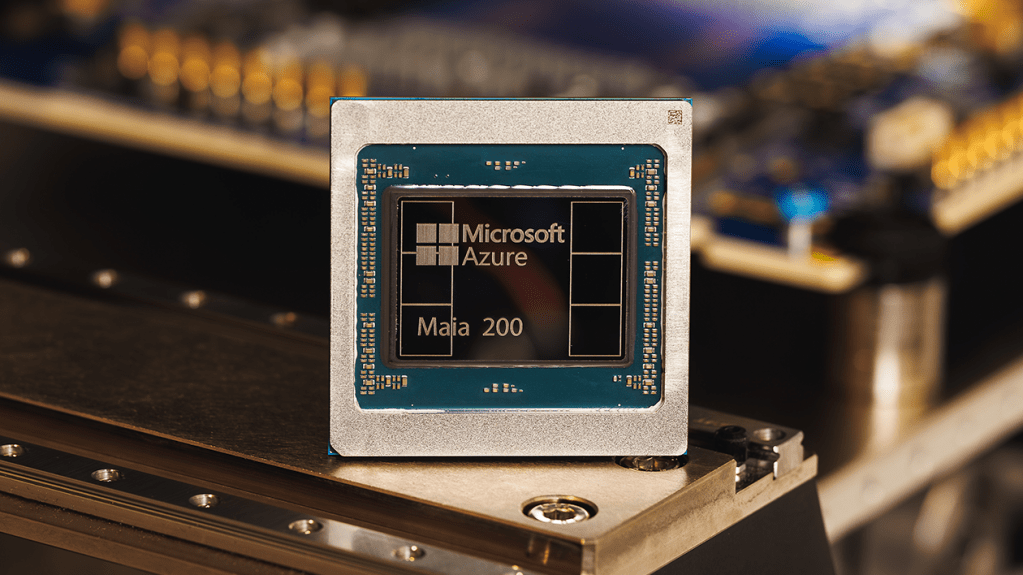

Microsoft Unveils Maia 200: A Game-Changer in AI Inference Technology

Microsoft has introduced the Maia 200, its latest chip built for AI inference. It succeeds the Maia 100, released in 2023, and is designed to run large AI models faster and more efficiently than its predecessor.

The Maia 200 packs more than 100 billion transistors and delivers over 10 petaflops of performance at 4-bit precision (FP4) and approximately 5 petaflops at 8-bit precision (FP8). That is a substantial improvement over the Maia 100.
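To make the precision figures concrete, here is a minimal Python sketch of the arithmetic behind them. It uses the two throughput numbers quoted above as rough values and assumes a hypothetical 100-billion-parameter model, which is an illustrative size and not a figure from Microsoft. The function names are invented for this example. It counts only the storage for the weights themselves and ignores activations, caches, and any format overhead.

```python
# Peak throughput figures quoted in the article, in petaflops. The text says
# "more than" and "approximately", so treat these as rough values, not specs.
PEAK_PFLOPS = {"fp4": 10.0, "fp8": 5.0}

# Bits used to store one weight at each precision.
BITS_PER_WEIGHT = {"fp4": 4, "fp8": 8}


def weight_footprint_gb(num_params: int, precision: str) -> float:
    """Storage for the weights alone, in gigabytes (10^9 bytes)."""
    bits = BITS_PER_WEIGHT[precision]
    return num_params * bits / 8 / 1e9


def throughput_ratio(numerator: str, denominator: str) -> float:
    """Ratio of the quoted peak throughputs for two precisions."""
    return PEAK_PFLOPS[numerator] / PEAK_PFLOPS[denominator]


if __name__ == "__main__":
    # Hypothetical model size chosen for illustration only.
    hypothetical_params = 100_000_000_000
    for precision in ("fp4", "fp8"):
        size = weight_footprint_gb(hypothetical_params, precision)
        print(f"{precision}: {size} GB of weights")
    print("fp4/fp8 peak ratio:", throughput_ratio("fp4", "fp8"))
```

Under these assumptions, halving the precision halves the weight storage and, per the quoted figures, roughly doubles peak throughput, which is why lower-precision formats are attractive for inference.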

AI inference, the process of running trained models to generate outputs, is central to deploying AI applications. As AI spreads across industries, the efficiency and cost of inference have become more important. Microsoft aims to address both with the Maia 200, which it says reduces operational disruptions and lowers power consumption. The company asserts that a single Maia 200 node can run today’s largest AI models, with ample capacity for larger, more complex models in the future.

The development of the Maia 200 reflects a broader trend among tech giants to design proprietary chips, reducing reliance on external suppliers like Nvidia, whose GPUs have been central to AI advancements. Google, for instance, has developed its Tensor Processing Units (TPUs), accessible through its cloud services. Similarly, Amazon introduced its Trainium AI accelerator chips, with the latest version, Trainium3, launched in December. These in-house solutions aim to optimize performance and manage hardware costs more effectively.

With the Maia 200, Microsoft strengthens its position in this competitive landscape. The chip delivers three times the FP4 performance of Amazon’s third-generation Trainium chips and surpasses Google’s seventh-generation TPU in FP8 performance.

The Maia 200 already powers models developed by Microsoft’s Superintelligence team and supports the operations of Copilot, the company’s AI assistant. Microsoft has also invited developers, academics, and leading AI research labs to use the Maia 200 software development kit in their projects.

The Maia 200 signals Microsoft’s continued investment in AI infrastructure. By building proprietary hardware tailored for inference, the company strengthens its own AI capabilities and gives outside developers and researchers access to that hardware through its software development kit.