Critical Vulnerabilities in Chainlit AI Framework Expose Sensitive Data to Potential Theft

Recent security assessments have identified significant vulnerabilities in Chainlit, a widely used open-source framework for building conversational AI applications. Collectively named ChainLeak by Zafran Security, the flaws could allow attackers to read sensitive data and potentially use it to move laterally across an affected organization's network.

Overview of Chainlit:

Chainlit is a foundational tool for developers building interactive chatbot interfaces. According to Python Software Foundation download data, the framework has been downloaded more than 220,000 times in the past week alone and 7.3 million times in total.

Detailed Examination of Identified Vulnerabilities:

1. CVE-2026-22218 (CVSS score: 7.1): An arbitrary file read flaw in the /project/element update flow. Because user-controlled input is not sufficiently validated, an authenticated user could read any file that the Chainlit service process has permission to read and extract sensitive information from it.

2. CVE-2026-22219 (CVSS score: 8.3): A server-side request forgery (SSRF) flaw in the same update flow, affecting deployments configured with the SQLAlchemy data layer backend. An attacker could use it to make the Chainlit server send HTTP requests to internal network services or cloud metadata endpoints, and potentially retrieve and store the sensitive responses.
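The file-read flaw comes down to trusting a user-supplied path. The usual defence is to resolve the requested path and refuse anything that lands outside an allowed directory. The Python sketch below illustrates that general technique only; it is not Chainlit's actual fix, and the helper name `resolve_within` and the `/srv/app/uploads` directory are hypothetical.

```python
from pathlib import Path


def resolve_within(base_dir: str, user_path: str) -> Path:
    """Resolve user_path under base_dir, refusing anything that escapes it.

    Hypothetical helper for illustration; not Chainlit's code.
    """
    base = Path(base_dir).resolve()
    # resolve() collapses ".." segments and follows symlinks, so the check
    # below sees where the path really points.
    candidate = (base / user_path).resolve()
    # An absolute user_path such as "/proc/self/environ" replaces base
    # entirely when joined, and ".." can climb out of it; both fail here.
    if not candidate.is_relative_to(base):
        raise PermissionError(f"path escapes {base}: {user_path!r}")
    return candidate
```

For example, `resolve_within("/srv/app/uploads", "/proc/self/environ")` raises `PermissionError`, because joining an absolute path discards the base directory. `Path.is_relative_to` requires Python 3.9 or later.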

Potential Exploitation Scenarios:

The combination of these vulnerabilities presents multiple avenues for exploitation:

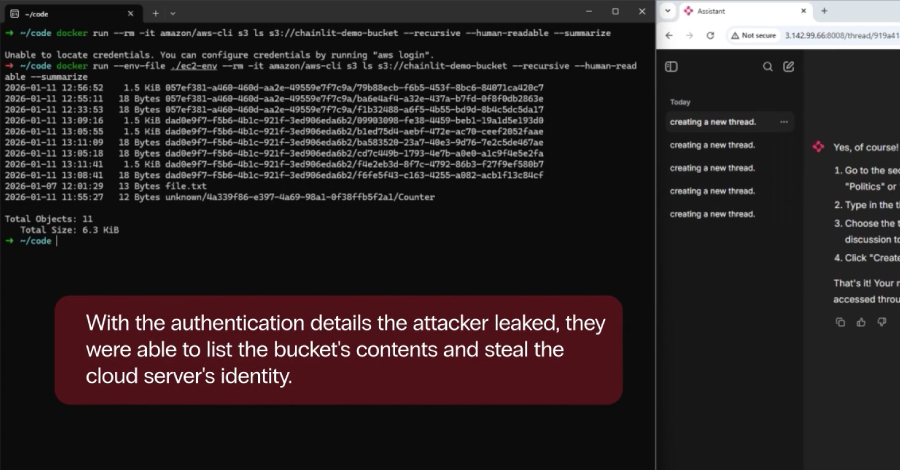

– Data Exfiltration: Using CVE-2026-22218, an attacker could read sensitive files such as /proc/self/environ, which exposes the process's environment variables. These may include API keys, credentials, and internal file paths that could help the attacker penetrate further into the network and obtain the application's source code.

– Database Compromise: Where Chainlit uses SQLAlchemy with an SQLite backend, the arbitrary file read flaw could be used to download the database file itself, exposing the data stored in it.

– Cloud Environment Intrusion: If Chainlit runs on an Amazon Web Services (AWS) EC2 instance with Instance Metadata Service Version 1 (IMDSv1) enabled, the SSRF flaw (CVE-2026-22219) could be used to query the instance metadata service at 169.254.169.254. IMDSv1 answers plain, unauthenticated GET requests, whereas IMDSv2 first requires a session token obtained through a separate PUT request. The response could give attackers the instance's IAM role credentials, enabling lateral movement within the cloud environment.
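A common defence against SSRF of this kind is to resolve the destination host before making a request and refuse anything that is not a public address, which covers the 169.254.169.254 metadata endpoint. The following Python sketch assumes the application controls the outbound request; `is_safe_destination` is a hypothetical helper, not part of Chainlit.

```python
import ipaddress
import socket
from urllib.parse import urlparse


def is_safe_destination(url: str) -> bool:
    """Return False if url is not http(s) or its host resolves to any
    non-public address (loopback, RFC 1918, link-local, and so on).

    Hypothetical helper; a sketch of a common SSRF check, not Chainlit's fix.
    """
    parsed = urlparse(url)
    # Only plain web requests; file:, gopher:, and similar are refused.
    if parsed.scheme not in ("http", "https") or not parsed.hostname:
        return False
    try:
        # Numeric hosts such as 169.254.169.254 are parsed without DNS.
        infos = socket.getaddrinfo(parsed.hostname, None)
    except socket.gaierror:
        return False
    for _family, _type, _proto, _canon, sockaddr in infos:
        addr = ipaddress.ip_address(sockaddr[0])
        # Unwrap IPv4-mapped IPv6 (::ffff:a.b.c.d) so IPv4 rules apply.
        if addr.version == 6 and addr.ipv4_mapped is not None:
            addr = addr.ipv4_mapped
        # is_global is False for loopback, private, and link-local ranges;
        # a single internal record is enough to refuse the request.
        if not addr.is_global:
            return False
    return True
```

Checking first and fetching later leaves a window for DNS rebinding, so a hardened version would connect to the exact address it validated and re-validate any redirect targets.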

Mitigation Measures and Recommendations:

After the vulnerabilities were responsibly disclosed on November 23, 2025, the Chainlit maintainers fixed them in version 2.9.4, released on December 24, 2025. Organizations using Chainlit are strongly advised to upgrade to version 2.9.4 or later.
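To confirm whether a given environment already has the fix, the installed version can be compared with 2.9.4. This is a minimal Python sketch using only the standard library; the helper names are hypothetical, and the regex deliberately ignores pre-release and local-version suffixes.

```python
import re
from importlib import metadata
from typing import Optional, Tuple

# First release containing the ChainLeak fixes, per the disclosure timeline.
PATCHED = (2, 9, 4)


def release_tuple(version: str) -> Tuple[int, ...]:
    """Extract MAJOR.MINOR.PATCH from a version string such as '2.9.4'."""
    match = re.match(r"(\d+)\.(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognised version: {version!r}")
    return tuple(int(part) for part in match.groups())


def is_patched(version: str) -> bool:
    # Tuple comparison puts (2, 10, 0) after (2, 9, 4); string comparison would not.
    return release_tuple(version) >= PATCHED


def installed_chainlit_version() -> Optional[str]:
    """Return the installed chainlit version, or None if it is absent."""
    try:
        return metadata.version("chainlit")
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    current = installed_chainlit_version()
    if current is None:
        print("chainlit is not installed")
    elif is_patched(current):
        print(f"chainlit {current}: includes the 2.9.4 fixes")
    else:
        print(f"chainlit {current}: upgrade to 2.9.4 or later")
```

Because suffixes are ignored, `2.9.4rc1` would be reported as patched; `packaging.version.Version` gives exact PEP 440 ordering if that matters. The upgrade itself is `pip install --upgrade "chainlit>=2.9.4"`.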

Zafran Security notes that as AI frameworks and third-party components become more deeply embedded in organizational infrastructure, they bring traditional software vulnerabilities into AI systems. The result is new and often poorly understood attack surfaces, where well-known vulnerability classes such as arbitrary file read and SSRF can directly compromise AI-powered applications.

Broader Context:

The disclosure coincides with the discovery of a similar SSRF flaw, dubbed MCP fURI, in Microsoft's MarkItDown Model Context Protocol (MCP) server. The flaw allows the server to be made to call arbitrary URIs, exposing organizations to privilege escalation, SSRF, and data leakage. The risk is greatest when the server runs on an AWS EC2 instance with IMDSv1 enabled.
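For tools that fetch caller-supplied URIs, such as the MarkItDown MCP server described above, an allowlist of schemes and hosts is a stricter control than blocking known-bad addresses: anything not explicitly permitted, including `file:` URIs and raw IP addresses, is rejected. The sketch below is hypothetical; `check_uri` and the example host names are placeholders, not MarkItDown's API.

```python
from urllib.parse import urlparse

# Hypothetical policy for a tool that fetches caller-supplied URIs.
ALLOWED_SCHEMES = frozenset({"https"})
ALLOWED_HOSTS = frozenset({"docs.example.com", "files.example.com"})


def check_uri(uri: str) -> str:
    """Return uri unchanged if it passes the allowlist, else raise ValueError."""
    parsed = urlparse(uri)
    scheme = parsed.scheme.lower()
    if scheme not in ALLOWED_SCHEMES:
        # Rejects file:, data:, plain http:, and anything else not listed.
        raise ValueError(f"scheme not allowed: {scheme or '(none)'}")
    host = (parsed.hostname or "").lower()
    if host not in ALLOWED_HOSTS:
        # Raw IPs such as 169.254.169.254 never match a named entry.
        raise ValueError(f"host not allowed: {host or '(none)'}")
    return uri
```

A URI such as `https://docs.example.com@169.254.169.254/` is also rejected, because `urlparse` treats the part before `@` as credentials and reports the metadata IP as the host. HTTP redirects need the same check, or should be disabled.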

In light of these findings, organizations should:

– Regularly Update Software: Keep all AI frameworks and related components up to date with the latest security patches.

– Conduct Comprehensive Security Audits: Regularly assess AI applications and their underlying frameworks for potential vulnerabilities, especially those that could lead to data breaches or unauthorized access.

– Implement Robust Access Controls: Establish stringent access controls and authentication mechanisms to limit the potential impact of exploited vulnerabilities.

– Monitor for Unusual Activity: Deploy monitoring tools to detect and respond to anomalous activities that may indicate exploitation attempts.
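As a starting point for monitoring, application or proxy logs can be scanned for strings tied to the attack paths described above. The Python sketch assumes plain-text request logs in a hypothetical format and uses a hypothetical indicator list; substring matching like this is noisy and easy to evade, so treat it as a triage aid rather than a substitute for proper detection tooling.

```python
from typing import Iterable, Iterator, Tuple
from urllib.parse import unquote

# Hypothetical indicators tied to the file-read and SSRF paths above.
INDICATORS = {
    "/proc/self/environ": "read of process environment variables",
    "169.254.169.254": "cloud instance metadata endpoint",
    "../": "path traversal sequence",
    "file://": "file URI",
}


def flag_suspicious(lines: Iterable[str]) -> Iterator[Tuple[int, str, str]]:
    """Yield (line_number, indicator, description) for each match."""
    for number, line in enumerate(lines, start=1):
        # Percent-decode once so "..%2F" is seen as "../".
        decoded = unquote(line)
        for needle, description in INDICATORS.items():
            if needle in decoded:
                yield number, needle, description
```

Decoding once catches single-encoded traversal such as `..%2F`; double-encoded payloads would still slip through.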

By addressing these vulnerabilities proactively and applying the measures above, organizations can better protect their AI infrastructure and preserve the integrity and confidentiality of their data.