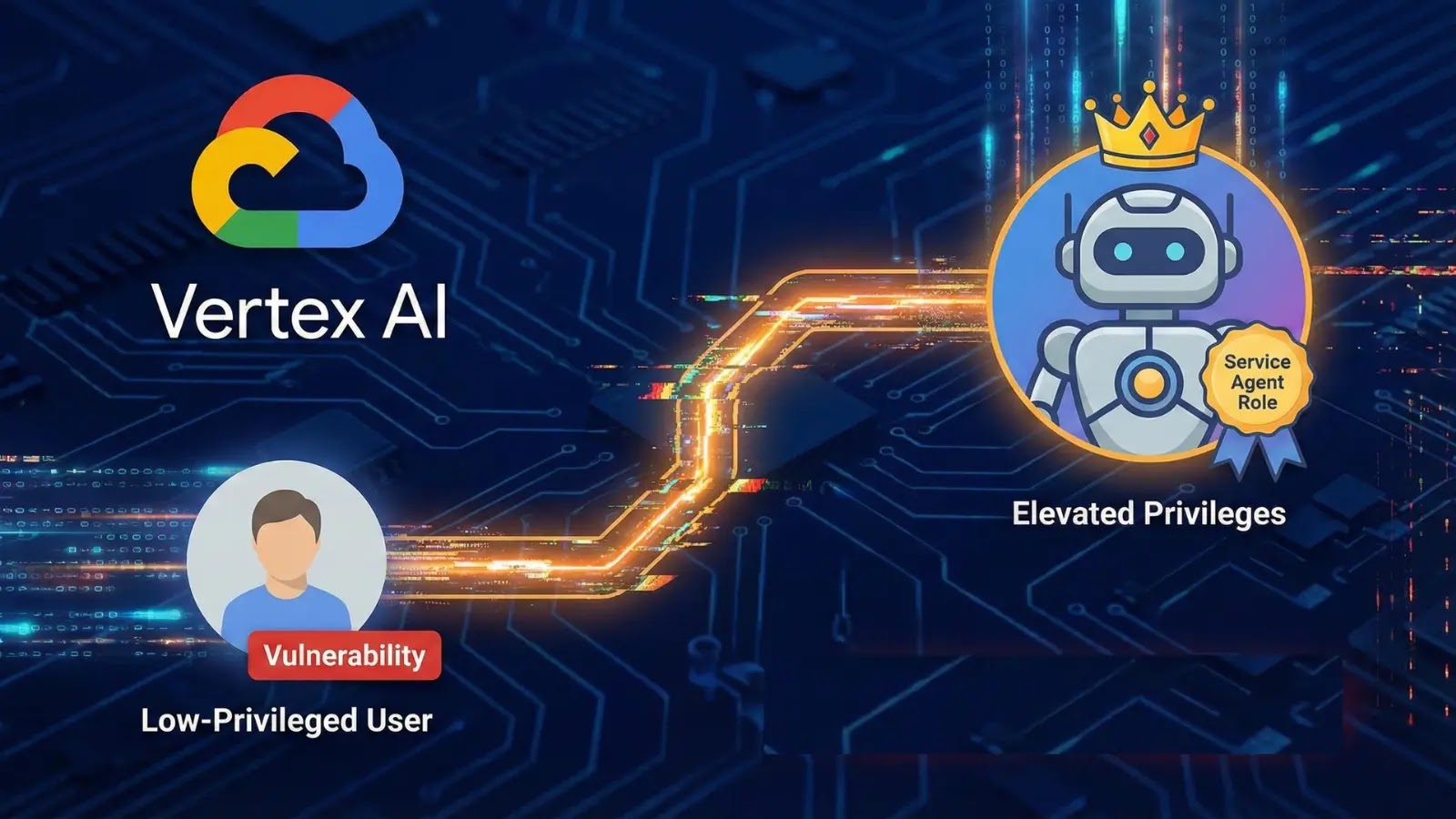

Critical Vulnerability in Google’s Vertex AI Allows Privilege Escalation

Researchers at XM Cyber have identified a significant security vulnerability in Google’s Vertex AI platform that lets users with minimal privileges escalate their access by abusing the broad permissions granted to Service Agents. The flaw poses a substantial risk to organizations that run their machine learning operations on Vertex AI.

Understanding the Vulnerability

Vertex AI, Google’s managed machine learning platform, relies on Service Agents: Google-managed identities that the platform attaches to its instances to perform internal operations. By default, these Service Agents hold extensive project-level permissions, so anyone who obtains a Service Agent’s credentials can access and control critical resources with those same permissions.
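To see which Service Agents a project has and which roles they hold, one can scan the project’s IAM policy. The sketch below assumes the policy has been exported as JSON (for example with `gcloud projects get-iam-policy PROJECT_ID --format=json`) and that Service Agents follow Google’s usual `service-PROJECT_NUMBER@gcp-sa-SERVICE.iam.gserviceaccount.com` naming; the function name `service_agent_roles` is hypothetical.

```python
import re

# Assumption: Google-managed Service Agents use the naming pattern
# service-PROJECT_NUMBER@gcp-sa-SERVICE.iam.gserviceaccount.com
SERVICE_AGENT_RE = re.compile(
    r"^serviceAccount:service-\d+@gcp-sa-[a-z0-9-]+\.iam\.gserviceaccount\.com$"
)


def service_agent_roles(policy: dict) -> dict[str, list[str]]:
    """Map each Service Agent member in an IAM policy to the roles it holds."""
    roles_by_agent: dict[str, list[str]] = {}
    for binding in policy.get("bindings", []):
        for member in binding.get("members", []):
            if SERVICE_AGENT_RE.match(member):
                roles_by_agent.setdefault(member, []).append(binding["role"])
    return roles_by_agent
```

Every member it returns is an identity whose roles an attacker effectively inherits once they obtain that agent’s token, which makes this list a natural starting point for the permission review discussed under mitigation.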

The vulnerability manifests through two primary attack vectors:

1. Agent Engine Tool Injection: Attackers with the `aiplatform.reasoningEngines.update` permission can upload malicious code disguised as legitimate tools. For instance, a reverse shell embedded in a seemingly benign tool function lets the attacker execute arbitrary commands on the reasoning engine instance. From there, the attacker can retrieve the Reasoning Engine Service Agent’s token, whose permissions cover sensitive data including Large Language Model (LLM) memories, chat sessions, and storage buckets.

2. Ray on Vertex AI Exploitation: Ray clusters, used for scalable AI workloads, automatically attach the Custom Code Service Agent to the head node. Users with the `aiplatform.persistentResources.list` and `aiplatform.persistentResources.get` permissions, typically included in the Vertex AI Viewer role, can open the head node’s interactive shell. That shell runs as root, so attackers can extract the Service Agent’s token and gain read-write access to Google Cloud Storage (GCS) and BigQuery datasets.
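Because both vectors start from ordinary-looking permissions, a useful first check is who holds them. The sketch below flags policy members whose roles include any of the permissions named above. It assumes the caller supplies a mapping from role name to included permissions (for example, gathered with `gcloud iam roles describe`), since an IAM policy lists roles rather than permissions; `find_risky_principals` and `RISKY_PERMISSIONS` are hypothetical names.

```python
# Permissions the research names as entry points for each attack vector.
RISKY_PERMISSIONS = {
    "aiplatform.reasoningEngines.update": "Agent Engine tool injection",
    "aiplatform.persistentResources.get": "Ray head-node shell access",
    "aiplatform.persistentResources.list": "Ray head-node shell access",
}


def find_risky_principals(
    policy: dict, role_permissions: dict[str, set[str]]
) -> dict[str, set[str]]:
    """Return {member: {attack vector, ...}} for members with a risky permission.

    role_permissions (role name -> included permissions) is an assumed,
    caller-supplied input; unknown roles are treated as granting nothing.
    """
    findings: dict[str, set[str]] = {}
    for binding in policy.get("bindings", []):
        perms = role_permissions.get(binding["role"], set())
        vectors = {RISKY_PERMISSIONS[p] for p in perms if p in RISKY_PERMISSIONS}
        if not vectors:
            continue
        for member in binding.get("members", []):
            findings.setdefault(member, set()).update(vectors)
    return findings
```

It ignores IAM Conditions and bindings inherited from folders or the organization, so its output is a starting point for review rather than a complete answer.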

Technical Breakdown

The exploitation process involves several steps:

– Initial Access: An attacker who holds only minimal permissions targets the default Service Agent configuration of Vertex AI.

– Payload Deployment: For Agent Engine, the attacker uploads a malicious tool containing a reverse shell. For Ray on Vertex AI, the attacker instead opens the head node’s interactive shell.

– Code Execution: Once the malicious tool runs, or once the interactive shell is open, the attacker can execute arbitrary commands on the instance.

– Credential Harvesting: The attacker retrieves the Service Agent’s access token from the instance metadata server.

– Privilege Escalation: With the Service Agent’s token, the attacker gains elevated permissions, enabling access to sensitive data and resources.
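These steps form a chain in which code execution on a workload hands the attacker that workload’s identity. One way to reason about such chains is a breadth-first search over identities, sketched below under stated assumptions: `effective_permissions`, `grants`, and `exec_paths` are hypothetical names for inputs you would build from your own IAM data, and this is a simplified model, not XM Cyber’s tooling.

```python
from collections import deque


def effective_permissions(
    start: str,
    grants: dict[str, set[str]],
    exec_paths: dict[str, str],
) -> set[str]:
    """Return every permission reachable from `start` by hopping identities.

    grants:     identity -> permissions it holds directly.
    exec_paths: permission -> identity whose token becomes obtainable once
                that permission yields code execution on a workload running
                as that identity (hypothetical model of the steps above).
    """
    seen = {start}
    reachable: set[str] = set()
    queue = deque([start])
    while queue:
        identity = queue.popleft()  # act with this identity's permissions
        for perm in grants.get(identity, set()):
            reachable.add(perm)
            target = exec_paths.get(perm)  # execute code, harvest the token
            if target is not None and target not in seen:
                seen.add(target)
                queue.append(target)
    return reachable
```

For example, with `grants = {"user:analyst": {"aiplatform.persistentResources.get"}, "custom-code-agent": {"bigquery.tables.getData"}}` and `exec_paths = {"aiplatform.persistentResources.get": "custom-code-agent"}`, calling `effective_permissions("user:analyst", grants, exec_paths)` returns a set containing both permissions: the viewer-level grant and the BigQuery access inherited through the (illustratively named) Custom Code Service Agent.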

Potential Impact

The implications of this vulnerability are far-reaching:

– Data Breach: Unauthorized access to data stored in GCS and BigQuery can expose confidential information.

– Service Disruption: Attackers with elevated privileges can disrupt machine learning operations, leading to downtime and potential loss of business continuity.

– Financial Loss: Exploitation of this vulnerability can result in financial repercussions due to data breaches, regulatory fines, and remediation costs.

Mitigation Strategies

To safeguard against this vulnerability, organizations should implement the following measures:

1. Review and Restrict Permissions: Audit and minimize the permissions granted to Service Agents. Use custom roles so that each Service Agent holds only the permissions its specific tasks require.

2. Disable Unnecessary Access: Disable interactive shell access on Ray head nodes where it is not needed, to prevent unauthorized root access.

3. Validate Code Deployments: Implement strict validation processes for tools and code before deployment to prevent the introduction of malicious code.

4. Monitor Metadata Access: Utilize Google Cloud’s Security Command Center to monitor and detect unauthorized access to instance metadata, which could indicate an attempt to retrieve Service Agent tokens.

5. Regular Audits: Conduct regular audits of persistent resources and reasoning engines to identify and remediate potential security risks.
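For the code-validation measure, a lightweight pre-deployment check can parse tool source with Python’s standard `ast` module and flag constructs that reverse shells and token grabbers commonly rely on. The deny-lists below are illustrative assumptions, not a vetted policy, and `scan_tool_source` is a hypothetical helper.

```python
import ast

# Illustrative deny-lists (assumptions, not a vetted policy): things a simple
# tool rarely needs but a reverse shell or token grabber usually does.
SUSPICIOUS_MODULES = {"socket", "subprocess", "pty", "ctypes"}
SUSPICIOUS_CALLS = {"eval", "exec", "__import__", "os.system", "os.popen", "os.dup2"}
# Hostnames of the metadata server, where Service Agent tokens are served.
METADATA_HOSTS = ("metadata.google.internal", "169.254.169.254")


def _call_name(node: ast.Call) -> str:
    """Return a name like 'eval' or 'os.system' for simple call targets."""
    func = node.func
    if isinstance(func, ast.Name):
        return func.id
    if isinstance(func, ast.Attribute) and isinstance(func.value, ast.Name):
        return f"{func.value.id}.{func.attr}"
    return ""


def scan_tool_source(source: str) -> list[str]:
    """List suspicious imports, calls, and metadata-server references."""
    findings: list[str] = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                if alias.name.split(".")[0] in SUSPICIOUS_MODULES:
                    findings.append(f"line {node.lineno}: import {alias.name}")
        elif isinstance(node, ast.ImportFrom):
            module = node.module or ""
            if module.split(".")[0] in SUSPICIOUS_MODULES:
                findings.append(f"line {node.lineno}: import {module}")
        elif isinstance(node, ast.Call) and _call_name(node) in SUSPICIOUS_CALLS:
            findings.append(f"line {node.lineno}: call {_call_name(node)}")
        elif isinstance(node, ast.Constant) and isinstance(node.value, str):
            if any(host in node.value for host in METADATA_HOSTS):
                findings.append(f"line {node.lineno}: metadata server reference")
    return findings
```

A determined attacker can evade a static deny-list (for instance with `getattr` tricks or encoded strings), so a check like this complements human review and least-privilege roles rather than replacing them.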

Conclusion

The discovery of this vulnerability shows how default managed identities can turn low-privilege access into broad control over cloud AI resources. Organizations using Google’s Vertex AI should proactively audit the permissions held by their Service Agents and by the users who can reach the workloads those agents run, restrict interactive access, and stay informed about emerging threats. By doing so, they can protect their data, maintain operational integrity, and reduce their exposure to privilege escalation.