Cybercriminals Intensify Attacks on AI Deployments: Over 91,000 Incidents Recorded

Between October 2025 and January 2026, security researchers recorded more than 91,000 attack sessions targeting artificial intelligence (AI) infrastructure. The volume points to growing criminal interest in exploiting weaknesses in AI deployments.

Unveiling the Attack Campaigns

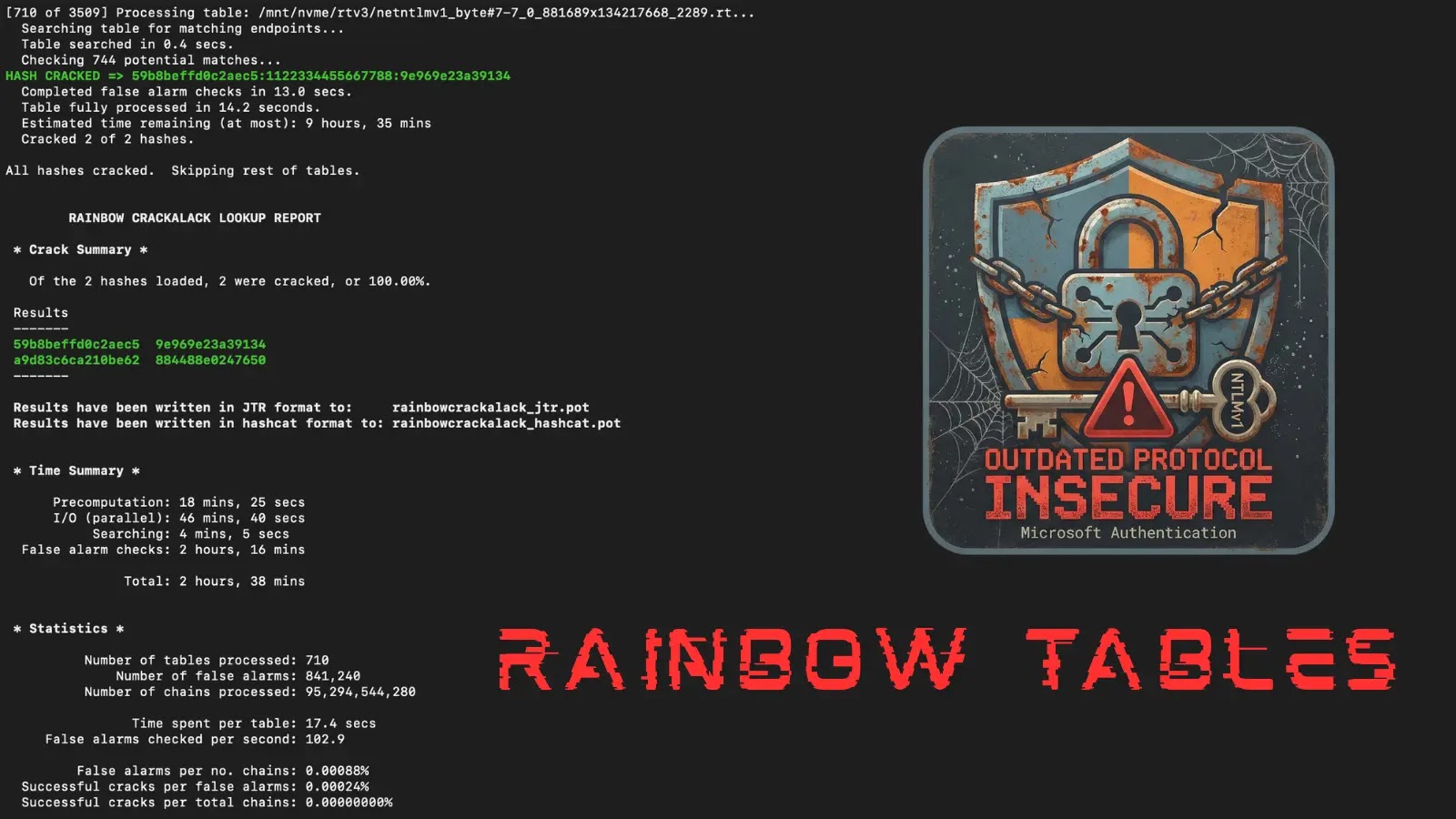

GreyNoise’s Ollama honeypot infrastructure captured 91,403 attack sessions during this period. Analysis of the traffic revealed two distinct threat campaigns:

1. Server-Side Request Forgery (SSRF) Exploitation: Attackers exploited SSRF vulnerabilities to coerce servers into making outbound connections to attacker-controlled infrastructure. They targeted Ollama’s model pull functionality by injecting malicious registry URLs, and Twilio SMS webhook integrations by manipulating the MediaUrl parameter. The campaign ran from October 2025 through January 2026 and spiked over the Christmas period, with 1,688 sessions in just 48 hours. Attackers used ProjectDiscovery’s OAST (out-of-band application security testing) infrastructure to confirm successful exploitation through callbacks.

2. Systematic Enumeration of AI Endpoints: Starting December 28, 2025, two IP addresses methodically probed more than 73 large language model (LLM) endpoints, generating 80,469 sessions over eleven days. The reconnaissance aimed to identify misconfigured proxy servers that could grant unauthorized access to commercial APIs. The probes covered request formats for many model families, including OpenAI GPT-4o, Anthropic Claude, Meta Llama 3.x, DeepSeek-R1, Google Gemini, Mistral, Alibaba Qwen, and xAI Grok. The queries were deliberately innocuous, such as “hi” and “How many states are there in the United States?”, likely to fingerprint which model responds without triggering security alerts.
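The enumeration pattern described above (a single source requesting many different model names) can be looked for in proxy or gateway logs. The following is a minimal sketch, not GreyNoise's detection logic: the `(source_ip, model_name)` record shape, the function name `flag_enumerators`, and the threshold of 10 distinct models are all assumptions chosen for illustration, and the sample addresses come from documentation ranges.

```python
from collections import defaultdict


def flag_enumerators(records, min_models=10):
    """Return {source_ip: distinct_model_count} for IPs at or above min_models.

    `records` is an iterable of (source_ip, model_name) pairs. This shape is
    hypothetical; in practice it would be parsed from your own proxy logs.
    """
    models_by_ip = defaultdict(set)
    for source_ip, model_name in records:
        # Normalise names so "GPT-4o" and "gpt-4o " count as one model.
        models_by_ip[source_ip].add(model_name.strip().lower())
    return {
        ip: len(models)
        for ip, models in models_by_ip.items()
        if len(models) >= min_models
    }


if __name__ == "__main__":
    # One source walks through 12 model names; another repeats a single model.
    sample = [("198.51.100.7", f"model-{i}") for i in range(12)]
    sample += [("203.0.113.9", "llama3"), ("203.0.113.9", "llama3")]
    print(flag_enumerators(sample))
```

Counting distinct model names rather than request volume is a deliberate choice here: a legitimate client may send many requests, but it rarely cycles through dozens of unrelated model families. The threshold would need tuning against real traffic.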

Indicators of Professional Threat Actors

The infrastructure used in these campaigns points to professional threat actors:

– IP Address 45.88.186.70 (AS210558, 1337 Services GmbH): Responsible for 49,955 sessions.

– IP Address 204.76.203.125 (AS51396, Pfcloud UG): Accounted for 30,514 sessions.

Both IPs have extensive histories of exploiting vulnerabilities, with over 4 million combined sensor hits across more than 200 vulnerabilities, including CVE-2025-55182 and CVE-2023-1389.
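As a minimal sketch of how the two addresses listed above might be matched against incoming connections, the snippet below uses Python's standard `ipaddress` module. The names `BLOCKED_SOURCES` and `is_blocked` are hypothetical, and the handling of IPv4-mapped IPv6 addresses is an added assumption about how a dual-stack listener might report clients. In most environments this check would live in a firewall or WAF rule rather than application code.

```python
import ipaddress

# The two source addresses named in the article.
BLOCKED_SOURCES = {
    ipaddress.ip_address("45.88.186.70"),
    ipaddress.ip_address("204.76.203.125"),
}


def is_blocked(addr: str) -> bool:
    """Return True if `addr` parses as one of the blocked source addresses."""
    try:
        ip = ipaddress.ip_address(addr.strip())
    except ValueError:
        # Not a valid IP literal, so it cannot match the blocklist.
        return False
    # Dual-stack listeners may report IPv4 clients as ::ffff:a.b.c.d.
    if ip.version == 6 and ip.ipv4_mapped is not None:
        ip = ip.ipv4_mapped
    return ip in BLOCKED_SOURCES


if __name__ == "__main__":
    for candidate in ("45.88.186.70", "::ffff:204.76.203.125", "192.0.2.10"):
        print(candidate, is_blocked(candidate))
```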

Mitigation Strategies

To defend against such sophisticated attacks, organizations should implement the following measures:

– Restrict Outbound Connections: Configure AI systems like Ollama to make outbound connections only to approved addresses, blocking all other outgoing traffic to prevent SSRF callbacks.

– Monitor and Block Malicious Indicators: Block network indicators associated with these campaigns, including the published JA4H HTTP client fingerprints, OAST callback domains, and source IP addresses.

– Enhance Security Posture: Regularly update and patch AI systems, conduct thorough security assessments, and implement robust monitoring to detect and respond to suspicious activities promptly.
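The outbound-restriction measure above can be complemented by an application-level check in front of the model pull endpoint. The sketch below rejects pull requests whose model reference names a registry host outside an allowlist. Several things here are assumptions: the default registry host `registry.ollama.ai`, the simplified `[scheme://][host/][namespace/]model[:tag]` parsing (which is not Ollama's own parser), and the helper names `registry_host` and `is_pull_allowed`. It is not a substitute for network-level egress filtering.

```python
# Assumption: the registry host used when a model reference names none.
DEFAULT_REGISTRY = "registry.ollama.ai"
ALLOWED_REGISTRIES = {DEFAULT_REGISTRY}


def registry_host(model_ref: str) -> str:
    """Extract the registry host from a model reference (simplified parsing)."""
    ref = model_ref.strip()
    if "://" in ref:
        # Drop an explicit scheme such as http:// or https://.
        ref = ref.split("://", 1)[1]
    parts = ref.split("/")
    if len(parts) < 3:
        # "model" or "namespace/model": no explicit host was given.
        return DEFAULT_REGISTRY
    # Treat the first path component as the host and drop any :port suffix.
    return parts[0].split(":", 1)[0].lower()


def is_pull_allowed(model_ref: str) -> bool:
    """Return True only if the reference resolves to an allowlisted registry."""
    if not model_ref.strip():
        return False
    return registry_host(model_ref) in ALLOWED_REGISTRIES


if __name__ == "__main__":
    for ref in ("llama3", "library/llama3:latest",
                "http://callback.example.net/x/model:latest"):
        print(ref, is_pull_allowed(ref))
```

The check fails closed for anything it does not recognise as allowlisted, which fits the article's recommendation to permit only approved destinations and block everything else.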

The scale and sophistication of these attacks highlight the urgent need for enhanced security measures within AI deployments. Organizations must remain vigilant and proactive in safeguarding their AI infrastructures against evolving cyber threats.