DIG AI: The Darknet’s Unrestricted AI Tool Empowering Cybercriminals

A formidable new tool has surfaced on the darknet, raising significant concern among cybersecurity experts. Dubbed DIG AI, this uncensored artificial intelligence platform gives threat actors new ways to automate cyberattacks, generate illicit content, and bypass the safety controls built into legitimate AI models.

Emergence and Rapid Adoption

Researchers at Resecurity first identified DIG AI on September 29, 2025. Its usage grew rapidly afterward, particularly in the fourth quarter of the year. That surge coincided with the winter holiday season, a period often marked by increased cybercriminal activity. The tool's emergence marks a notable escalation in the criminal use of AI, lowering the barrier to entry for sophisticated cyberattacks. It poses severe risks ahead of major global events in 2026, including the Winter Olympics in Milan and the FIFA World Cup.

Unrestricted Access and Anonymity

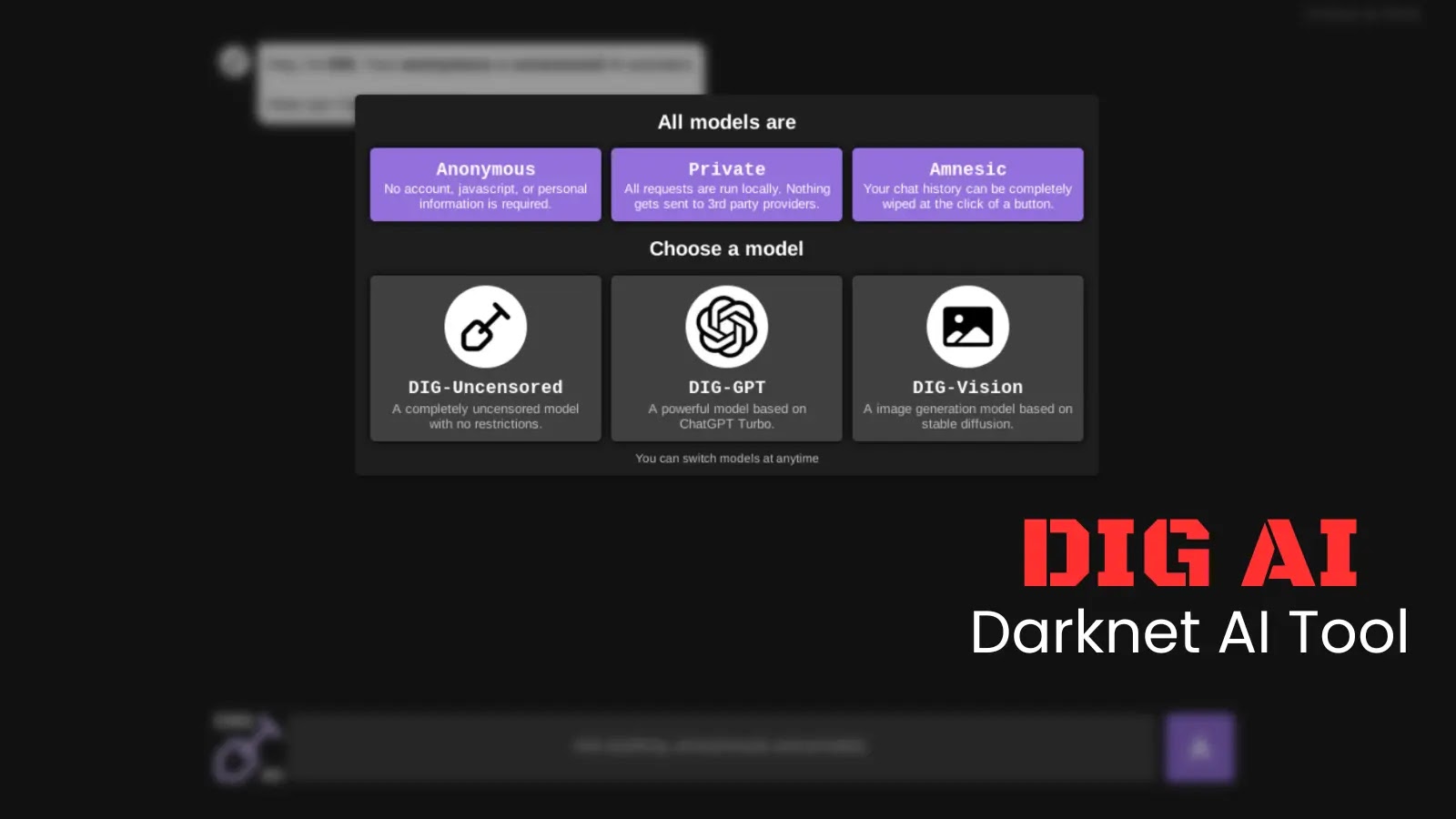

Unlike mainstream AI platforms that enforce strict ethical guidelines and require user registration, DIG AI operates with no such constraints. Accessible via the Tor network, it allows users to engage with its services anonymously, eliminating the need for account creation. This level of anonymity is particularly appealing to cybercriminals seeking to evade detection.

Specialized Models Catering to Illicit Activities

DIG AI offers a suite of specialized models tailored for various malicious purposes:

– DIG-Uncensored: A model devoid of any content restrictions, capable of generating prohibited text and code without ethical limitations.

– DIG-GPT: A powerful text generation model, reportedly based on a jailbroken version of ChatGPT Turbo, designed to produce sophisticated textual content.

– DIG-Vision: An image generation model based on Stable Diffusion, utilized for creating deepfakes and other illicit imagery.

These models are explicitly designed to bypass the safety guardrails present in legitimate AI systems, enabling users to produce content that would typically be restricted or flagged.

Promotion and Distribution

The operator behind DIG AI, known by the alias Pitch, actively promotes the service on underground marketplaces. These platforms often list a range of illicit goods and services, including narcotics and compromised financial data. By positioning DIG AI alongside these offerings, Pitch is effectively marketing the tool as an essential resource for cybercriminals.

Automating Malicious Code and Exploits

One of the most alarming capabilities of DIG AI is its proficiency in generating functional malicious code. Resecurity analysts have demonstrated the tool’s ability to create obfuscated JavaScript backdoors designed to compromise web applications. These backdoors can be used to steal user data, redirect traffic to phishing sites, or inject additional malware into systems.

In Resecurity's tests, the tool accepted requests to generate a malicious script and then obfuscate it, producing code that is stealthy and difficult to detect. Complex operations such as obfuscation can take several minutes because of limited computing resources, so the operators offer paid premium tiers that speed up processing. This effectively creates a Crime-as-a-Service model for AI, in which users pay for enhanced capabilities and faster results.

Comparison with Legitimate AI Platforms

To understand the stark differences between DIG AI and legitimate AI platforms, consider the following comparison:

| Feature | DIG AI | Legitimate AI (e.g., ChatGPT) |
|---|---|---|
| Access | Darknet (Tor), no account | Public internet, account required |
| Censorship | None (uncensored) | Strict safety filters |
| Primary use | Malware, fraud, CSAM | Productivity, coding, learning |
| Cost model | Free / premium for speed | Free / subscription |
| Infrastructure | Hidden / bulletproof hosting | Cloud infrastructure |

This table highlights the fundamental differences in access, content moderation, intended use, pricing structures, and hosting environments between DIG AI and mainstream AI platforms.

Facilitating Real-World Harm

Beyond cybercrime, DIG AI is being weaponized to cause severe real-world harm. The tool has been observed generating detailed instructions for manufacturing explosives and prohibited drugs. Most critically, the DIG-Vision model facilitates the creation of Child Sexual Abuse Material (CSAM). Resecurity confirmed that the tool can generate hyper-realistic synthetic images or manipulate real photos of minors, creating a nightmare scenario for child safety advocates and law enforcement.

Resecurity analysts note that this presents a new challenge for legislators. Because offenders can run these models on their own infrastructure, they can produce unlimited illegal content that never passes through the online platforms whose moderation systems might otherwise detect it.

The Evolution of Malicious AI Tools

DIG AI represents the latest evolution of so-called "Not Good AI" tools, also known as dark LLMs or jailbroken chatbots. Following predecessors such as FraudGPT and WormGPT, these tools are growing rapidly. Mentions of malicious AI on cybercriminal forums have increased significantly, pointing to a broader trend of criminals adopting AI for illicit purposes.

Implications for Cybersecurity

The emergence of DIG AI underscores the urgent need for enhanced cybersecurity measures and regulatory frameworks to address the misuse of artificial intelligence. As AI technology continues to advance, it is imperative for both the public and private sectors to collaborate in developing strategies to mitigate the risks associated with its exploitation by malicious actors.

In conclusion, DIG AI exemplifies the double-edged nature of technological advancement. While AI holds immense potential for positive applications, its misuse on platforms like DIG AI highlights the pressing need for vigilance, regulation, and proactive measures to prevent its exploitation by cybercriminals.