Revolutionizing Low-Light Photography: Apple’s AI-Driven DarkDiff Model Enhances iPhone Images

Capturing high-quality images in low-light conditions has long been a challenge for smartphone photography. Small image sensors often struggle to gather sufficient light, resulting in photos plagued by grainy noise and diminished detail. To address this, Apple, in collaboration with Purdue University, has introduced an AI model named DarkDiff, which aims to improve low-light photos by integrating diffusion-based image processing directly into the camera’s image signal processor (ISP) pipeline.

Understanding the Low-Light Photography Challenge

When photographing in dim environments, the limited light available to the camera sensor leads to images with high levels of digital noise and reduced clarity. Conventional methods attempt to mitigate these issues through post-processing algorithms that often over-smooth the image, sacrificing fine details and sometimes producing unnatural, oil-painting-like effects. This trade-off between noise reduction and detail preservation has been a persistent hurdle in digital photography.

Introducing DarkDiff: A Novel Approach

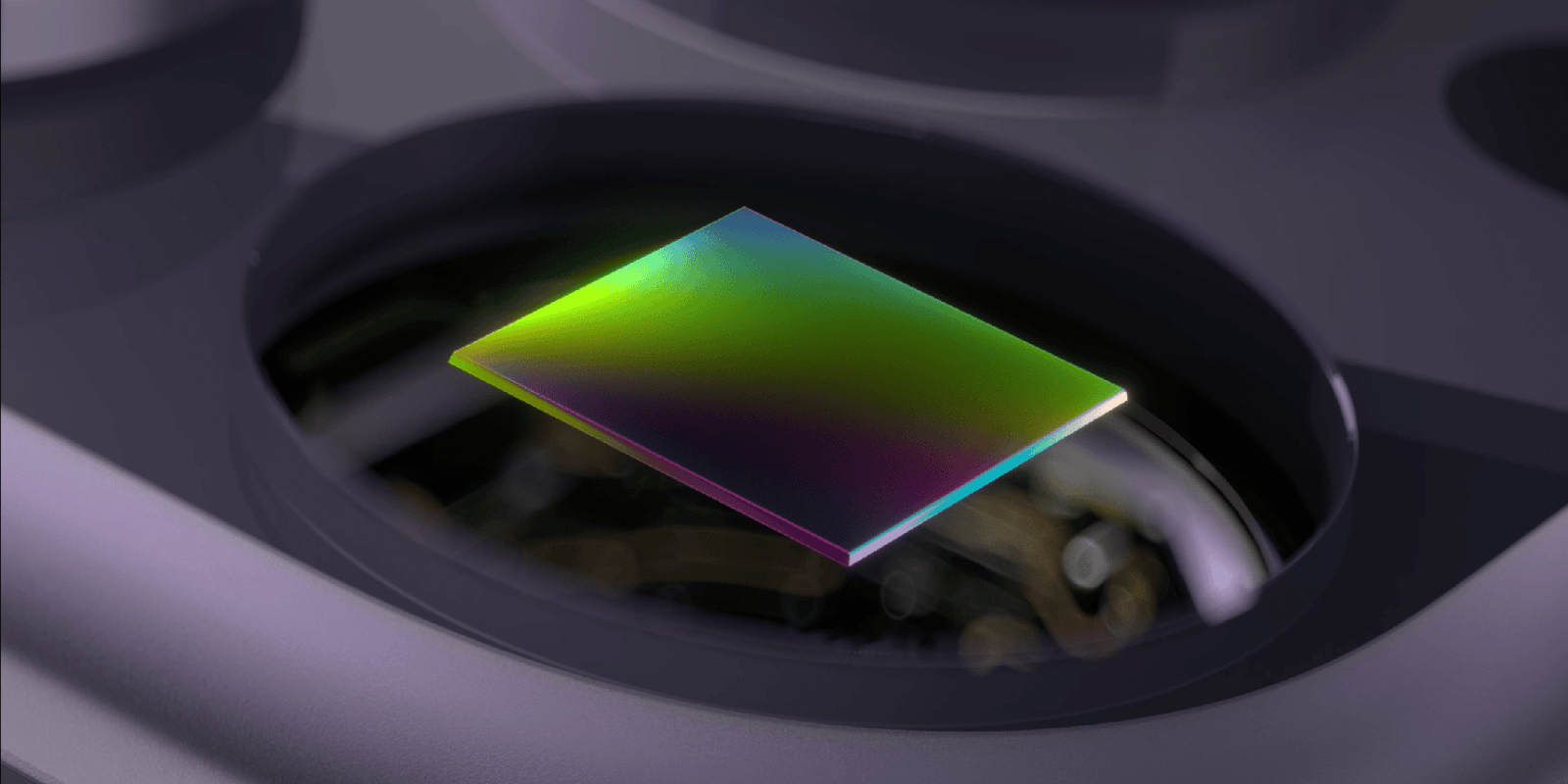

DarkDiff takes a different approach to low-light photography. Detailed in the study titled “DarkDiff: Advancing Low-Light Raw Enhancement by Retasking Diffusion Models for Camera ISP,” the model leverages pre-trained generative diffusion models to enhance raw images captured in minimal lighting. Unlike traditional approaches that apply AI to a finished photo during post-processing, DarkDiff integrates AI directly into the ISP pipeline, so enhancement happens while raw sensor data is still being turned into the final image.

How DarkDiff Works

The core innovation of DarkDiff lies in its ability to reconstruct details in dark areas of a photo by considering the overall context of the image. By retasking Stable Diffusion, a widely recognized open-source model trained on millions of images, DarkDiff can predict and restore details that would typically be lost in low-light conditions. Because the model works inside the ISP pipeline, it operates on image data before that data has been converted to its final sRGB form, which leads to more natural and detailed photographs.

A key feature of DarkDiff is its localized attention mechanism, which computes attention over local image patches. Restricting attention in this way preserves local structures and reduces hallucinations, that is, details the model invents that were not present in the original scene. This targeted approach helps maintain the authenticity of the image while effectively reducing noise.
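To make the idea of localized attention more concrete, here is a minimal sketch in plain Python. It is not DarkDiff’s actual mechanism, which the article does not specify; it is a generic windowed softmax attention over a 1-D sequence of patch features. The function name `local_attention` and the `radius` parameter are hypothetical and chosen only for illustration.

```python
import math


def local_attention(queries, keys, values, radius):
    """Softmax attention in which position i only sees positions
    i - radius .. i + radius (a generic windowed attention, used here
    as an illustrative stand-in, not DarkDiff's real layer)."""
    n = len(queries)
    dim = len(values[0])
    outputs = []
    for i, q in enumerate(queries):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        window = range(lo, hi)
        # Scaled dot-product scores against keys inside the window only.
        scores = [
            sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(len(q))
            for j in window
        ]
        # Numerically stable softmax weights.
        peak = max(scores)
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        # Weighted average of the values inside the window.
        out = [
            sum(w * values[j][d] for w, j in zip(weights, window)) / total
            for d in range(dim)
        ]
        outputs.append(out)
    return outputs
```

Because each output is an average of nearby values only, a distant patch cannot influence the result, which is the intuition behind keeping reconstruction tied to local structure.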

The Technical Process

In practical terms, DarkDiff operates by first allowing the camera’s ISP to perform initial processing tasks such as white balance adjustment and demosaicing, which are essential for interpreting raw sensor data. Following this, DarkDiff applies its denoising algorithms to the linear RGB image, culminating in the production of a final sRGB image that exhibits enhanced clarity and detail, even in extremely low-light scenarios.
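The order of operations described above can be sketched for a single pixel. This is a simplified illustration under stated assumptions, not the study’s implementation: demosaicing is skipped (the input is assumed to be linear RGB already), the white-balance gains are made-up values, and `placeholder_denoise` is a hypothetical stand-in for the learned enhancement step. Only the linear-to-sRGB transfer function follows the standard sRGB definition.

```python
def apply_white_balance(rgb, gains):
    """Scale each linear channel by its gain and clip to [0, 1]."""
    return tuple(min(max(c * g, 0.0), 1.0) for c, g in zip(rgb, gains))


def placeholder_denoise(rgb):
    """Hypothetical stand-in for the learned enhancement step.
    It returns the pixel unchanged; a real model would go here."""
    return rgb


def linear_to_srgb(c):
    """Standard sRGB encoding of one linear channel value in [0, 1]."""
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1 / 2.4) - 0.055


def process_pixel(rgb, gains=(2.0, 1.0, 1.5)):
    """White balance -> enhancement on linear RGB -> sRGB encoding.
    The default gains are illustrative assumptions only."""
    balanced = apply_white_balance(rgb, gains)
    restored = placeholder_denoise(balanced)
    return tuple(linear_to_srgb(c) for c in restored)
```

The point of the sketch is the ordering: enhancement runs on linear data, and the gamma-like sRGB encoding is applied only at the end.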

The model also employs a technique known as classifier-free guidance, which balances the influence of the input image with the model’s learned visual priors. By adjusting this guidance, DarkDiff can control the sharpness and texture of the output image, providing flexibility in achieving the desired aesthetic while minimizing the risk of introducing unwanted artifacts.
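For readers unfamiliar with classifier-free guidance, the sketch below shows its commonly used form: the model makes one prediction with conditioning and one without, and the guidance scale extrapolates from the second toward the first. The article does not give the exact formulation used in DarkDiff, so the function and the parameter name `scale` are assumptions for illustration.

```python
def classifier_free_guidance(uncond, cond, scale):
    """Blend two model predictions, element by element.

    uncond: prediction made without the conditioning input
    cond:   prediction made with the conditioning input
    scale:  guidance strength; 0 returns uncond, 1 returns cond,
            and values above 1 push further in the cond direction
    """
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]
```

In this form, a single number controls how strongly the conditioned prediction dominates, which is why adjusting it offers a simple way to trade off sharpness against the risk of artifacts.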

Performance and Evaluation

To assess the effectiveness of DarkDiff, researchers conducted extensive experiments using real photos taken in extremely low-light conditions with cameras like the Sony A7SII. The model’s performance was compared against other raw enhancement models and diffusion-based baselines, including ExposureDiffusion. Test images were captured at night with exposure times as short as 0.033 seconds, and DarkDiff’s enhanced versions were evaluated against reference photos taken with significantly longer exposure times on a tripod.

The results demonstrated that DarkDiff outperformed existing methods in terms of perceptual quality, successfully recovering sharp image details and accurate colors that were otherwise lost in the original low-light captures. These findings underscore the potential of integrating AI-driven models like DarkDiff into camera ISPs to improve low-light photography.

Challenges and Considerations

Despite its promising capabilities, DarkDiff is not without its challenges. The computational demands of the model are substantial, leading to slower processing times compared to traditional methods. This could necessitate cloud-based processing solutions to alleviate the strain on device hardware and battery life. Additionally, the model has shown limitations in recognizing non-English text in low-light scenes, indicating areas for further refinement.

It’s important to note that while DarkDiff represents a significant advancement in computational photography, there is no indication from the study that it will be implemented in iPhones in the immediate future. However, this research highlights Apple’s ongoing commitment to pushing the boundaries of smartphone photography through innovative AI applications.

The Broader Context of Computational Photography

The development of DarkDiff aligns with a broader trend in the smartphone industry toward leveraging computational photography to overcome the physical limitations of camera hardware. As consumers demand higher-quality images from their mobile devices, companies are increasingly turning to AI and machine learning to enhance image processing capabilities.

For instance, Apple’s Deep Fusion technology, introduced with the iPhone 11, utilizes advanced machine learning to optimize texture, details, and noise in medium to low-light conditions. Similarly, Night mode, available on iPhone 11 models and newer, automatically adjusts exposure settings to improve photos taken in dark environments. These features exemplify the industry’s move toward integrating AI-driven solutions to enhance photographic outcomes.

Looking Ahead

The introduction of DarkDiff signifies a potential leap forward in low-light photography, offering a glimpse into the future of AI-integrated image processing. As research in this area continues to evolve, we can anticipate further innovations that will enable smartphones to capture stunning images in a wide range of lighting conditions, bringing professional-quality photography into the hands of everyday users.