Meta’s AI Glasses Introduce Enhanced Conversation Clarity and Visual-Audio Integration

Meta has released an update for its AI-powered eyewear, the Ray-Ban Meta and Oakley Meta HSTN smart glasses. The update adds two headline features: one that makes conversations easier to hear in noisy environments, and one that links what the wearer is looking at to audio playback.

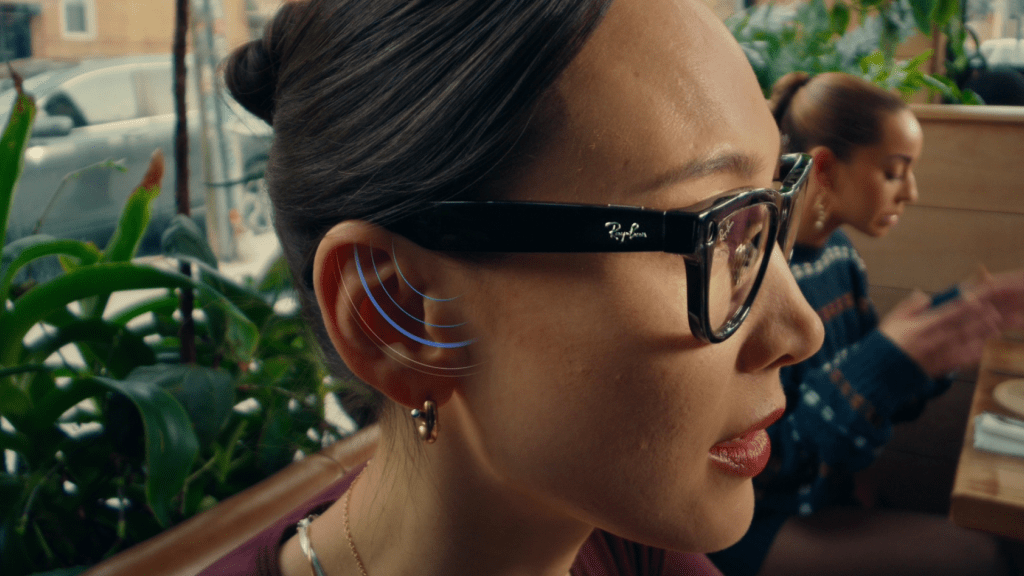

Enhanced Conversation Clarity

The new conversation-focus feature helps users hear conversations over ambient noise. Using the glasses’ open-ear speakers, it amplifies the voice of the person directly in front of the wearer. Users can adjust the amplification level by swiping the right temple of the glasses or through the device settings, so the boost can be matched to the setting, whether a bustling restaurant, crowded public transport, or a lively social gathering. ([tech.yahoo.com](https://tech.yahoo.com/ar-vr/articles/meta-ai-glasses-now-help-183055761.html/?utm_source=openai))

The feature puts Meta’s smart glasses alongside other assistive audio products. Apple’s AirPods, for instance, offer a Conversation Boost feature that enhances the voice of the person speaking directly to the user, and the Pro models now support a clinical-grade hearing aid feature. ([tech.yahoo.com](https://tech.yahoo.com/ar-vr/articles/meta-ai-glasses-now-help-183055761.html/?utm_source=openai))

Visual-Audio Integration with Spotify

The update also adds a feature that ties what the wearer sees to audio playback through Spotify. When the wearer looks at an album cover, for example, the glasses can prompt Spotify to play a song by that artist. Similarly, looking at festive decorations such as a Christmas tree with gifts can trigger holiday music. The integration reflects Meta’s effort to connect what users see with actions they can take by audio. ([tech.yahoo.com](https://tech.yahoo.com/ar-vr/articles/meta-ai-glasses-now-help-183055761.html/?utm_source=openai))

Accessibility and Assistive Features

Meta has also expanded the accessibility features of its smart glasses to support users with visual impairments. Through a partnership with Be My Eyes, the “Call a Volunteer” feature connects users with sighted volunteers for real-time assistance. Saying “Hey Meta, call Be My Eyes” starts a video call in which a volunteer can provide guidance based on the live feed from the glasses’ camera. The service is now available in 18 countries where Meta AI is supported. ([immersivelearning.news](https://www.immersivelearning.news/2025/05/20/meta-expands-assistive-features-across-smart-glasses-and-ai-tools/?utm_source=openai))

In addition, the “Detailed Responses” feature gives more descriptive audio feedback about the user’s surroundings. Once it is enabled in the Accessibility settings of the Meta AI app, users can request a detailed description by saying “Hey Meta, describe what I’m seeing.” The response covers objects, colors, and other environmental details, improving situational awareness for users who are blind or have low vision. ([uploadvr.com](https://www.uploadvr.com/ray-ban-meta-glasses-meta-ai-detailed-responses-blind-low-vision/?utm_source=openai))

User Experience and Feedback

User feedback has also highlighted areas for improvement. Some users report problems with audio routing, noting that the glasses’ internal speakers may override other audio devices, such as hearing aids or cochlear implants. This is particularly problematic in settings where external audio output is inappropriate. Users who rely on personal audio equipment may therefore need to check the device settings for compatibility. ([helenkeller.org](https://www.helenkeller.org/meta-ray-ban-smart-glasses-deafblind-accessibility-review/?utm_source=openai))

The reliance on voice commands for certain features, such as the “look and tell” function, may also pose difficulties for users who are deafblind or who prefer non-verbal interaction. Alternative activation methods, such as using the Capture button to trigger specific features, could improve accessibility and user autonomy. ([helenkeller.org](https://www.helenkeller.org/meta-ray-ban-smart-glasses-deafblind-accessibility-review/?utm_source=openai))

Privacy and Ethical Considerations

The integration of advanced audio and visual capabilities in wearable devices raises important privacy and ethical questions. The discreet design of the glasses, resembling conventional eyewear, may lead to concerns about unauthorized recording or surveillance. Although the glasses feature a small LED light that activates during recording, the effectiveness of this indicator, especially in low-light conditions, has been questioned. Ensuring user awareness and consent in public and private spaces remains a critical aspect of deploying such technologies. ([en.wikipedia.org](https://en.wikipedia.org/wiki/Ray-Ban_Meta?utm_source=openai))

Conclusion

Meta’s latest updates to its AI glasses focus on clearer conversation audio and on linking what the wearer sees to audio playback, alongside expanded accessibility features. How these devices develop and how widely they are adopted will depend on continued attention to user feedback, privacy, and the ethical questions they raise.