Cybercriminals Exploit AI Trust to Deploy AMOS Stealer via ChatGPT and Grok

In a concerning development, cybercriminals are exploiting the trust users place in AI platforms such as ChatGPT and Grok to distribute the Atomic macOS Stealer (AMOS). The campaign, identified by Huntress on December 5, 2025, marks a notable shift in delivery tactics: instead of hosting lures on attacker-controlled sites, it uses legitimate AI services to host the instructions that lead victims to install the malware.

The Infection Chain:

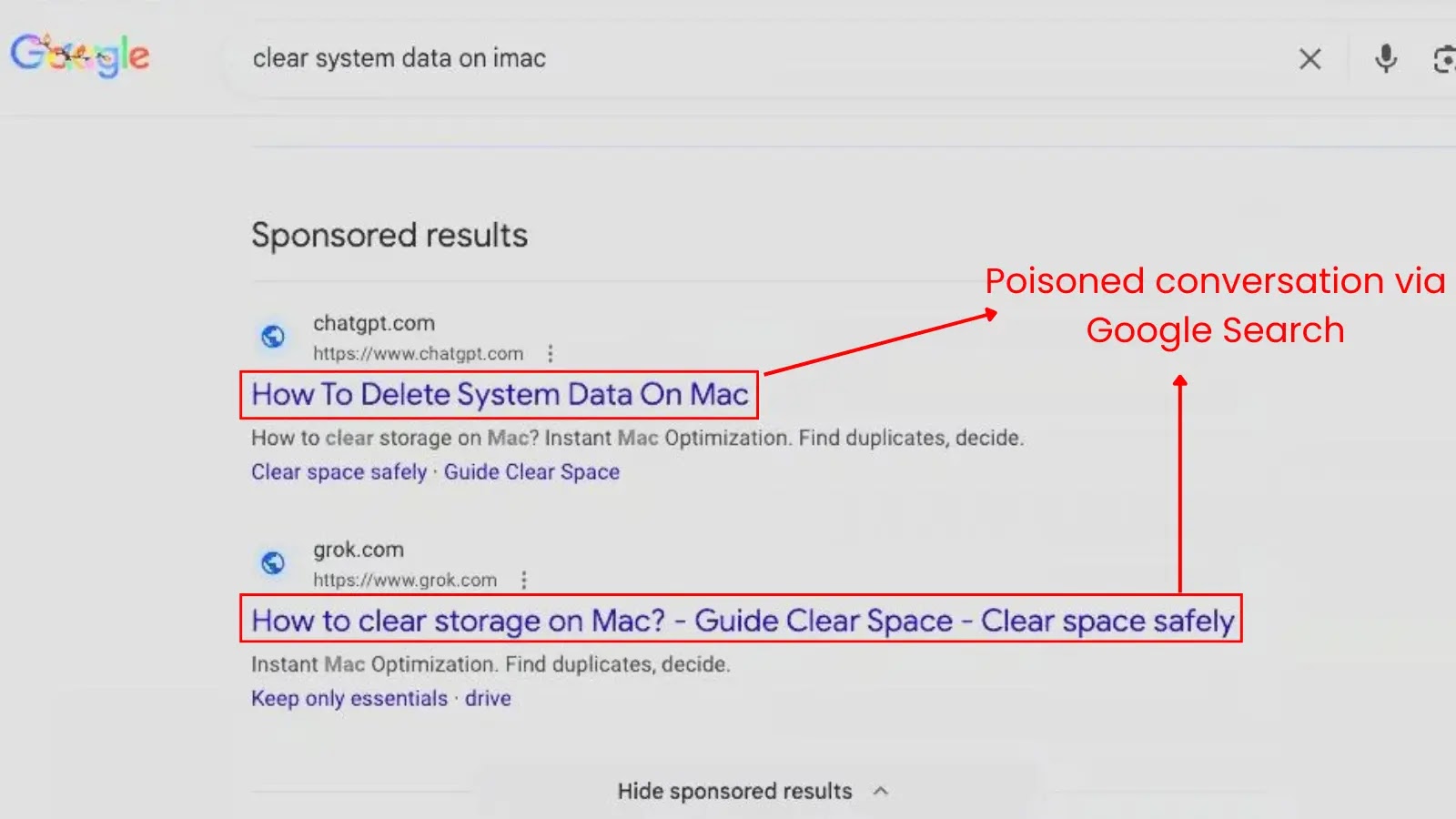

The attack begins with an innocuous-looking Google search. Users looking for help with common issues, such as "Clear disk space on macOS", encounter high-ranking results that appear to be helpful guides hosted on reputable domains like chatgpt.com and grok.com. Unlike traditional SEO poisoning, which redirects users to compromised websites, these links lead to genuine shared conversations on the OpenAI and xAI platforms themselves.
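Because the lure is hosted on the legitimate domains themselves, blocking those domains is not practical. One hedged option for defenders is to flag visits that land on a shared AI conversation directly from a search result. The sketch below assumes, without confirmation from Huntress, that public conversation links on chatgpt.com and grok.com use a `/share/` path prefix; the function name `is_shared_ai_conversation` is hypothetical.

```python
from urllib.parse import urlparse

# Assumption: public conversation links on these platforms live under "/share/".
# This is an illustrative heuristic, not a documented contract of either service.
AI_SHARE_HOSTS = {"chatgpt.com", "grok.com"}


def is_shared_ai_conversation(url: str) -> bool:
    """Return True if the URL looks like a public shared conversation on an AI platform."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    # Accept the bare domain and real subdomains (e.g. www.chatgpt.com),
    # but not look-alike hosts such as evilchatgpt.com.
    host_match = any(host == h or host.endswith("." + h) for h in AI_SHARE_HOSTS)
    return host_match and parsed.path.startswith("/share/")
```

A match is not evidence of compromise on its own; it only marks a click worth correlating with later Terminal activity on the same host.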

Clicking the link opens a professional-looking troubleshooting guide. The conversation, crafted by the attacker, instructs the user to open the macOS Terminal and run a command that supposedly clears system data safely. Given the perceived credibility of the AI assistant and the trusted domain, users are more likely to follow these instructions without question.

Technical Execution:

According to Huntress’ analysis, the command the user runs does not download a conventional file that would trigger macOS Gatekeeper warnings. Instead, it runs a base64-encoded script that downloads a variant of the AMOS stealer. The malware then uses a living-off-the-land technique, relying on native macOS utilities to harvest credentials without displaying a graphical prompt.
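To triage a suspicious command like this without running it, an analyst can extract and decode the base64 blob. The following is a minimal Python sketch, not a Huntress signature: it assumes the lure follows the common `echo <blob> | base64 -d | bash` shape, and both the regular expression and the helper `inspect_command` are hypothetical.

```python
import base64
import binascii
import re
from typing import Optional

# Assumed lure shape: echo/printf a base64 blob, decode it, pipe the result into a shell.
# Real campaigns vary, so treat this as a starting point rather than a signature.
B64_PIPE = re.compile(
    r"""(?:echo|printf)\s+['"]?(?P<blob>[A-Za-z0-9+/=]{16,})['"]?\s*\|\s*base64\s+(?:-d|-D|--decode)\s*\|\s*(?:ba|z)?sh\b"""
)


def inspect_command(cmdline: str) -> Optional[str]:
    """Return the decoded script text if cmdline pipes a base64 blob into a shell.

    The decoded text is only returned for review; nothing is ever executed.
    """
    match = B64_PIPE.search(cmdline)
    if match is None:
        return None
    try:
        decoded = base64.b64decode(match.group("blob"), validate=True)
    except binascii.Error:
        # Not valid base64 after all; leave it for manual review.
        return None
    return decoded.decode("utf-8", errors="replace")
```

Decoding statically like this lets a reviewer see the download URL and follow-on commands while keeping the payload off the analysis machine.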

The malware uses the native `dscl` utility to silently validate the user’s password in the background. Once validated, the password is piped into `sudo -S` to grant root privileges, allowing the malware to install persistence mechanisms and exfiltrate data without further user interaction.
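The `dscl`-then-`sudo -S` sequence is itself a detection opportunity. The sketch below assumes a simplified, hypothetical process-event record (parent PID plus argument vector) rather than any particular EDR schema, and flags parent processes that spawned both a `dscl` call containing `-authonly` and a `sudo` call with `-S`.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Set


@dataclass
class ProcessEvent:
    """Hypothetical, simplified process-exec record (not a specific EDR's schema)."""
    ppid: int
    argv: List[str] = field(default_factory=list)


def _basename(path: str) -> str:
    # "/usr/bin/dscl" -> "dscl"
    return path.rsplit("/", 1)[-1]


def find_dscl_sudo_chains(events: Iterable[ProcessEvent]) -> Set[int]:
    """Return parent PIDs that spawned both `dscl ... -authonly` and `sudo -S`."""
    seen: Dict[int, Set[str]] = defaultdict(set)
    for ev in events:
        if not ev.argv:
            continue
        prog = _basename(ev.argv[0])
        if prog == "dscl" and "-authonly" in ev.argv[1:]:
            seen[ev.ppid].add("dscl_authonly")
        elif prog == "sudo" and "-S" in ev.argv[1:]:
            seen[ev.ppid].add("sudo_stdin")
    # Only parents that exhibit both halves of the pattern are reported.
    return {ppid for ppid, tags in seen.items() if tags >= {"dscl_authonly", "sudo_stdin"}}
```

Real telemetry would need more care: combined flags such as `-Sk`, wrapper shells that change the parent PID, and legitimate admin scripts will all affect accuracy.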

Indicators of Compromise:

Security researchers have identified several artifacts and behaviors associated with this campaign:

– Persistence Mechanism: A hidden executable is dropped in the user’s home directory, and a LaunchDaemon is registered at `/Library/LaunchDaemons/com.finder.helper.plist` to maintain persistence.

– Credential Validation: The malware uses the `dscl -authonly` command to silently check whether the captured password is correct.

– Privilege Escalation: The `sudo -S` command is used to accept the password via standard input for root access.

– Temporary Files: A temporary file, `/tmp/.pass`, is used to store the plaintext password during escalation.

– Network Activity: Known command-and-control (C2) URLs are used for initial payload delivery.
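A quick sweep for the file-based indicators above might look like the following sketch. It checks only the two concrete paths named in this list; the hidden home-directory executable is omitted because its name is not given here. The `root` parameter and the helper `sweep_file_iocs` are assumptions added so the check can also be run against a mounted disk image.

```python
from pathlib import Path
from typing import List

# File-based indicators named in the list above, relative to the filesystem root.
FILE_IOCS = [
    "Library/LaunchDaemons/com.finder.helper.plist",
    "tmp/.pass",
]


def sweep_file_iocs(root: str = "/") -> List[str]:
    """Return the indicator paths (relative to root) that exist under root."""
    base = Path(root)
    return [ioc for ioc in FILE_IOCS if (base / ioc).exists()]
```

An empty result is not proof of a clean system: files under `/tmp` may not survive a reboot, and later variants may use different names.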

Exploiting Behavioral Trust:

This campaign is particularly dangerous because it exploits behavioral trust rather than technical vulnerabilities. The lure sits on trusted AI platforms, and the user runs the command in Terminal themselves, so the attack sidesteps traditional defenses such as Gatekeeper. It reflects a broader trend of attackers abusing users’ trust in reputable services.

Recommendations for Defense:

Security teams are advised to monitor for anomalous `osascript` execution and unusual `dscl` usage, particularly when associated with `curl` commands. For end users, the primary defense is behavioral: routine maintenance such as freeing disk space does not require pasting opaque, encoded commands into Terminal, and any guide that asks for this, even one hosted on a trusted AI platform, should be treated as suspicious.
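The monitoring advice above can be expressed as a simple correlation rule. This sketch assumes hypothetical exec events carrying a timestamp, parent PID, and program name, and uses an arbitrary 60-second window; both the schema and the threshold are illustrative, not values published by Huntress.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class ExecEvent:
    """Hypothetical process-exec record: seconds since epoch, parent PID, program basename."""
    ts: float
    ppid: int
    prog: str


WATCHED = {"osascript", "dscl"}


def curl_then_watched(
    events: List[ExecEvent], window_s: float = 60.0
) -> List[Tuple[ExecEvent, ExecEvent]]:
    """Pair each osascript/dscl exec with the latest earlier curl exec from the same parent within window_s."""
    hits: List[Tuple[ExecEvent, ExecEvent]] = []
    last_curl: Dict[int, ExecEvent] = {}  # ppid -> most recent curl seen so far
    for ev in sorted(events, key=lambda e: e.ts):
        if ev.prog == "curl":
            last_curl[ev.ppid] = ev
        elif ev.prog in WATCHED:
            prior = last_curl.get(ev.ppid)
            if prior is not None and ev.ts - prior.ts <= window_s:
                hits.append((prior, ev))
    return hits
```

Keying on the parent PID keeps unrelated `curl` traffic elsewhere on the host from triggering the rule; the window should be tuned against local baselines.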

The use of trusted AI domains as hosting infrastructure for lures presents a new challenge for defenders, who must now scrutinize traffic to these platforms for malicious patterns. Because these domains cannot simply be blocked, endpoint monitoring for the behaviors described above becomes all the more important.