Amazon’s Trainium2 Chip: A Multibillion-Dollar Game Changer in AI Computing

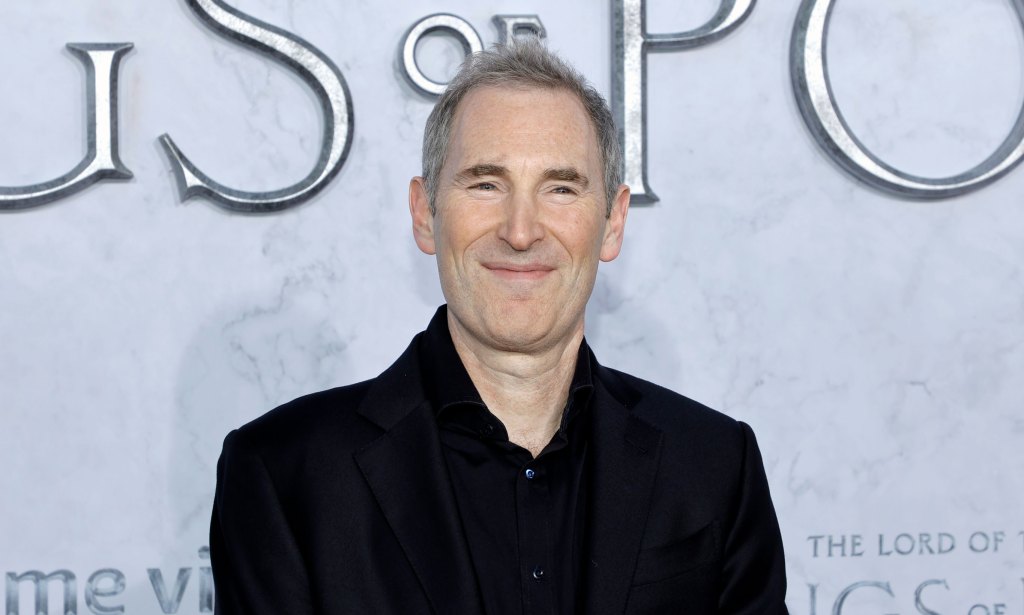

At the AWS re:Invent conference, Amazon CEO Andy Jassy announced that the company’s AI chip, Trainium2, has grown into a multibillion-dollar business. The milestone underscores Amazon’s push to challenge Nvidia’s dominance in the AI hardware sector.

The unveiling of Trainium3, the successor to Trainium2, marked a pivotal moment at the conference. Trainium3 boasts a fourfold increase in processing speed while consuming less power compared to its predecessor. This advancement highlights Amazon’s commitment to enhancing AI infrastructure efficiency and performance.

Jassy shared figures on Trainium2’s adoption. The chip is operating at a multibillion-dollar revenue run rate, with over one million units in production and more than 100,000 companies using it, making it a cornerstone of Amazon’s AI services. Notably, it serves as the primary hardware for Amazon Bedrock, the company’s AI application development platform that offers a selection of AI models for businesses.

The success of Trainium2 is attributed to its price-performance ratio. Jassy emphasized that the chip holds an advantage over GPU alternatives, delivering superior performance at a lower cost. This approach aligns with Amazon’s longstanding strategy of providing cost-effective, in-house technology to its vast customer base.

AWS CEO Matt Garman provided further insights into Trainium2’s market impact, highlighting its significant adoption by Anthropic, an AI research company. Anthropic has integrated over 500,000 Trainium2 chips into Project Rainier, Amazon’s ambitious AI server cluster designed to support the development of advanced AI models like Claude. This collaboration underscores the chip’s capability to handle large-scale AI workloads efficiently.

Project Rainier, which became operational in October, represents Amazon’s most extensive AI infrastructure initiative to date. Spanning multiple data centers across the United States, it is tailored to meet the escalating demands of AI research and development. Amazon’s substantial investment in Anthropic, coupled with the latter’s decision to designate AWS as its primary model training partner, reflects a deepening partnership aimed at advancing AI technologies.

Competition in AI hardware is intensifying, with major tech companies striving to carve out significant market share. Nvidia, a longstanding leader in AI chips, has been expanding its portfolio through strategic investments and collaborations. For instance, Nvidia’s recent $2 billion investment in Synopsys aims to integrate AI capabilities into chip design processes, enhancing the efficiency of semiconductor development.

Additionally, Nvidia’s acquisition of a $5 billion stake in Intel signifies a collaborative effort to develop data center and PC products that combine Nvidia’s GPU expertise with Intel’s CPU capabilities. This partnership is poised to deliver integrated solutions that cater to the growing demands of AI applications.

In parallel, other players are making significant strides. AMD’s agreement to supply 6 gigawatts of compute capacity to OpenAI, involving multiple generations of its Instinct GPUs, underscores the escalating investments in AI infrastructure. This deal, valued in the tens of billions of dollars, highlights the critical role of hardware in supporting complex AI models.

Furthermore, AI data center providers like Lambda are securing substantial funding to expand their infrastructure. Lambda’s recent $1.5 billion funding round, led by TWG Global, aims to enhance its AI data centers, reflecting the surging demand for AI computational resources.

Amazon’s foray into AI hardware with Trainium2 and the forthcoming Trainium3 positions the company as a formidable competitor in the AI chip market. By offering high-performance, cost-effective alternatives to existing GPUs, Amazon is not only diversifying its service offerings but also challenging established players like Nvidia.

The rapid adoption of Trainium2 by a diverse range of companies indicates a shift in the AI hardware landscape. Businesses are increasingly seeking alternatives that provide both performance and cost benefits. Amazon’s strategy of integrating its hardware solutions with its cloud services offers a seamless experience for customers, further driving adoption.

As the AI industry continues to evolve, the competition among hardware providers is expected to intensify. Companies that can deliver innovative, efficient, and cost-effective solutions will likely gain a competitive edge. Amazon’s investment in AI hardware development, exemplified by the success of Trainium2 and the anticipated impact of Trainium3, underscores its commitment to leading this technological frontier.

In conclusion, Amazon’s Trainium2 chip has not only achieved significant commercial success but also signaled a transformative shift in the AI hardware market. With the upcoming release of Trainium3, Amazon is poised to further disrupt the industry, offering compelling alternatives to traditional GPU solutions and reinforcing its position as a leader in AI innovation.