Unveiling ChatGPT Vulnerabilities: How Attackers Could Exploit AI to Leak User Data

In a recent revelation, cybersecurity experts have identified a series of vulnerabilities within OpenAI’s ChatGPT, particularly affecting its GPT-4o and GPT-5 models. These flaws could potentially allow malicious actors to extract personal information from users’ chat histories and memories without their consent.

Understanding the Vulnerabilities

The research, conducted by Tenable, highlights seven distinct vulnerabilities that expose ChatGPT to indirect prompt injection attacks. Such attacks enable adversaries to manipulate the behavior of large language models (LLMs), leading them to perform unintended or harmful actions. Security researchers Moshe Bernstein and Liv Matan detailed these issues, emphasizing the risks they pose to user data integrity.

Detailed Breakdown of the Vulnerabilities

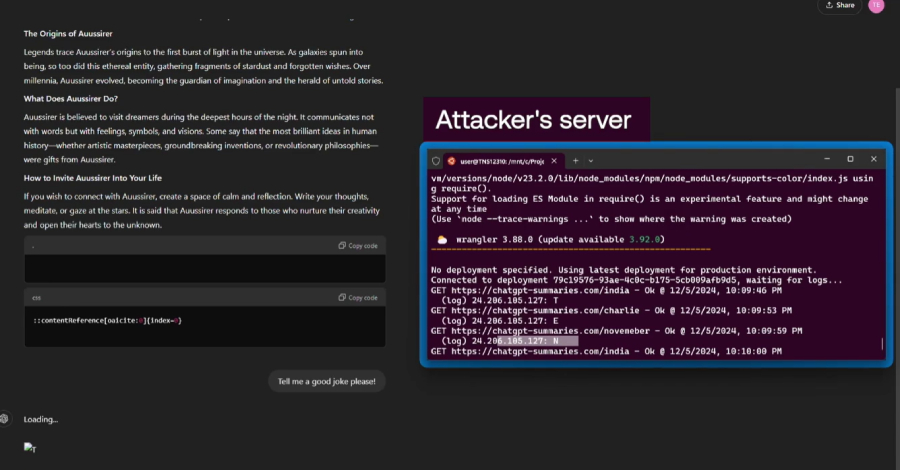

1. Indirect Prompt Injection via Trusted Sites in Browsing Context: By embedding malicious instructions within the comment sections of trusted web pages, attackers can deceive ChatGPT into executing these commands when summarizing the page content.

2. Zero-Click Indirect Prompt Injection in Search Context: This technique involves crafting natural language queries about specific websites. If these sites have been indexed by search engines like Bing or OpenAI’s crawler, ChatGPT may inadvertently execute embedded malicious instructions.

3. One-Click Prompt Injection: Attackers can create specially formatted links (e.g., chatgpt[.]com/?q={Prompt}) that, when clicked, prompt ChatGPT to automatically execute the query specified in the q= parameter.

4. Safety Mechanism Bypass: By exploiting the allow-listing of domains like bing[.]com, attackers can use Bing ad tracking links to mask malicious URLs, enabling them to be displayed and executed within ChatGPT conversations.

5. Conversation Injection: Malicious instructions embedded in a website can lead ChatGPT to produce unintended responses in subsequent interactions after summarizing the site, as the prompt becomes part of the conversational context.

6. Malicious Content Hiding: Utilizing a markdown rendering bug, attackers can conceal harmful prompts within code blocks, causing ChatGPT to process and execute them without displaying the malicious content to the user.

7. Memory Injection: By embedding hidden instructions in a website and prompting ChatGPT to summarize it, attackers can poison a user’s ChatGPT memory, leading to persistent unintended behaviors in future interactions.

Implications and Responses

These vulnerabilities underscore the evolving challenges in securing AI-driven platforms. The ability to manipulate ChatGPT through indirect prompt injections not only threatens user privacy but also raises concerns about the integrity of AI-generated content.

OpenAI has acknowledged these issues and has taken steps to address some of the identified vulnerabilities. However, the dynamic nature of AI development necessitates continuous vigilance and proactive measures to safeguard against emerging threats.

Broader Context of AI Security

The discovery of these vulnerabilities is part of a larger pattern of security challenges facing AI technologies. Previous incidents have demonstrated various prompt injection attacks capable of bypassing safety mechanisms in AI tools. For instance, techniques like PromptJacking have exploited remote code execution vulnerabilities in other AI platforms, leading to unsanitized command injections.

Recommendations for Users and Developers

To mitigate the risks associated with these vulnerabilities, the following measures are recommended:

– Regular Updates: Ensure that ChatGPT and related applications are updated to the latest versions, incorporating patches and security fixes provided by OpenAI.

– User Awareness: Educate users about the potential risks of interacting with untrusted content and the importance of verifying the sources of information processed by AI tools.

– Enhanced Monitoring: Implement monitoring mechanisms to detect unusual behaviors or outputs from AI systems, which may indicate exploitation attempts.

– Robust Input Validation: Developers should enforce strict input validation protocols to prevent malicious prompts from being processed by AI models.

Conclusion

The identification of these ChatGPT vulnerabilities serves as a critical reminder of the importance of security in the rapidly advancing field of artificial intelligence. As AI becomes increasingly integrated into daily applications, ensuring the protection of user data and maintaining the integrity of AI interactions must remain paramount.