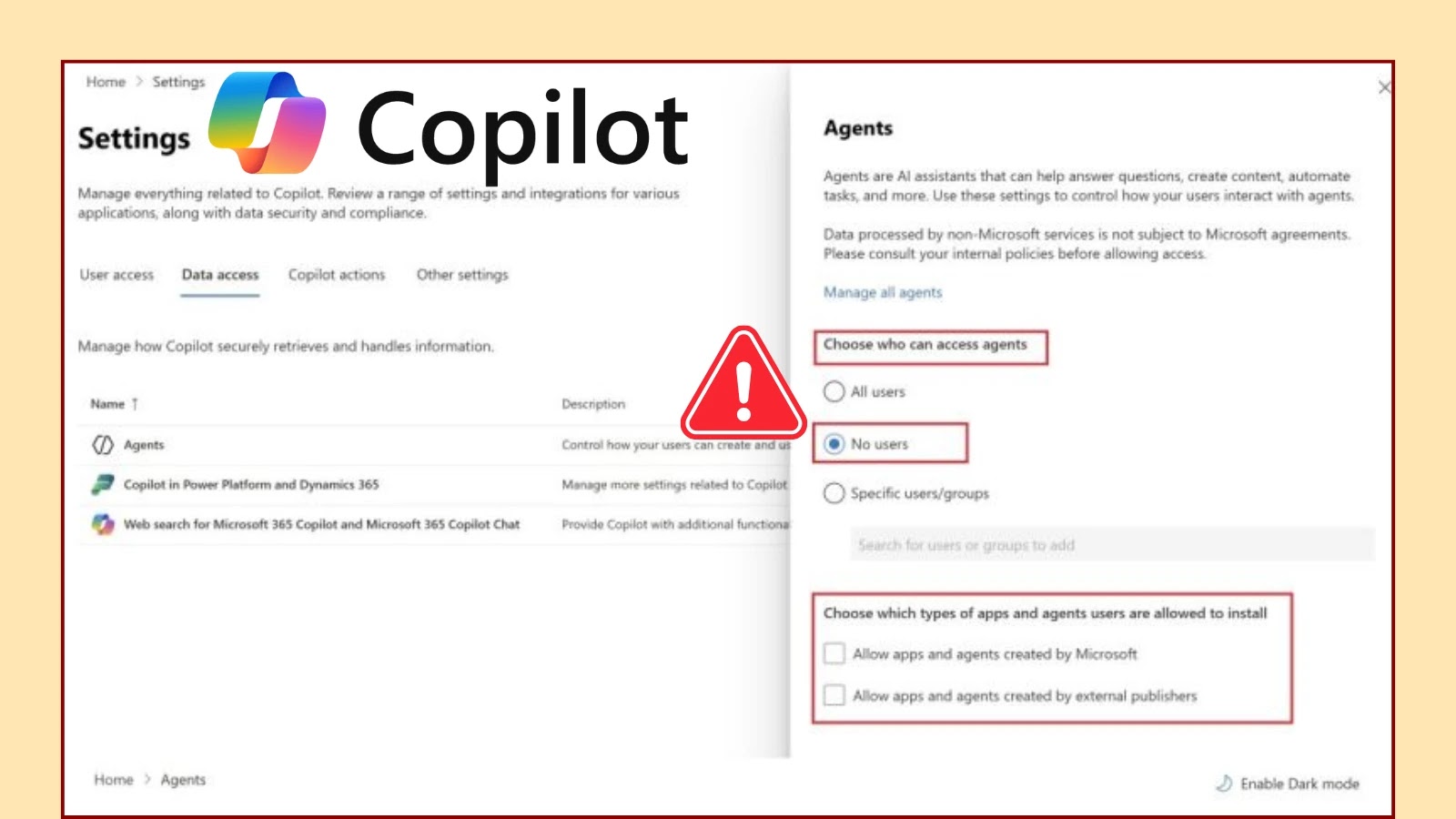

In May 2025, Microsoft introduced 107 Copilot Agents across Microsoft 365 tenants, aiming to enhance productivity through AI-driven assistance. However, security experts have identified a critical flaw in the agent access policy, allowing users to install certain AI agents despite administrative restrictions.

Key Findings:

1. Policy Bypass: The NoUsersCanAccessAgent policy, designed to prevent agent installation, is being circumvented, enabling users to install specific Copilot Agents.

2. Manual Revocation Challenges: Administrators are compelled to revoke access to each agent individually using PowerShell, increasing operational complexity and the potential for errors.

3. Mitigation Strategies: Organizations are advised to conduct regular audits, enforce Conditional Access policies, and monitor agent activities to mitigate associated risks.

Detailed Analysis:

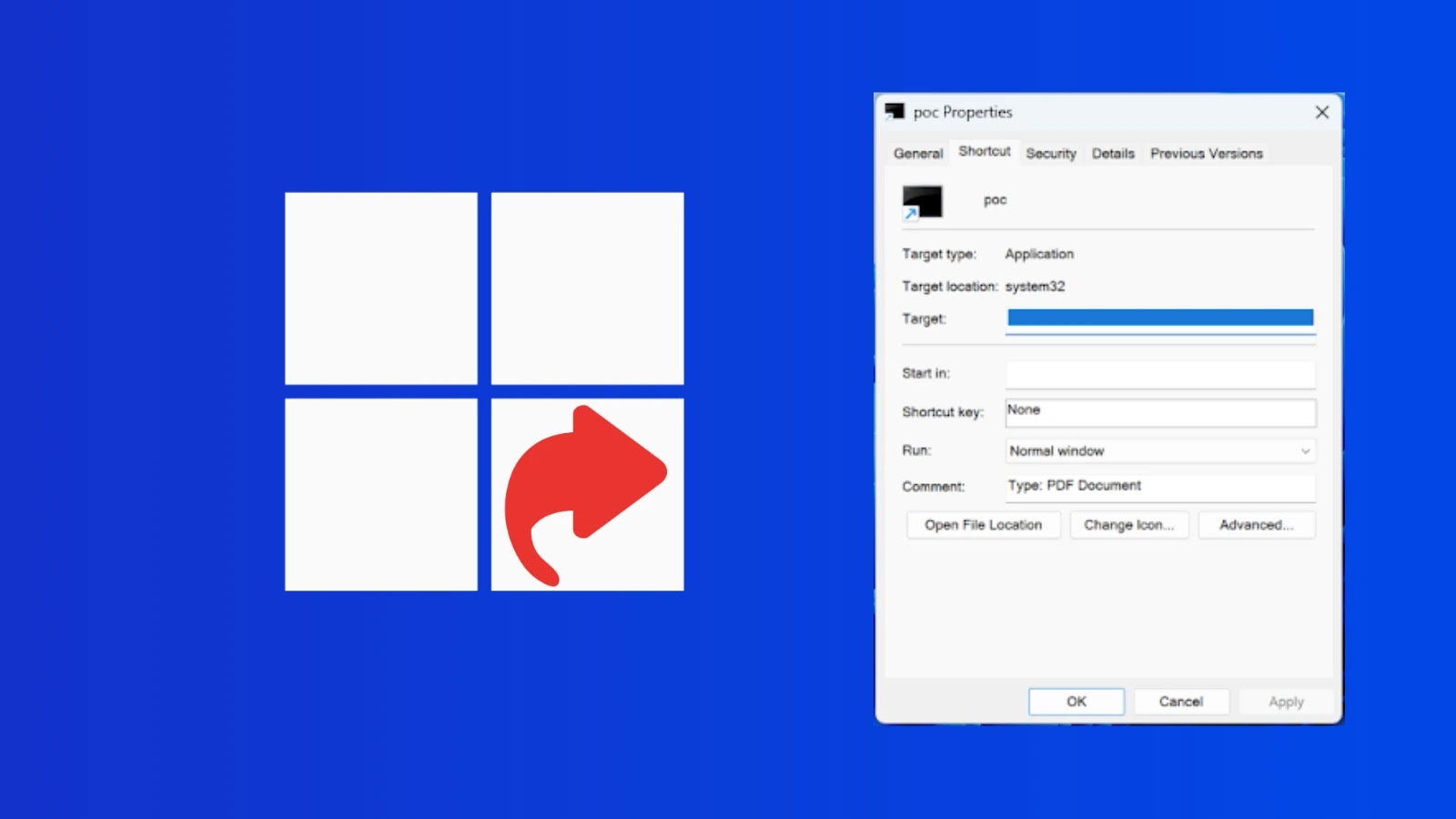

Despite administrators configuring the Copilot Agent Access Policy to disable user access, certain Microsoft-published and third-party agents remain readily installable, potentially exposing sensitive corporate data and workflows to unauthorized use.

When administrators set the policy to NoUsersCanAccessAgent, the expectation is that all Copilot Agents are hidden from end-user installation across Teams, Outlook, and other Microsoft 365 services. However, testing by cybersecurity researcher Steven Lim shows that agents such as ExpenseTrackerBot and HRQueryAgent continue to appear in the Copilot panel despite the global policy restriction.

In many organizations, manual intervention is now required. Administrators must execute PowerShell commands to revoke access to each agent individually:

```powershell
Revoke-CopilotAgentAccess -AgentName ExpenseTrackerBot
```

This workaround must be run per-agent and per-tenant, introducing operational overhead and risk of oversight in large deployments. For external publisher agents, similar manual revocation is necessary, further complicating lifecycle management.
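Because the workaround is applied one agent at a time, generating the commands from a single reviewed list can reduce the chance of missing one. The Python sketch below only builds command strings, using the cmdlet and parameter exactly as quoted above; it does not connect to a tenant or run anything. The `build_revoke_commands` helper, the name-validation rule, and the sample list are illustrative assumptions, not part of any Microsoft tooling.

```python
import re

# Cmdlet and parameter as quoted in the article; not independently verified here.
REVOKE_TEMPLATE = "Revoke-CopilotAgentAccess -AgentName {name}"

# Assumption: agent names are simple identifiers (letters, digits, "_" or "-").
_VALID_NAME = re.compile(r"[A-Za-z0-9_-]+")


def build_revoke_commands(agent_names):
    """Return one revocation command per unique agent name, preserving order."""
    commands = []
    seen = set()
    for raw in agent_names:
        name = raw.strip()
        if not name or name in seen:
            continue  # skip blank entries and duplicates
        if not _VALID_NAME.fullmatch(name):
            # Refuse anything that could alter the generated command line.
            raise ValueError(f"unexpected characters in agent name: {name!r}")
        seen.add(name)
        commands.append(REVOKE_TEMPLATE.format(name=name))
    return commands


if __name__ == "__main__":
    # Hypothetical review list; the two names come from the article's examples.
    agents = ["ExpenseTrackerBot", "HRQueryAgent", "ExpenseTrackerBot"]
    for command in build_revoke_commands(agents):
        print(command)
```

The generated lines can be reviewed and then pasted into an administrative PowerShell session, which keeps a record of exactly which agents were targeted in each tenant.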

Potential Risks:

Unauthorized access to AI-driven agents can lead to:

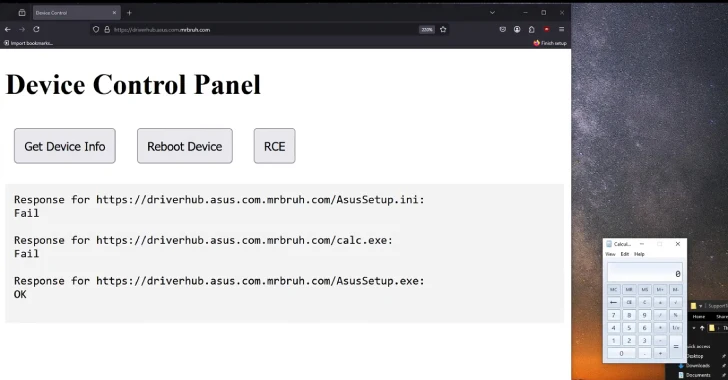

- Data Exfiltration: Agents like ExportDataAgent or SearchFileAgent could query SharePoint or OneDrive content beyond intended scopes, leading to data leaks.

- Uncontrolled Workflow Execution: Agents such as AutoInvoiceProcessor might execute custom RPA workflows without formal change control or audit logging, disrupting business processes.

- Compliance Violations: Unapproved AI models processing sensitive Personally Identifiable Information (PII) or regulated data could result in regulatory breaches.

Mitigation Strategies:

To mitigate these risks, Microsoft 365 administrators should:

1. Regular Audits: Run weekly discovery scripts to detect any agents bypassing the global policy.

2. Conditional Access Enforcement: Use Microsoft Entra ID (formerly Azure AD) Conditional Access to require Multi-Factor Authentication (MFA) or device compliance before any Copilot Agent can be installed, and retain agent invocation logs for review.

3. Monitoring and Reporting: Report policy enforcement failures via the Service Health Dashboard and track the resolution of identified bugs.
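The regular audit in step 1 can be sketched as a simple comparison: if the policy is set to NoUsersCanAccessAgent, any agent that is still installable is a bypass worth reporting. The Python example below assumes the agent inventory has already been exported by some other means; the `AgentRecord` fields, the `find_policy_bypasses` helper, and the sample data are hypothetical and do not reflect a real Microsoft 365 export format.

```python
from dataclasses import dataclass

# Policy value named in the article.
BLOCK_ALL_POLICY = "NoUsersCanAccessAgent"


@dataclass(frozen=True)
class AgentRecord:
    """One row of a hypothetical, previously exported agent inventory."""
    name: str
    publisher: str
    installable: bool  # True if end users can still install the agent


def find_policy_bypasses(policy, inventory):
    """Return agents that remain installable although the policy blocks all users."""
    if policy != BLOCK_ALL_POLICY:
        return []  # the policy is not meant to block everyone, so nothing to flag
    bypasses = [agent for agent in inventory if agent.installable]
    return sorted(bypasses, key=lambda agent: agent.name)


if __name__ == "__main__":
    # Illustrative data only; names are taken from the article's examples.
    inventory = [
        AgentRecord("HRQueryAgent", "Microsoft", True),
        AgentRecord("ExpenseTrackerBot", "Microsoft", True),
        AgentRecord("AutoInvoiceProcessor", "ThirdParty", False),
    ]
    for agent in find_policy_bypasses(BLOCK_ALL_POLICY, inventory):
        print(f"BYPASS: {agent.name} ({agent.publisher})")
```

Running a check like this on a weekly schedule and archiving the output gives administrators a dated record to attach when reporting enforcement failures.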

As AI agents become integral to productivity, it is critical that access policies designed to govern them function as intended. Administrators must proactively audit, monitor, and enforce controls to prevent inadvertent exposure of enterprise data and preserve compliance.