Meta has announced that it will resume training its artificial intelligence (AI) models on publicly available content from adult users in the European Union (EU). The decision comes nearly a year after the company paused the practice in response to data protection concerns raised by Ireland’s Data Protection Commission.

The initiative aims to improve Meta’s AI systems by exposing them to the cultural and linguistic diversity of European users. By training on public posts and comments shared by adults on platforms such as Facebook and Instagram, Meta seeks to make its AI-driven services more relevant and accurate for people in the region. Interactions with Meta’s AI assistant will also be used to refine the models. The company has stated that private messages and data from users under the age of 18 will not be included in the training process.

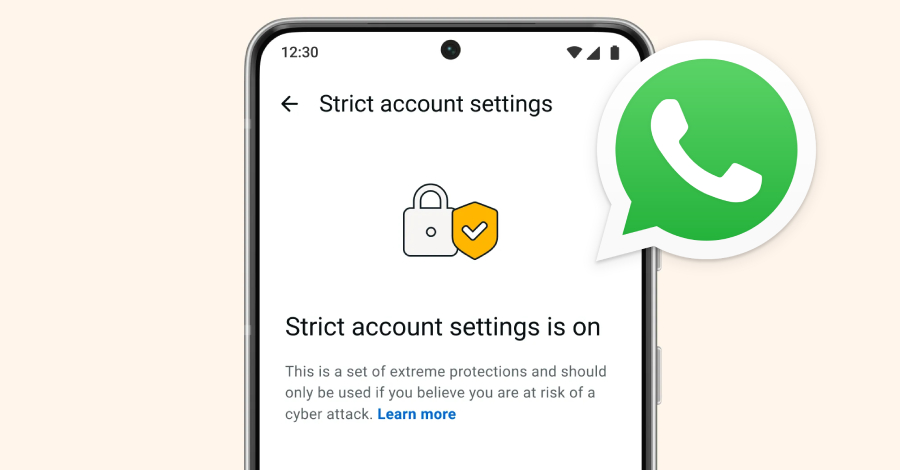

To provide transparency and user control, Meta will begin notifying EU users about this data usage through in-app messages and emails. These notifications will explain which types of data are used and for what purposes. Users will be able to object to the use of their public data for AI training through a dedicated form. Meta has committed to honoring all such objections, including forms submitted previously as well as any new ones.

This development follows an opinion issued by the European Data Protection Board (EDPB), which Meta says affirmed that its original approach meets its obligations under the EU’s stringent data protection laws. The company emphasizes that its practices are consistent with those of other tech giants such as Google and OpenAI, both of which, according to Meta, have already used European user data to train their AI models.

The resumption of AI training in Europe is a significant step for Meta as it tries to balance technological advancement with user privacy and regulatory compliance. The company says that training on public user data will allow it to deliver more personalized and effective AI-driven experiences to its European users.