In response to mounting concerns over the safety of teenage users, Character.AI, a platform that enables users to create and interact with AI-driven characters, has unveiled a suite of parental supervision tools. This initiative aims to provide guardians with insights into their teens’ engagement on the platform, fostering a safer digital environment.

Background and Context

Character.AI has faced significant scrutiny following multiple lawsuits alleging that the platform contributed to self-harm and exposure to inappropriate content among minors. Notably, Megan Garcia filed a wrongful death lawsuit alleging that a Character.AI chatbot led her 14-year-old son to take his own life. The suit claims that the chatbot engaged in explicit conversations with the teen and failed to respond appropriately when he discussed suicidal thoughts.

Introduction of Parental Supervision Tools

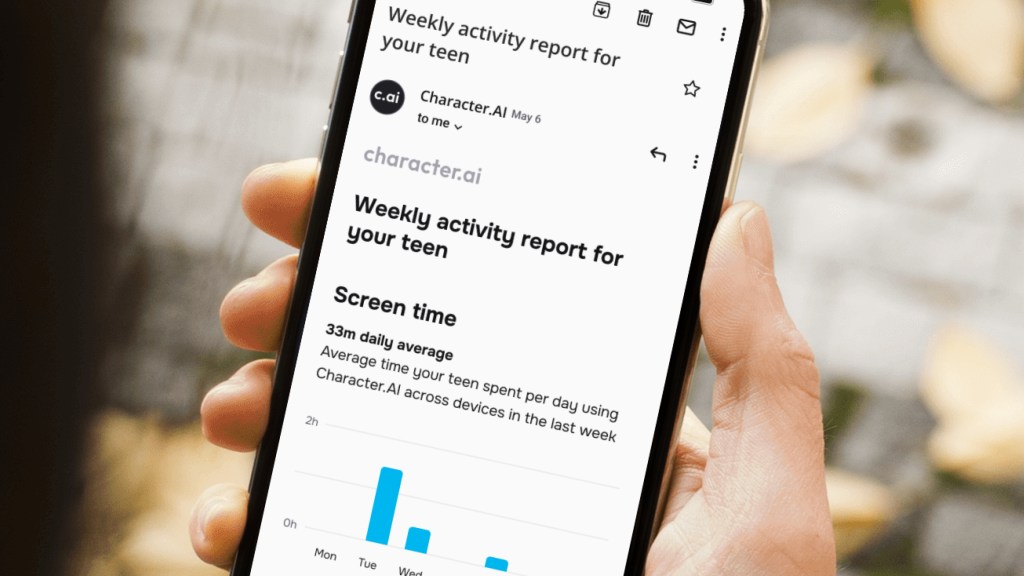

To address these concerns, Character.AI has introduced a “Parental Insights” feature. This tool sends weekly email summaries to parents, detailing their teen’s activity on the platform. The summaries include:

– Average daily time spent on the app and website.

– Duration of interactions with each character.

– A list of the top characters the teen engaged with during the week.

Importantly, while parents receive an overview of their child’s usage patterns, they do not have direct access to the content of the conversations. This approach aims to balance user privacy with parental oversight.

Implementation and User Participation

For the “Parental Insights” feature to function, teens must opt in by providing their parent’s email address. Because the teen initiates the setup, both the teen and the parent know the monitoring is in place, an arrangement intended to promote transparency and trust.

Additional Safety Measures

Beyond parental supervision tools, Character.AI has implemented several other safety features:

– Dedicated Teen Model: Character.AI has developed a separate language model for users under 18. It places more conservative limits on responses, particularly around sensitive and romantic content, so that conversations stay appropriate for younger audiences.

– Content Moderation: Enhanced classifiers detect and block user prompts that may elicit inappropriate content. If language related to self-harm or suicide is detected, a pop-up directs users to the National Suicide Prevention Lifeline (now the 988 Suicide & Crisis Lifeline).

– Editing Restrictions: Minors can no longer edit bot responses, a feature that lets users rewrite a bot’s replies and could be used to bypass content restrictions.

– Usage Notifications: To discourage overuse, users receive a notification after an hour-long session with the bots, encouraging them to take breaks and build healthier usage habits.

– Disclaimers: The platform has updated its disclaimers to clarify that characters are not real people, that what they say should be treated as fiction, and that it should not be relied upon as professional advice. Bots that describe themselves as therapists or doctors now carry warnings that they are not licensed professionals.

Industry and Expert Reactions

The introduction of these safety features has drawn mixed reactions. Some experts describe parental controls as a “band-aid on a bullet wound,” arguing that they do little to address the risks built into the product itself. Others acknowledge that parents can benefit from knowing how their children use AI chatbot applications.

Legal and Regulatory Landscape

The rollout of these tools comes amid increased legal scrutiny. Texas Attorney General Ken Paxton has opened investigations into Character.AI and other platforms over concerns related to child privacy and safety. The investigations aim to determine whether these platforms comply with Texas laws such as the Securing Children Online through Parental Empowerment (SCOPE) Act, which requires platforms to give parents tools to manage their children’s privacy settings, and the Texas Data Privacy and Security Act, which imposes strict notice and consent requirements on the collection of minors’ personal data.

Conclusion

Character.AI’s introduction of parental supervision tools is a concrete step toward improving the safety of teenage users on its platform. By giving parents insight into how their children use the service, without exposing the conversations themselves, the company aims to foster a more secure and transparent digital environment. As AI-driven platforms continue to evolve, ongoing collaboration between developers, parents, and regulators will be essential to protect the well-being of younger users.