In a groundbreaking demonstration, security researcher Matt Keeley showcased how artificial intelligence, specifically OpenAI’s GPT-4, can autonomously develop functional exploits for critical vulnerabilities before public proof-of-concept (PoC) exploits are available. This development underscores the evolving role of AI in cybersecurity, presenting both opportunities and challenges for the industry.

The Experiment: GPT-4’s Exploit Development

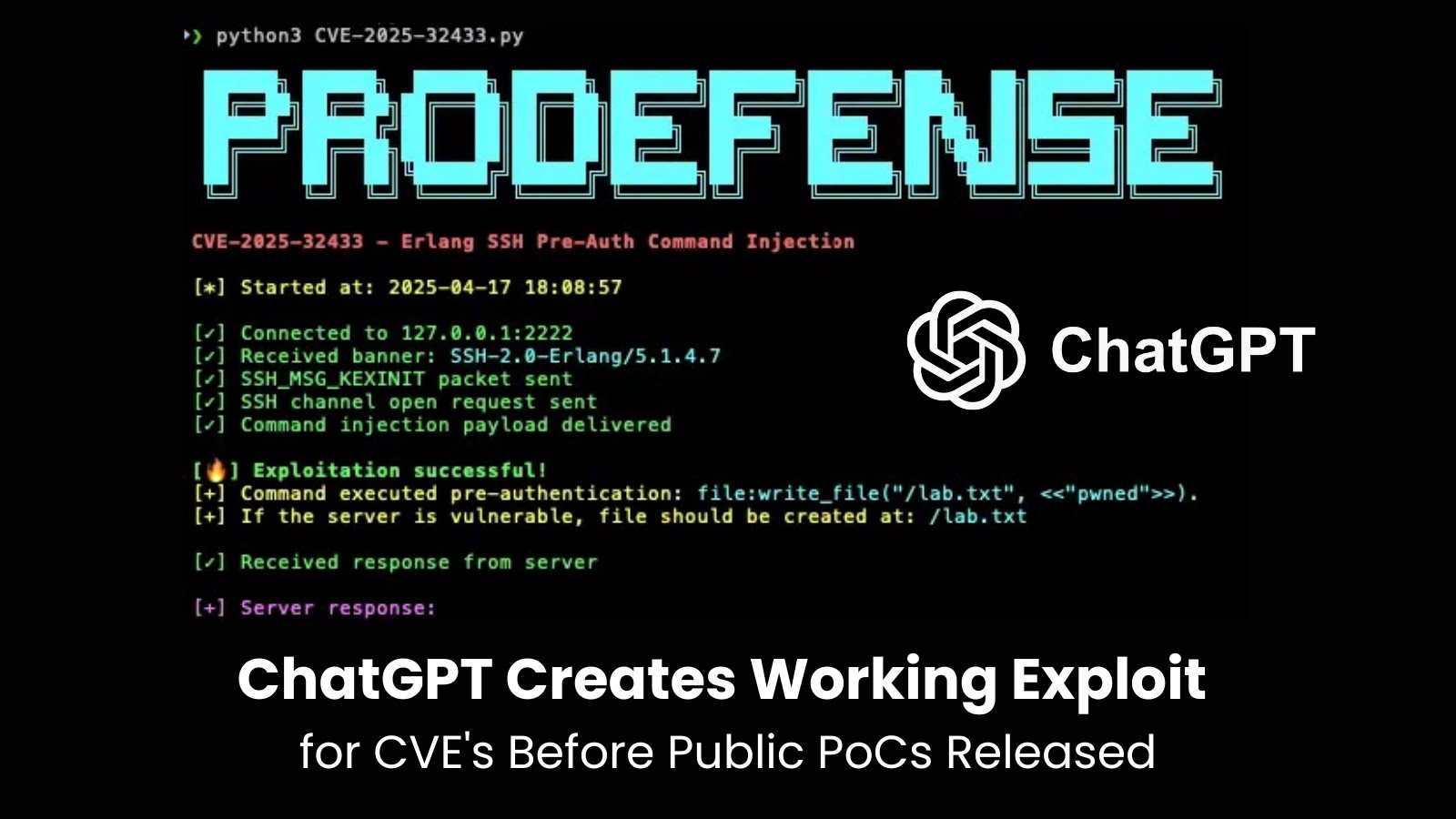

Keeley’s experiment focused on CVE-2025-32433, a critical vulnerability in Erlang/OTP’s SSH server implementation that was disclosed on April 16, 2025. The flaw allows unauthenticated remote code execution: the server mishandles SSH protocol messages sent during the initial connection phase, before authentication has completed. An attacker can therefore run arbitrary code with the privileges of the SSH server process, which means full system compromise if the daemon runs as root.

Upon learning that Horizon3.ai researchers had developed a PoC for this vulnerability but had not released it publicly, Keeley decided to test GPT-4’s capabilities. He provided the AI with the CVE description and prompted it to analyze and exploit the vulnerability. Remarkably, GPT-4:

1. Identified the vulnerable and patched versions of the code.

2. Developed a tool to compare the vulnerable and patched code.

3. Pinpointed the exact cause of the vulnerability.

4. Generated exploit code.

5. Debugged and refined the code until it functioned correctly.
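Step 2 in the list above, comparing vulnerable and patched code, is essentially a source diff. The following is a minimal sketch of that idea using Python's standard `difflib` module. It is not the tool GPT-4 produced. The helper name `patch_diff` and the two Erlang-like snippets are hypothetical placeholders, not the actual Erlang/OTP `ssh` sources.

```python
import difflib


def patch_diff(old_src: str, new_src: str, name: str = "file") -> list[str]:
    """Return unified-diff lines showing how new_src differs from old_src."""
    return list(
        difflib.unified_diff(
            old_src.splitlines(keepends=True),
            new_src.splitlines(keepends=True),
            fromfile=f"a/{name}",
            tofile=f"b/{name}",
        )
    )


# Hypothetical stand-ins for a "vulnerable" and a "patched" source file.
old = "handle(Msg, State) ->\n    process(Msg, State).\n"
new = (
    "handle(Msg, #state{authenticated = true} = State) ->\n"
    "    process(Msg, State);\n"
    "handle(_Msg, State) ->\n"
    "    reject(State).\n"
)

# Print the diff: lines prefixed "-" were removed, "+" were added.
for line in patch_diff(old, new, "example.erl"):
    print(line, end="")
```

In practice, the two inputs would be the same file checked out at the release before and after the fixing commit. Reading the resulting hunks is what narrows the search to the code the fix actually touched.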

Keeley noted: “GPT-4 not only understood the CVE description, but it also figured out what commit introduced the fix, compared that to the older code, found the diff, located the vuln, and even wrote a proof of concept. When it didn’t work? It debugged it and fixed it too.”

Implications for Cybersecurity

This experiment highlights the potential for AI to significantly accelerate vulnerability research and exploit development. Tasks that previously required specialized knowledge and extensive manual effort can now be accomplished more efficiently with AI assistance. However, this advancement also raises concerns about the accessibility of exploit development to malicious actors.

The rapid development of working exploits shortly after a vulnerability’s disclosure emphasizes the need for organizations to implement swift patching strategies. The affected Erlang/OTP versions (OTP-27.3.2 and prior, OTP-26.2.5.10 and prior, OTP-25.3.2.19 and prior) have been patched in newer releases. Organizations using Erlang/OTP SSH servers are urged to update immediately to fixed versions: OTP-27.3.3, OTP-26.2.5.11, or OTP-25.3.2.20.
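For defenders, the practical task is checking whether a given OTP version string falls below the fixed release for its line. The following is a minimal sketch, assuming versions are written like `OTP-26.2.5.10` and that the three fixed releases named in this article are the full set of interest. The names `FIXED`, `parse_otp`, and `is_patched` are hypothetical. Release lines other than 25, 26, and 27 return `None` rather than a verdict, so consult the official Erlang/OTP advisory for those.

```python
from typing import Optional

# First fixed release for each affected OTP release line, per the article.
FIXED = {
    27: (27, 3, 3),
    26: (26, 2, 5, 11),
    25: (25, 3, 2, 20),
}


def parse_otp(version: str) -> tuple[int, ...]:
    """Turn 'OTP-26.2.5.10' (or '26.2.5.10') into (26, 2, 5, 10)."""
    v = version.strip()
    if v.upper().startswith("OTP-"):
        v = v[4:]
    return tuple(int(part) for part in v.split("."))


def is_patched(version: str) -> Optional[bool]:
    """True if at or above the fixed release for its line, False if below,
    None if the release line is not one of those listed in the article."""
    parts = parse_otp(version)
    fixed = FIXED.get(parts[0])
    if fixed is None:
        return None
    # Tuples compare element by element, so (27, 3, 2) < (27, 3, 3)
    # and a shorter prefix such as (26, 2, 5) sorts before (26, 2, 5, 11).
    return parts >= fixed


for v in ["OTP-27.3.2", "OTP-27.3.3", "OTP-26.2.5.10", "OTP-25.3.2.20", "OTP-24.3.4"]:
    print(v, is_patched(v))
# OTP-27.3.2 False
# OTP-27.3.3 True
# OTP-26.2.5.10 False
# OTP-25.3.2.20 True
# OTP-24.3.4 None
```

Comparing integer tuples avoids the classic string-comparison bug, where "27.3.10" would sort before "27.3.3".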

Broader Context: AI in Cybersecurity

The use of AI in cybersecurity is not limited to exploit development. A 2024 study by researchers at the University of Illinois Urbana-Champaign found that a GPT-4-based agent, given the CVE descriptions, could exploit 87% of a benchmark of 15 one-day vulnerabilities. Security researchers have also demonstrated proof-of-concept mutating (polymorphic) malware built with ChatGPT that evaded detection by endpoint detection and response (EDR) systems.

These developments underscore the double-edged nature of AI in cybersecurity. AI can strengthen defenses and automate threat detection, but the same capabilities can be misused for malicious purposes. As AI continues to evolve, the cybersecurity community must remain vigilant and adapt its strategies to mitigate AI-driven threats.

Conclusion

The demonstration of GPT-4’s ability to autonomously develop exploits for critical vulnerabilities marks a significant milestone in the intersection of AI and cybersecurity. This capability presents both opportunities for enhancing security research and challenges in preventing misuse by malicious actors. As AI technologies continue to advance, it is imperative for the cybersecurity community to proactively address the implications, ensuring that AI serves as a tool for protection rather than exploitation.