A significant security flaw in ChatGPT allows attackers to embed malicious Scalable Vector Graphics (SVG) and image content directly into shared conversations. The vulnerability, tracked as CVE-2025-43714, affects ChatGPT through March 30, 2025, and exposes users to sophisticated phishing attacks and the spread of harmful content.

Understanding the Vulnerability

Security researchers discovered that ChatGPT improperly renders SVG code within shared chats. Instead of displaying SVG content as text inside code blocks, the platform renders it inline as live markup when a chat is reopened or shared via a public link. This behavior creates a stored cross-site scripting (XSS) vulnerability, allowing malicious code to run in users' browsers.
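Displaying SVG content as text inside a code block, rather than rendering it, amounts to escaping the markup before it reaches the page. The Python sketch below illustrates that idea only. The function name `render_as_code_block` and the sample payload are hypothetical, and the sketch assumes the HTML fragment is built as a string. The source does not describe how ChatGPT's interface is actually implemented.

```python
import html


def render_as_code_block(svg_source: str) -> str:
    """Return an HTML fragment that displays svg_source as literal text.

    html.escape turns <, >, & and quotes into character entities, so the
    browser shows the markup inside <pre><code> instead of parsing it as a
    live <svg> element with working event handlers.
    """
    return "<pre><code>" + html.escape(svg_source, quote=True) + "</code></pre>"


# Hypothetical payload: its onload handler would run if the SVG were rendered inline.
payload = '<svg xmlns="http://www.w3.org/2000/svg" onload="alert(1)"></svg>'
print(render_as_code_block(payload))
```

Because every `<` becomes `&lt;`, the escaped output contains no `<svg` tag for the browser to parse, so the `onload` handler never fires.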

Unlike raster formats such as JPEG or PNG, SVG files are XML-based vector images. They can embed JavaScript (through `<script>` elements and event-handler attributes such as `onload`) and HTML (through `<foreignObject>`). When such an SVG is rendered inline, the embedded scripts can run in the user's browser context, potentially leading to unauthorized actions or data exposure.
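To make the point concrete, the sketch below uses Python's standard `xml.etree.ElementTree` module to list the constructs in an SVG that can carry active content: `<script>` and `<foreignObject>` elements, `on*` event-handler attributes, and `javascript:` links. It is a hypothetical detection helper, not a complete sanitizer. `find_active_content` and `local_name` are illustrative names and are not part of any ChatGPT or OpenAI API.

```python
import xml.etree.ElementTree as ET

# Elements that can carry script (<script>) or embedded HTML (<foreignObject>).
ACTIVE_ELEMENTS = {"script", "foreignObject"}


def local_name(qualified: str) -> str:
    """Strip an ElementTree namespace prefix, e.g. '{http://...}svg' -> 'svg'."""
    return qualified.rsplit("}", 1)[-1]


def find_active_content(svg_source: str) -> list[str]:
    """Heuristically list constructs in an SVG document that can run script."""
    findings = []
    root = ET.fromstring(svg_source)
    for elem in root.iter():
        tag = local_name(elem.tag)
        if tag in ACTIVE_ELEMENTS:
            findings.append(f"<{tag}> element")
        for attr, value in elem.attrib.items():
            name = local_name(attr)
            # Event-handler attributes such as onload or onclick.
            if name.lower().startswith("on"):
                findings.append(f"{name} handler on <{tag}>")
            # Links (href or xlink:href) that execute JavaScript when followed.
            elif name == "href" and value.strip().lower().startswith("javascript:"):
                findings.append(f"javascript: URL on <{tag}>")
    return findings


sample = (
    '<svg xmlns="http://www.w3.org/2000/svg" onload="alert(1)">'
    "<script>alert(2)</script>"
    '<rect width="10" height="10"/>'
    "</svg>"
)
print(find_active_content(sample))  # ['onload handler on <svg>', '<script> element']
```

A heuristic scan like this can miss other vectors, so real systems generally rely on a maintained sanitizer or avoid rendering user-supplied SVG inline at all. The Python documentation also warns that `xml.etree.ElementTree` is not secure against maliciously constructed XML.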

Potential Impacts

The vulnerability opens the door to several kinds of abuse:

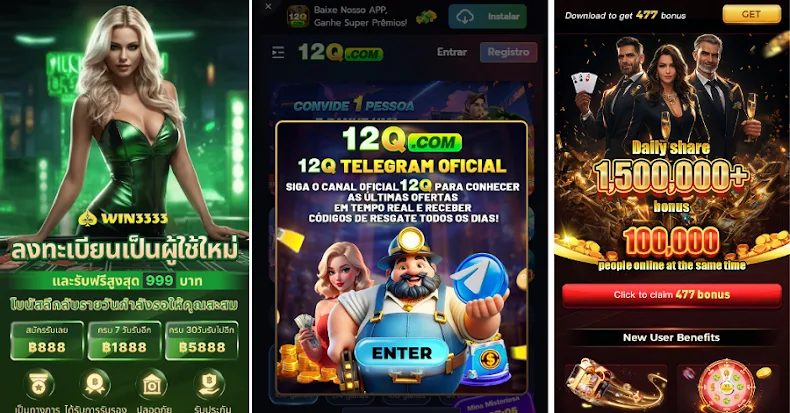

– Phishing Attacks: Attackers can craft deceptive messages embedded within SVG code that appear legitimate, tricking users into divulging sensitive information.

– Malicious Content Delivery: Attackers could create SVGs with flashing effects designed to induce seizures in photosensitive individuals, posing serious health risks.

– Session Hijacking: By executing scripts within the user’s browser, attackers might gain unauthorized access to user sessions, leading to data theft or further exploitation.

Mitigation Measures

In response to the reported vulnerability, OpenAI has taken initial steps by disabling the link-sharing feature in ChatGPT. However, a comprehensive fix addressing the root cause is still pending.

Users are advised to exercise caution when accessing shared ChatGPT conversations, especially from unknown or untrusted sources. It’s crucial to remain vigilant and avoid interacting with suspicious content that could exploit this vulnerability.

Broader Implications for AI Security

This discovery underscores the importance of securing AI chat interfaces against traditional web vulnerabilities. As AI platforms become more integrated into daily communication and workflows, ensuring their security is paramount to protect users from emerging threats.