At TED2025, Google gave a live demonstration of its Android XR platform running on prototype smart glasses. The presentation, led by Shahram Izadi, showed how artificial intelligence (AI) can be combined with augmented reality (AR) to assist with everyday tasks.

Introduction to Android XR

Android XR is Google’s operating system for extended reality devices, including smart glasses and mixed reality headsets. Developed in collaboration with Samsung and Qualcomm, the platform aims to blend digital information with the physical world. The first device to run Android XR, codenamed Project Moohan, is a headset developed by Samsung and slated for release later in 2025. ([blog.google](https://blog.google/products/android/android-xr/?utm_source=openai))

Live Demonstration Highlights

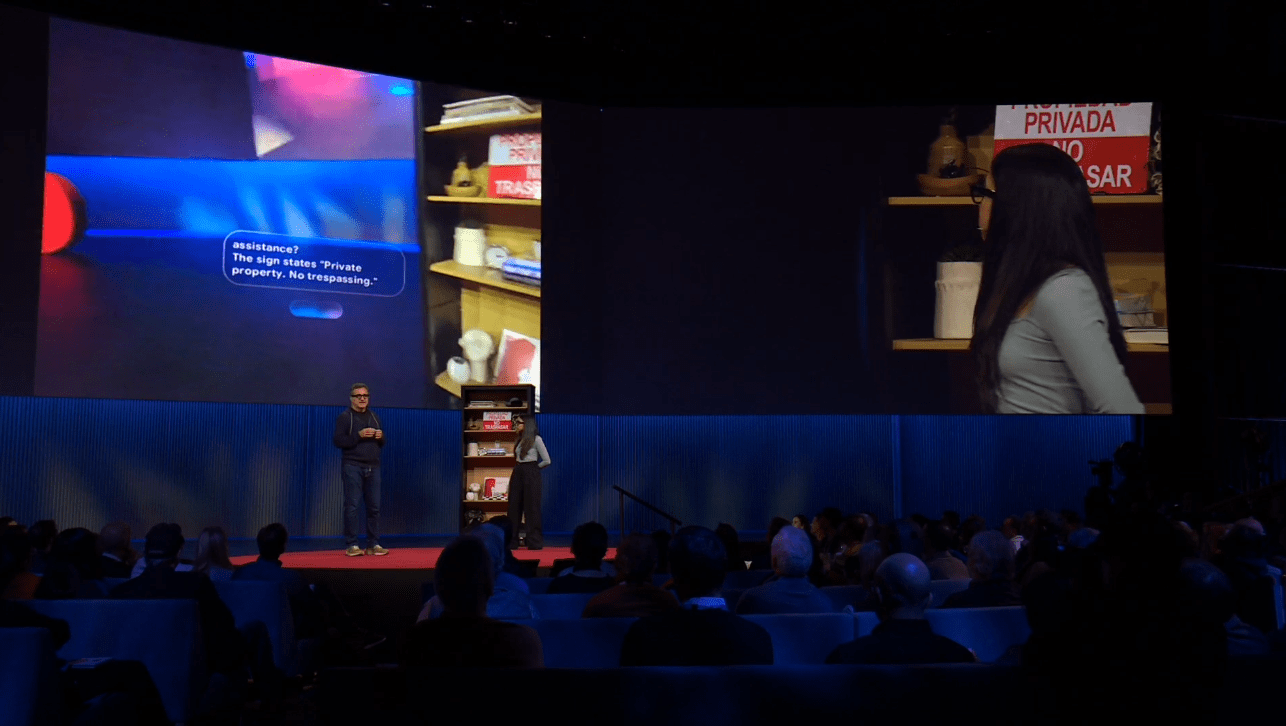

Izadi demonstrated Android XR’s capabilities on a pair of prototype smart glasses. The glasses, which support prescription lenses, connect to a smartphone and give the wearer access to their phone applications directly through the glasses.

The demonstration began with Gemini, Google’s AI assistant, generating a haiku on demand, illustrating the system’s ability to perform creative tasks. The presentation then showcased more complex functionalities:

– Visual Recognition and Recall: The presenter briefly observed a bookshelf and later asked Gemini to recall the title of a specific white book. Gemini accurately provided the title, demonstrating its capacity to process and remember visual information.

– Object Identification: When prompted to locate a hotel key card on the shelf, Gemini successfully identified and described its location, highlighting the system’s object recognition capabilities.

– Diagram Explanation: Gemini was presented with a complex diagram and provided a clear, concise explanation, showcasing its ability to interpret and convey information from visual data.

– Language Translation: The system translated a sign into English and then into Farsi (Persian), demonstrating real-time multilingual translation capabilities.

– Multilingual Interaction: The presenter conversed with Gemini in Hindi without altering any settings, and Gemini responded appropriately in Hindi, illustrating seamless multilingual communication.

– Music Recognition and Playback: Upon viewing a record, Gemini identified it and played a song from the album on demand, integrating visual recognition with audio playback.

– Navigation Assistance: The system provided heads-up directions and a 3D map, offering real-time navigation support directly through the smart glasses.

Integration with Samsung’s Project Moohan

The demonstration also covered Android XR on headsets, specifically Samsung’s Project Moohan. This device, set to launch later in 2025, integrates Gemini into the headset experience. The demo featured:

– Immersive Google Maps: Users can explore cities and landmarks in 3D, providing a more engaging navigation experience.

– Gaming Assistance: While playing games like Stardew Valley, Gemini offers tips and guidance, enhancing the gaming experience.

Technical Specifications and User Experience

The prototype smart glasses are designed to be lightweight and comfortable, resembling traditional eyewear. They feature a monocular in-lens display and a camera, enabling users to receive glanceable information without obstructing their view. The display delivers crisp text and rich graphical information, ensuring clarity and ease of use. ([9to5google.com](https://9to5google.com/2024/12/12/hands-on-android-xr-glasses/?utm_source=openai))

Users interact with the device through a touchpad on the right stem and two buttons: one for capturing photos and another for activating Gemini. Once activated, Gemini keeps listening, so users can issue commands and ask follow-up questions without prefacing each one with an activation phrase, which makes the exchange feel more like natural conversation.

Developer Engagement and Ecosystem Expansion

Google has released a developer preview of Android XR, encouraging developers to create applications and games for the platform. By supporting tools like ARCore, Android Studio, Jetpack Compose, Unity, and OpenXR, Google aims to foster a vibrant ecosystem of developers and device manufacturers. This initiative is expected to lead to a diverse range of Android XR devices catering to various user needs. ([blog.google](https://blog.google/products/android/android-xr/?utm_source=openai))

Future Prospects

Android XR aims to change how users interact with technology by blending digital information with the physical world. With the anticipated release of Samsung’s Project Moohan and ongoing collaborations with other hardware manufacturers, more extended reality devices are likely to follow. As AI and AR technologies continue to evolve, Google is positioning Android XR as a common platform for these devices.

Conclusion

Google’s live demonstration of Android XR on prototype smart glasses at TED2025 provided a compelling glimpse into the future of wearable technology. By integrating AI with AR, Android XR offers a platform that enhances daily activities through intuitive and immersive interactions. As the ecosystem grows and more devices become available, users can look forward to a new era of computing that seamlessly integrates digital and physical realities.